An article titled “I Compiled a List of Tech’s ‘Next Big Things’ So You Wouldn’t Have to” was published in 2018. As anyone reading it in the 2020s will notice, a lot of what was written then is now obsolete. An update is therefore needed, highlighting the key emerging technologies that will be all the rage in 2022, 2025 and the 2030s.

Obviously, these dates should be taken with a grain of salt: predictions are wrong more often than not. They are often wrong because we tend to use history, which is at heart the study of surprises and changes, as a guide to the future. This should however not stop us from aiming to better understand the future of technology: the knowledge gained through planning is crucial to the selection of appropriate actions as future events unfold. We don’t know the answer, but we can at least ask useful questions and catalyse the conversation.

By now, we’ve all heard about blockchain revolutionising just about every industry imaginable. Banking, politics, healthcare… all could technically benefit from the creation of a decentralised digital ledger which stores copies of the same records in many places, thus making forgery virtually impossible. Identity is verified through cryptographic calculations, making identity theft virtually impossible, too.
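
To make the “ledger that is hard to forge” idea concrete, here is a minimal Python sketch of the hash-chaining at the heart of a blockchain: each block stores the hash of the previous one, so quietly editing an old record breaks every link after it. It is an illustration under simplifying assumptions: a single in-memory copy, no network of nodes, no consensus mechanism (such as proof of work) and no digital signatures, all of which real blockchains add on top. The helper names are hypothetical.

```python
import hashlib
import json


def block_hash(block: dict) -> str:
    """SHA-256 digest of a block's contents, serialised deterministically."""
    payload = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_block(chain: list, data: str) -> None:
    """Append a block that records the hash of the block before it."""
    prev = block_hash(chain[-1]) if chain else "0" * 64  # genesis placeholder
    chain.append({"index": len(chain), "data": data, "prev_hash": prev})


def is_valid(chain: list) -> bool:
    """A chain is valid if every block points at its predecessor's hash."""
    return all(
        chain[i]["prev_hash"] == block_hash(chain[i - 1])
        for i in range(1, len(chain))
    )


ledger = []
for entry in ["Alice pays Bob 5", "Bob pays Carol 2", "Carol pays Dan 1"]:
    append_block(ledger, entry)

print(is_valid(ledger))  # True
ledger[0]["data"] = "Alice pays Bob 500"  # tamper with an old record
print(is_valid(ledger))  # False: block 1 no longer matches block 0's hash
```

In a real network, thousands of nodes each hold a copy and would reject the tampered version, which is where the decentralisation comes in.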

There is however one word which stands out in the description above. Decentralised. Banks, governments, hospitals… these institutions don’t want to see their power curtailed (unless on their own terms). As such, it is likely that we will see some advances in the blockchain space, but it will remain on the fringes of technology, missing the revolution predicted by its (many) fans.

More on blockchain here [Investopedia].

Often mentioned in the same breath as blockchain, cryptocurrencies use the principles explained above to facilitate the exchange of goods and services online (again in a decentralised fashion, which is one of their main appeals).

Sounds fantastic, but there are two big issues with cryptocurrencies:

- Firstly, its key appeal (excluding illegal dealings) is that it’s cool and trendy. It was never meant to sustain the attention it got in 2017, and will never recover from the crypto-bros’ unrelenting idiocy. The technology works; there’s just no mass market.

- Secondly, its value is VERY subjective (unlike gold, don’t @ me). Cryptocurrencies are always either in pre-bubble or bubble territory. Add to that the decentralised aspect that governments and banks will seek to discredit, and you can be sure that it will continue to be a mere toy Chad keeps bringing up at frat parties (there’s always a Chad).

More on cryptocurrency here [Investopedia].

Artificial Intelligence is already everywhere in 2020; it’s just not as fun as we thought it’d be. If you’ve missed the AI train, it can be described as follows: the increase in storage space (cloud), computing power (chips) and access to massive datasets (e-commerce, social media…) has allowed companies to build statistical models on steroids which improve as they are fed new information.

Affective AI would take this process one step further and apply it to emotions. Effectively, an algorithm could tell your mood from the way you look (by training a deep learning algorithm on facial data), the way you write, or the way you speak, and offer a product or service accordingly. Feeling happy? How about a Starbucks advert for a frappuccino to keep the good times coming? Feeling down? How about a Starbucks advert for a frozen coffee to turn that frown upside down?
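
The “infer a mood, then pick an advert” pipeline can be sketched in a few lines of Python. Note the swap: the paragraph describes a deep learning model trained on faces, text or voice, whereas this sketch uses a much simpler keyword lexicon on text only. The word lists, scores and advert strings are all hypothetical, chosen purely to show the shape of the pipeline.

```python
# Hypothetical lexicon; a real system would learn these associations from data.
MOOD_WORDS = {
    "happy": 1, "great": 1, "love": 1, "excited": 1,
    "sad": -1, "tired": -1, "awful": -1, "down": -1,
}

# Hypothetical mapping from inferred mood to the advert that gets shown.
ADS = {
    "positive": "Frappuccino to keep the good times coming",
    "negative": "Frozen coffee to turn that frown upside down",
    "neutral": "Regular coffee",
}


def infer_mood(text: str) -> str:
    """Sum the polarity of known words and bucket the total into a mood."""
    words = (w.strip(".,!?").lower() for w in text.split())
    score = sum(MOOD_WORDS.get(w, 0) for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def pick_ad(text: str) -> str:
    """Choose the advert matching the mood inferred from a message."""
    return ADS[infer_mood(text)]


print(pick_ad("I'm so happy today, great weather!"))
# Frappuccino to keep the good times coming
print(pick_ad("Feeling tired and a bit down."))
# Frozen coffee to turn that frown upside down
```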

More on Artificial Intelligence here [The Pourquoi Pas], and more on affective computing here [MIT].

Most great technologies aren’t considered to be revolutionary until they reach the public en masse (forgive my French). This may be one of the reasons there’s been so much disappointment in AI of late. Indeed, only major companies have been able to benefit from automating tasks that once required human input, while the petit peuple (the common folk) is forced to continue using comparatively medieval algorithms. This can in part be explained by a lack of computing power within individual households, but is mostly a data problem.

This may not be the case for long. Companies are realising that renting an algorithm gives the double benefit of generating extra revenue from an existing asset, while extracting more data from customers to feed the beast. As such, get ready to witness the rise of AI platforms and marketplaces, which will promise to provide algorithms that specifically match unique customer pain points (chatbots and digital assistants are only the beginning). As devs get automated and join the gig economy, this movement is likely to expand exponentially. This would allow smaller companies, and even individuals, to optimise their day-to-day processes. If that seems harmful to our collective mental health, follow your instincts.

More on AI as a service here [Towards Data Science].

The trend of artificial intelligence in our homes is already ongoing, and will only accelerate over the next few years. In fact, we’ve already become accustomed to Google’s Nest and Amazon’s Alexa being able to adjust the settings of smart objects within our houses to fit pre-set parameters.

But these two products are just the beginning: as with most things internet-related, these services benefit from network effects, and will exponentially gain customer value as functionalities are added. An algorithm that can make a cup of coffee while opening the blinds and increasing the bathroom temperature when it senses someone waking up is a lot more valuable than the sum of three different algorithms doing these tasks.
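
One common way to wire up that kind of coordinated behaviour is an event bus: a single sensor event (“someone woke up”) fans out to several routines at once. The Python sketch below assumes a simple publish/subscribe design; the event name and device actions are hypothetical, and real devices would be driven through their own APIs rather than returning strings.

```python
from collections import defaultdict
from typing import Callable

# A tiny publish/subscribe hub: sensors publish events, routines subscribe.
handlers: dict[str, list[Callable[[], str]]] = defaultdict(list)


def on(event: str):
    """Decorator registering a routine to run whenever `event` fires."""
    def register(fn: Callable[[], str]) -> Callable[[], str]:
        handlers[event].append(fn)
        return fn
    return register


def publish(event: str) -> list[str]:
    """Run every routine subscribed to `event`, in registration order."""
    return [fn() for fn in handlers[event]]


# Hypothetical device actions standing in for real smart-home commands.
@on("person_woke_up")
def start_coffee() -> str:
    return "coffee machine: brewing"


@on("person_woke_up")
def open_blinds() -> str:
    return "blinds: open"


@on("person_woke_up")
def warm_bathroom() -> str:
    return "bathroom heating: on"


print(publish("person_woke_up"))
# ['coffee machine: brewing', 'blinds: open', 'bathroom heating: on']
```

Adding a new routine is one more decorated function, which is why each extra connected device makes the whole system more useful.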

More on connected homes here [McKinsey]

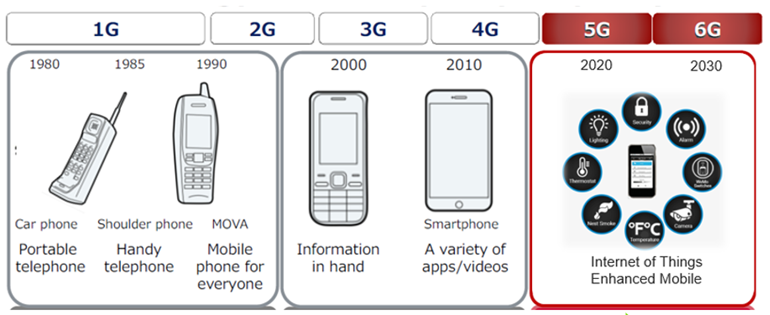

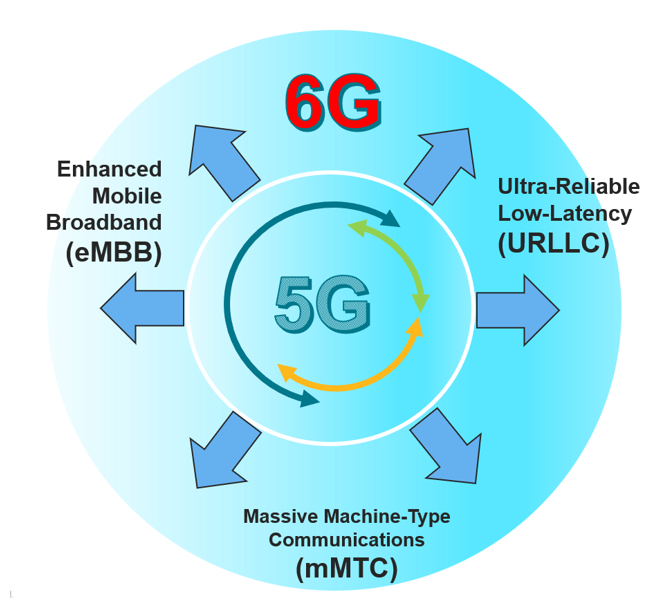

Of course, connected objects cannot afford to be as laggy as the original iPhone (shots fired): they must transmit massive amounts of data quickly and reliably. That’s where 5G comes in.

5G is the logical successor to 4G, and achieves much greater speeds thanks to higher-frequency radio waves. Though this seems simple enough, a few terms have to be understood to fully capture the difficulty of implementing 5G throughout the world.

-

Millimeter waves : this refers to a specific part of the radio frequency spectrum between 24GHz and 100GHz, which have a very short wavelength. Not only is this section of the spectrum pretty much unused, but it can also transfer data incredibly fast, though its transfer distance is shorter.

-

Microcells, femtocells, picocells : Small cell towers which act as relays within comparatively small areas such as large buildings. This infrastructure is necessary : as highlighted above, 5G transfer distance is much shorter than that of 4G (and struggles to go through thick walls).

-

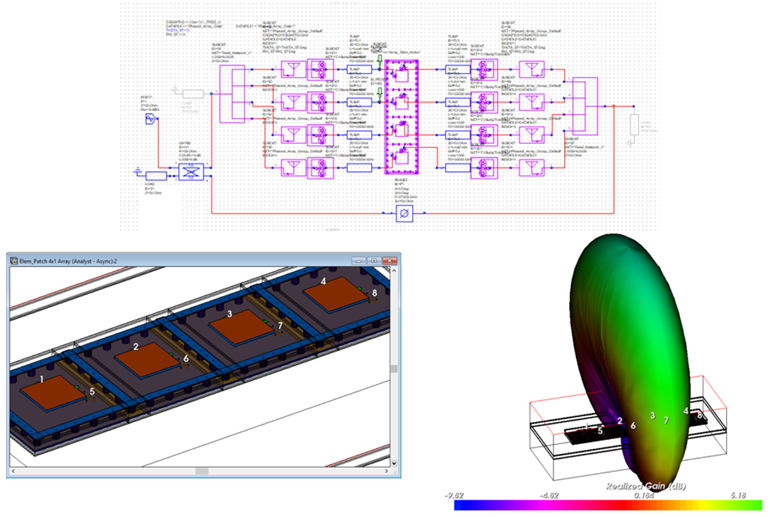

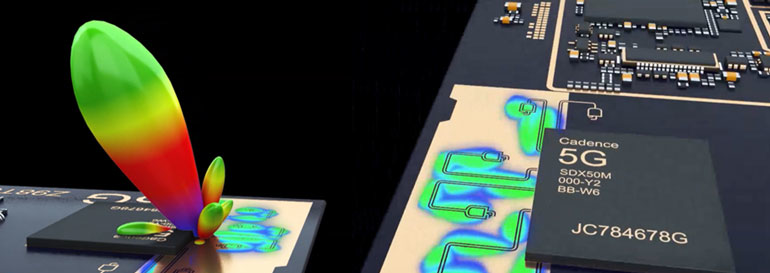

Massive MIMO : The ability to transfer and receive much more data than when using 4G, from a wider variety of sources.

-

Beamforming : all these transfers need to be organised and choreographed. Beamforming does just that. Also, it sounds cool.

-

Full Duplex : the ability to send and receive data at the same time, on the same wavelength.
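
Why do millimeter waves travel less far? A quick way to see it is the wavelength formula λ = c / f and the textbook free-space path loss, FSPL = 20·log10(4πdf / c). The Python sketch below assumes 2.6 GHz as a representative 4G band and 28 GHz as a representative millimeter-wave band; it models ideal free space between isotropic antennas, ignoring the walls, rain and foliage that make millimeter waves fare even worse in practice (and the beamforming that claws some of that loss back).

```python
import math

C = 299_792_458.0  # speed of light in m/s


def wavelength_mm(freq_hz: float) -> float:
    """Wavelength lambda = c / f, returned in millimetres."""
    return C / freq_hz * 1000


def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss in dB between ideal isotropic antennas.

    FSPL = 20 * log10(4 * pi * d * f / c); obstacles are ignored.
    """
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / C)


for label, f in [("4G band (assumed 2.6 GHz)", 2.6e9),
                 ("mmWave low edge (24 GHz)", 24e9),
                 ("mmWave high edge (100 GHz)", 100e9)]:
    print(f"{label}: wavelength {wavelength_mm(f):.1f} mm, "
          f"loss over 100 m {fspl_db(100, f):.1f} dB")

# Extra loss from moving 2.6 GHz -> 28 GHz over the same distance:
# about 20.6 dB, i.e. over 100x less received power in this idealised model.
print(round(fspl_db(100, 28e9) - fspl_db(100, 2.6e9), 1))
```

That extra loss is what the dense network of small cells described above is there to compensate for.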

The technology will have a huge effect on most industries, as it will increase the speed and quantity of data transmitted by orders of magnitude, as well as improve the quality of the connection. Among other things, it will connect autonomous vehicles and drones to the internet, and allow major advances in virtual reality and IoT. 5G is therefore not a technology that should be taken lightly.

More on 5G here [CNN]

Speaking of the internet… Over the next few years, SpaceX plans to deploy up to 42,000 satellites to provide internet access anywhere on the planet. The company isn’t alone in this niche: the OneWeb constellation aims to include 600 satellites by 2022, and Amazon has announced plans to launch 3,236 low-orbit satellites to cover areas with no connectivity.

All this is made possible by the falling cost of launching these small satellites into low Earth orbit. A lower altitude would also make managing fleets a lot easier and cleaner.
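
A well-known side benefit of flying low is latency. The Python sketch below computes the lower bound on round-trip signal delay for a simple “bent-pipe” link (user to satellite to ground station, and back), assuming the satellite is directly overhead and ignoring processing and terrestrial delays. The 550 km altitude is an assumption, typical of one of Starlink’s orbital shells; 35,786 km is the altitude of a geostationary orbit.

```python
C_KM_PER_S = 299_792.458  # speed of light in km/s


def min_round_trip_ms(altitude_km: float) -> float:
    """Lower bound on round-trip delay for a 'bent-pipe' satellite link.

    The request goes up and down (2 * altitude) and so does the reply,
    hence 4 * altitude. Processing and ground-network delays are ignored.
    """
    return 4 * altitude_km / C_KM_PER_S * 1000


orbits = {"LEO (~550 km, assumed)": 550, "GEO (35,786 km)": 35_786}
for name, alt in orbits.items():
    print(f"{name}: at least {min_round_trip_ms(alt):.0f} ms round trip")
```

Under these assumptions, a low-orbit link has a floor of roughly 7 ms against roughly 477 ms for a geostationary one, which is what makes video calls and gaming viable over low-orbit constellations.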

The deployment of so many objects in space, however, poses problems: interference with other satellite services, a higher risk of collisions, and disruption of astronomical observation.

More on mega-constellations here [The Verge]

2020 was supposed to be the year of the autonomous car. That’s not worked out quite as expected. The “coronavirus setback” will not, however, dampen the spirits of large companies, which will continue to update their algorithms to create cars that do away with drivers entirely.

As a quick reminder, it is generally agreed that there are six levels of autonomous driving, from level 0 (“no automation”) to level 5 (“full automation”). Levels 0 to 2 require extensive human monitoring, while levels 3 to 5 rely on algorithms to monitor the driving environment. The most advanced driver-assistance systems on the market (Tesla’s included) are still classed as level 2, while some test fleets are experimenting with levels 3 and 4. It is hoped that we can make the jump to level 5 (full driving automation) by 2025, if not earlier. But the road ahead is long, as issues ranging from ethical dilemmas to statistical headaches still plague the industry.
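
The split between levels 0 to 2 (human monitors the road) and levels 3 to 5 (the system does) maps neatly onto a small lookup. This Python sketch paraphrases the level names from the SAE J3016 classification; the helper function is hypothetical and simplifies level 3, where the human must still be ready to take over when the system asks.

```python
from enum import IntEnum


class DrivingAutomation(IntEnum):
    """Driving automation levels, names paraphrased from SAE J3016."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def who_monitors(level: DrivingAutomation) -> str:
    """Who watches the driving environment: human up to 2, system from 3."""
    if level <= DrivingAutomation.PARTIAL_AUTOMATION:
        return "human driver"
    return "automated system"


for level in DrivingAutomation:
    print(level.value, level.name, "->", who_monitors(level))
```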

Even if level 5 is reached, it’s likely that we will never truly replace the car as we know it, but will instead create special roads and spaces for autonomous cars, so that the two don’t mix. Indeed, the car as we know it is so central to our daily lives that changing it may mean rebuilding most of our world: parking would become less important, charging stations would change, the way pedestrians interact with safer roads would be forever altered…

More on Autonomous vehicles here [Spectrum].

First things first: scientists have been announcing the arrival of the quantum computer for decades. But this time might be it. In October 2019, Google announced that it had achieved quantum supremacy (a quantum computer outperforming a conventional computer on a particular task) by performing, in about three minutes, a calculation that it estimated would take approximately 10,000 years on a conventional supercomputer. These figures were challenged by IBM, which estimated that a conventional supercomputer could have solved it in just 2.5 days.

Quantum computers, in which bits are replaced by qubits that can exist in a superposition of states (a qubit can be both 0 and 1 at the same time), could in theory be much faster and more efficient than their older brothers on certain problems, but tend to suffer from decoherence (the loss of quantum information to the environment). Nevertheless, developing them for pharmaceutical companies, for example, could theoretically lead to major breakthroughs in drug discovery.
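
Superposition is easier to picture with a tiny simulation. A single qubit can be described by two complex amplitudes, one for 0 and one for 1; the Hadamard gate turns a plain 0 into an equal mix of both, and measuring it then yields 0 or 1 with 50% probability each. This is a minimal pure-Python sketch of an ideal qubit (the helper names are hypothetical); it does not model decoherence.

```python
import math
import random

# A qubit as two complex amplitudes (alpha, beta) for the states 0 and 1,
# with |alpha|^2 + |beta|^2 = 1.
Qubit = tuple[complex, complex]

ZERO: Qubit = (1 + 0j, 0j)


def hadamard(q: Qubit) -> Qubit:
    """Hadamard gate: maps 0 to an equal superposition of 0 and 1."""
    a, b = q
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(q: Qubit) -> tuple[float, float]:
    """Born rule: probability of reading 0 or 1 when measuring."""
    return (abs(q[0]) ** 2, abs(q[1]) ** 2)


def measure(q: Qubit, rng: random.Random) -> int:
    """Measurement collapses the superposition into one classical bit."""
    p0, _ = probabilities(q)
    return 0 if rng.random() < p0 else 1


plus = hadamard(ZERO)
print(probabilities(plus))            # roughly (0.5, 0.5)
print(probabilities(hadamard(plus)))  # back to roughly (1.0, 0.0)

rng = random.Random(42)
counts = [0, 0]
for _ in range(10_000):
    counts[measure(plus, rng)] += 1
print(counts)  # close to a 50/50 split

# n qubits need 2**n amplitudes to describe classically, which is why
# simulating Google's 53-qubit Sycamore chip is so expensive.
print(2 ** 53)
```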

More interestingly, a sufficiently powerful quantum computer could break the public-key cryptography that secures blockchain wallets, making the whole thing irrelevant (didn’t I say earlier that Bitcoin was doomed?).

More on Quantum computing here [MIT Technology Review]. You can also take a Quantum computing class here [Qmunity].

The raw computing power highlighted above can be used to analyse one’s genome and predict one’s chances of getting conditions such as heart disease or breast cancer. If that sounds exactly like the plot of Gattaca, trust your instincts.

Regardless of the risks of genetic discrimination, DNA-based “predictions” could be the next great public health leap. For example, if women at high risk for breast cancer got more mammograms and those at low risk got fewer, those exams might catch more real cancers and set off fewer false alarms, leading to a better treatment rate and lower insurance premia.
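
The mammogram argument is really Bayes’ rule at work: the same test produces a far higher share of real cancers among its alarms when the people screened are more likely to be ill. The Python sketch below uses hypothetical numbers throughout (87% sensitivity, 90% specificity, 2% prevalence in a high genetic-risk group and 0.3% in a low-risk group), chosen only to illustrate the effect, not taken from any clinical study.

```python
from dataclasses import dataclass


@dataclass
class ScreeningOutcome:
    true_positives: float
    false_positives: float

    @property
    def ppv(self) -> float:
        """Positive predictive value: share of alarms that are real cancers."""
        return self.true_positives / (self.true_positives + self.false_positives)


def screen(n: int, prevalence: float, sensitivity: float,
           specificity: float) -> ScreeningOutcome:
    """Expected outcomes of screening n people, following Bayes' rule."""
    sick = n * prevalence
    healthy = n - sick
    return ScreeningOutcome(
        true_positives=sick * sensitivity,
        false_positives=healthy * (1 - specificity),
    )


# Hypothetical test accuracy and risk levels, for illustration only.
SENS, SPEC = 0.87, 0.90
for group, prevalence in [("high genetic risk", 0.02),
                          ("low genetic risk", 0.003)]:
    out = screen(10_000, prevalence, SENS, SPEC)
    print(f"{group}: {out.true_positives:.0f} cancers found, "
          f"{out.false_positives:.0f} false alarms, PPV {out.ppv:.1%}")
```

With these made-up figures, 10,000 mammograms find about 174 cancers in the high-risk group against about 26 in the low-risk group, for a similar number of false alarms (around 1,000), which is why shifting exams towards high-risk women could catch more cancers per false alarm.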

It could also lead to the rise of personalised medicine, though rolling it out would likely be a financial and logistical nightmare given the current political climate (at least in the US).

More on Genetic predictions here [MIT Technology Review].

Even if a Gattaca-like future doesn’t come about from genetic predictions, we might still create a similar situation through straight-up genetic engineering. CRISPR (Clustered Regularly Interspaced Short Palindromic Repeats) allows researchers to easily alter DNA sequences and modify gene functions. Its many potential applications include correcting genetic defects, treating and preventing the spread of diseases and improving crops.
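
Part of what makes CRISPR “easy” is that targeting is a matching problem. The widely used Cas9 enzyme from *Streptococcus pyogenes* cuts where a 20-letter guide sequence matches the DNA and the match is immediately followed by an “NGG” motif (the PAM, where N is any base). The Python sketch below scans a made-up sequence for such sites; it is a simplification that checks one strand only, whereas real guide-design tools also scan the reverse complement and score off-target matches. The function name is hypothetical.

```python
import re

# 20 bases (the protospacer) followed by any base plus "GG" (the PAM).
# The lookahead lets overlapping candidate sites all be reported.
PAM_REGEX = re.compile(r"(?=([ACGT]{20})([ACGT]GG))")


def find_cas9_targets(dna: str) -> list[tuple[int, str, str]]:
    """Return (start position, 20-nt protospacer, PAM) on this strand only."""
    dna = dna.upper()
    return [(m.start(), m.group(1), m.group(2)) for m in PAM_REGEX.finditer(dna)]


demo = "GATTACA" + "CGTACGTTAGCATCGATCGA" + "AGG" + "TTT"  # made-up sequence
print(find_cas9_targets(demo))
# [(7, 'CGTACGTTAGCATCGATCGA', 'AGG')]
```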

Editing germs to make new viruses or a “master race” is however a less fun prospect, should this technology get into unethical hands. Either way, I look forward to a time when every man looks like a mix of Tom Hiddleston and Idris Elba.

More on CRISPR here [Live Science].

Thankfully, going genetic is not the answer to everything. Sometimes, some good old ingenuity and robotics are enough to solve our issues.

Slowly but surely, we are seeing more and more natural, artificial, or technological alteration of the human body in order to enhance physical or mental capabilities, often in the form of bionic limbs. As we begin to better understand how the brain transmits information to the body, more and more companies will begin to see the value of improving people’s lives (for a steep fee) and descend upon this space.

It’s very likely that beyond the arms and legs augmentations that we’re already starting to see, there will be a point at which the back and the eyes are also augmented. Then, slowly but surely, augmentations will become elective, with interesting ethical implications.

More on human enhancements here [International Journal of Human-Computer Studies]

Though graphene has been over-hyped for years, we’re finally seeing something good come out of it. If you haven’t paid attention to the hype, graphene is a single layer of carbon atoms arranged in a honeycomb pattern; stack many such layers and you get graphite, the stuff in pencil leads. It is extremely strong, yet extremely thin, light and flexible (stronger than steel, thinner than paper). Oh, and it also conducts electricity really well.

The applications are numerous, especially in wearable electronics and space travel, where strength and weight are key. Nevertheless, it will take many years to reach a wide array of use cases: we’ve built the world around silicon, and it’s very hard to displace that kind of well-established, mature technology.

More on graphene here [Digital Trends]

While the vast majority of data processing for connected devices now happens in the cloud, constantly sending data back and forth can take far too long (as much as a few seconds, sometimes). 5G is a temporary answer, as mentioned above, but there might be a simpler solution: allowing objects to process data on their own, without relying on the cloud (at the “edge” of the ecosystem). This would solve a wide variety of issues in manufacturing, transport and healthcare, where split-second decisions are key to many processes. Even fashion could benefit, through self-sufficient smart wearables.
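
A quick back-of-the-envelope calculation shows why those delays matter: during the wait, the world keeps moving. This Python sketch computes how far a car travelling at 100 km/h moves before a decision comes back. The three latency values are hypothetical round numbers chosen for illustration, with the slowest one sitting within the “few seconds” worst case mentioned above.

```python
def metres_travelled(speed_kmh: float, latency_ms: float) -> float:
    """Distance covered while waiting `latency_ms` for a decision."""
    return speed_kmh / 3.6 * latency_ms / 1000  # km/h -> m/s, ms -> s


# Hypothetical latencies, for illustration only.
scenarios = {
    "slow cloud round trip": 1000,
    "typical cloud round trip": 100,
    "on-device (edge) inference": 10,
}
for name, latency in scenarios.items():
    print(f"{name} ({latency} ms): a car at 100 km/h moves "
          f"{metres_travelled(100, latency):.2f} m before reacting")
```

Going from a one-second cloud round trip (almost 28 m travelled) to a 10 ms on-device decision (under 30 cm) is the whole case for edge computing in vehicles.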

As intelligent “things” proliferate, expect a shift from stand-alone intelligent objects to swarms of collaborative intelligent things. In this model, multiple devices would pool their computing power and work together, either independently or with human input. The military is at the leading edge of this area, studying the use of drone swarms to attack or defend military targets, but there are likely hundreds of potential civilian uses.

The technology is nearly available, but as with other developments both above and below, we must first let the hardware capabilities catch up before implementing these ideas.

More on Edge computing here [The Verge]

The current main idea behind micro-chips (which are made from an array of molecular sensors on the chip surface that can analyse biological elements and chemicals) is to track biometrics in a medical context. Use cases have also emerged within the smart workspace technology ecosystem. It could however have a much wider appeal if customers decide to put their trust in it (in banking, for instance: imagine never having to bring your wallet anywhere ever again).

Unless everyone suddenly agrees to let their blood pressure be monitored daily at work, this type of tracking is likely to remain benign in the near future. One might nevertheless imagine micro-chips becoming fairly common in hospitals.

More on micro-chips here [The Guardian].

For those wanting to go even smaller than micro-chips, allow me to introduce nanorobots. Currently in R&D phases in labs throughout the world, nanorobots are essentially very, very tiny sensors with very limited processing power.

The first useful applications of these nanomachines may very well be in nanomedicine. For example, biological machines could be used to identify and destroy cancer cells or deliver drugs. Another potential application is the detection of toxic chemicals, and the measurement of their concentrations, in the environment.

More on Nanorobotics here [Nature].

Tattoos that can send signals via touch to interact with the world around us make a lot of sense:

- It’s wearable, which allows for greater freedom of movement

- It tackles the issue of waste, which is seldom discussed when imagining the future of technology

- It can be personalised, a trend towards which we’ve been moving for 15 years now.

In their current form, they are only temporary when applied to the skin. They can, however, last much longer on prosthetics, and have the benefit of being cheap compared to a lot of the hardware available out there.

More on Smart Tattoos here [Microsoft]

Do you want your great-grand-kids to know what it’s like not to despise the sun? Then forget about all the above and concentrate on Green Tech: the science of making the world liveable. Because so much is being done in this space, we will avoid the details, and refer to better sources:

The issue with most of the above is that they tend to work well in theory, but their adoption cost is incredibly high, as they often struggle to scale. As much as we’d like to see all of them being implemented yesterday, the road ahead is still long.

More on Green Tech here [CB Insights]

In a fuel cell, hydrogen combines with oxygen from the air to produce electricity, releasing only water. This isn’t new in itself: the principle was discovered in 1839. But until a few years ago, the technology was not cost-effective enough for large-scale use.
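
For the chemically curious, the reaction can be written out. The equations below assume a proton-exchange membrane (PEM) fuel cell, the type most commonly used in vehicles: hydrogen is split into protons and electrons at the anode, the protons cross the membrane, and the electrons are forced through an external circuit (that current is the electricity) before recombining with oxygen and protons at the cathode to form water.

```latex
\begin{aligned}
\text{Anode:}   &\quad 2\,\mathrm{H_2} \rightarrow 4\,\mathrm{H^+} + 4\,e^- \\
\text{Cathode:} &\quad \mathrm{O_2} + 4\,\mathrm{H^+} + 4\,e^- \rightarrow 2\,\mathrm{H_2O} \\
\text{Overall:} &\quad 2\,\mathrm{H_2} + \mathrm{O_2} \rightarrow 2\,\mathrm{H_2O}
\end{aligned}
```

Adding the two half-reactions cancels the protons and electrons, leaving water as the only product, which is where the “releasing only water” claim comes from.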

In fact, there are still some issues with the technology, as it’s easy to store a small amount of energy (hence its use in the space exploration industry), but incredibly hard to do at a larger scale.

See you in 2030 to see if we’ve solved these issues.

More on Hydrogen fuel cells here [FCHEA].

I’ve tried it: lab-made meat smells, looks and tastes just like meat (beyond the odd uncanny-valley-like taste). The only things that change: healthier food, no antibiotics, no growth hormones, no emission of greenhouse gases and no animal suffering.

Above all, this is a gigantic market that whets the appetites of industrialists. After targeting vegetarians, they’ve realised that it’s much easier and more rewarding to market these products to flexitarians (back in my day we called them omnivores).

By 2030, 10% of the meat eaten in the world will no longer come from an animal (allegedly). The principle is there, the technology works… all that’s left to see is whether it will be widely adopted.

More on Meatless meat here [Vox].

Technology has a tendency to hold a dark mirror to society, reflecting both what’s great and evil about its makers. It’s important to remember that technology is often value-neutral: it’s what we do with it day in, day out that defines whether or not we are dealing with the “next big thing”.

Good luck out there.

Source: https://www.thepourquoipas.com/post/the-next-big-thing-in-technology-20-inventions 23 07 20

Tags: AI Cloud Services, Autonomous Vehicles, Beamforming, chatbots, Connected homes, CRISPR, Cryptocurrency, Data-as-a-service, Femtocells, Full Duplex, Genetic predictions, Graphene, Hydrogen fuel cells, Low-earth orbit satellite systems, Massive MIMO, Mega-constellations of satellites, Microcells, Millimeter Waves, Nanorobotics, PaaS, Picocells, quantum computing, Smart Homes, SpaceX