Being ‘locked in’ to a proprietary RAN has put mobile network operators (MNOs) at the mercy of network equipment manufacturers.

Throughout most of cellular communications history, radio access networks (RANs) have been dominated by proprietary network equipment from a single vendor or group of vendors. While closed, single-vendor RANs may have offered some advantages as the wireless communications industry evolved, that time has long since passed. Being “locked in” to a proprietary RAN has put mobile network operators (MNOs) at the mercy of network equipment manufacturers and has become a bottleneck to innovation.

Eventually, the rise of software-defined networking (SDN) and network function virtualization (NFV) brought to the network core greater agility and improved cost efficiencies. But the RAN, meanwhile, remained a single-vendor system.

In recent years, global MNOs have pushed the adoption of an open RAN (also known as O-RAN) architecture for 5G. An open RAN architecture offers many benefits, but it also imposes additional technical complexity and testing requirements.

This article examines the advantages of implementing an open RAN architecture for 5G. It also discusses the principles of the open RAN movement, the structural components of an open RAN architecture, and the importance of conducting both conformance and interoperability testing for open RAN components.

The case for open RAN

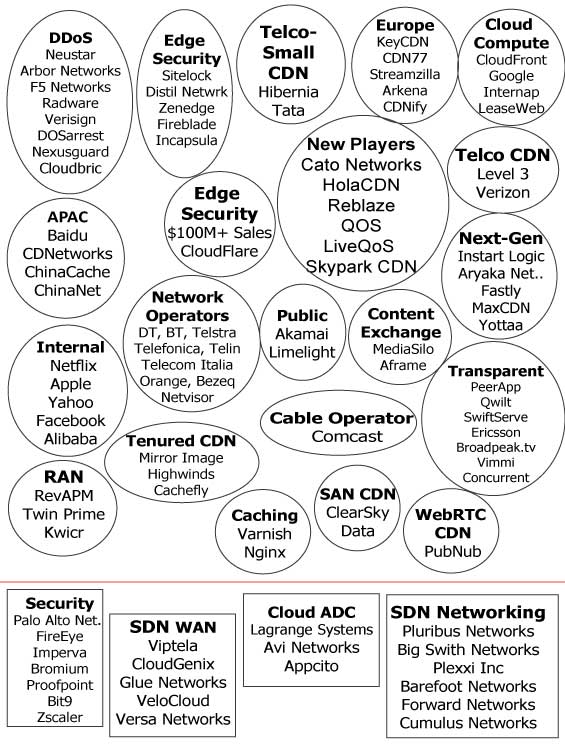

The momentum of open RAN has been so forceful that it can be challenging to track all the players, much less who is doing what.

The O-RAN Alliance, an organization made up of more than 25 MNOs and nearly 200 contributing organizations from across the wireless landscape, has been developing open, intelligent, virtualized, and interoperable RAN specifications since its founding in 2018. The Telecom Infra Project (TIP), a separate coalition with hundreds of members from across the infrastructure equipment landscape, maintains an OpenRAN project group to define and build 2G, 3G, and 4G RAN solutions based on general-purpose, vendor-neutral hardware and software-defined technology. Earlier this year, TIP also launched the Open RAN Policy Coalition, a separate group under the TIP umbrella focused on promoting policies to accelerate adoption and spur innovation of open RAN technology.

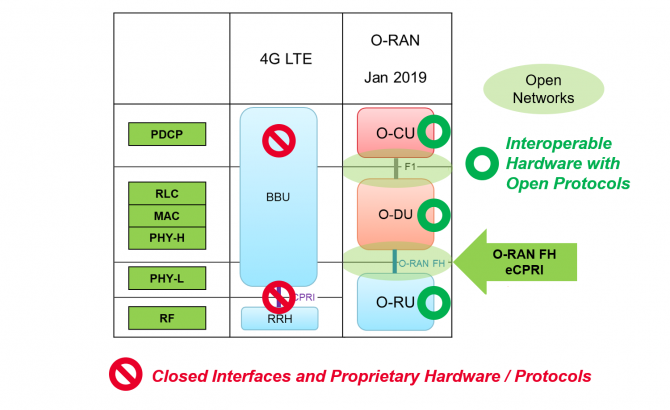

Figure 1. The major components of the 4G LTE RAN versus the O-RAN for 5G. Source: Keysight Technologies

In February, the O-RAN Alliance and TIP announced a cooperative agreement to align on the development of interoperable open RAN technology, including the sharing of information, referencing specifications, and conducting joint testing and integration efforts.

The O-RAN Alliance has defined a 5G RAN architecture that breaks the RAN down into several sections. Open, interoperable standards define the interfaces between these sections, enabling mobile network operators, for the first time, to mix and match RAN components from several different vendors. The O-RAN Alliance has already created more than 30 specifications, many of them defining interfaces.

Interoperable interfaces are a core principle of open RAN. They allow smaller vendors to quickly introduce their own services. They also enable MNOs to adopt multi-vendor deployments and to customize their networks to suit their own needs. MNOs will be free to choose the products and technologies that they want to use in their networks, regardless of the vendor. As a result, MNOs will have the opportunity to build more robust and cost-effective networks that draw on innovation from multiple sources.

Enabling smaller vendors to introduce services quickly will also improve cost efficiency by creating a more competitive supplier ecosystem for MNOs, reducing the cost of 5G network deployments. Operators locked into a proprietary RAN have limited negotiating power. Open RANs level the playing field, stimulating marketplace competition and bringing costs down.

Innovation is another significant benefit of open RAN. The move to open interfaces spurs innovation, letting smaller, more nimble competitors develop and deploy breakthrough technology. Not only does this create the potential for more innovation, it also increases the speed of breakthrough technology development, since smaller companies tend to move faster than larger ones.

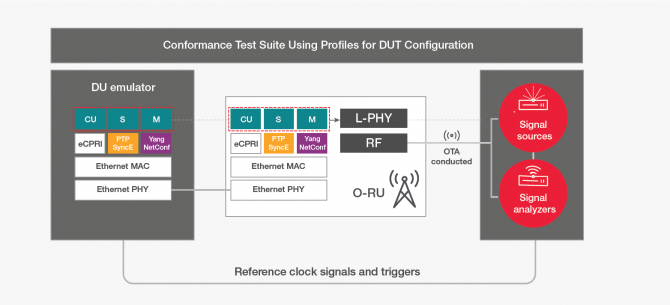

Figure 2. Test equipment radio in the O-RAN conformance specification.

Other benefits of open RAN from an operator perspective may be less obvious, but no less significant. One notable example is in the fronthaul — the transport network of a Cloud-RAN (C-RAN) architecture that links the remote radio heads (RRHs) at the cell sites with the baseband units (BBUs) aggregated as centralized baseband controllers some distance (potentially several miles) away. In the O-RAN Alliance reference architecture, the IEEE Radio over Ethernet (RoE) and the open enhanced CPRI (eCPRI) protocols can be used on top of the O-RAN fronthaul specification interface in place of the bandwidth-intensive and proprietary common public radio interface (CPRI). Using Ethernet enables operators to employ virtualization, with fronthaul traffic switching between physical nodes using off-the-shelf networking equipment. Virtualized network elements allow more customization.
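To make the Ethernet-based fronthaul a little more concrete, the sketch below packs and parses the four-byte common header that precedes every eCPRI message. The field layout (a 4-bit protocol revision, 3 reserved bits, a 1-bit concatenation flag, a 1-byte message type, and a 2-byte payload size) follows the published eCPRI specification; the class name, function names, and sample values are illustrative assumptions, and nothing here is taken from the O-RAN fronthaul specification itself.

```python
import struct
from typing import NamedTuple

ECPRI_ETHERTYPE = 0xAEFE  # EtherType used when eCPRI is carried directly over Ethernet


class EcpriCommonHeader(NamedTuple):
    """Illustrative container for the eCPRI common header fields."""
    revision: int        # 4-bit protocol revision
    concatenated: bool   # C bit: another eCPRI message follows in the same frame
    message_type: int    # e.g. 0 = IQ data, 2 = real-time control data
    payload_size: int    # payload length in bytes


def pack_header(header: EcpriCommonHeader) -> bytes:
    """Serialize the header to 4 bytes in network byte order."""
    first_byte = ((header.revision & 0x0F) << 4) | int(header.concatenated)
    return struct.pack("!BBH", first_byte, header.message_type, header.payload_size)


def parse_header(data: bytes) -> EcpriCommonHeader:
    """Parse the first 4 bytes of an eCPRI message."""
    if len(data) < 4:
        raise ValueError("eCPRI common header needs 4 bytes")
    first_byte, message_type, payload_size = struct.unpack("!BBH", data[:4])
    return EcpriCommonHeader(
        revision=first_byte >> 4,
        concatenated=bool(first_byte & 0x01),
        message_type=message_type,
        payload_size=payload_size,
    )


example = EcpriCommonHeader(revision=1, concatenated=False, message_type=0, payload_size=1024)
raw = pack_header(example)
```

Because the header is plain bytes inside an ordinary Ethernet frame, off-the-shelf switches can forward it without any radio-specific hardware, which is the property the paragraph above relies on.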

Figure 1 shows the layers of the radio protocol stack and the major architectural components of a 4G LTE RAN and a 5G open RAN. Because LTE required less total bandwidth and involved fewer antennas, the CPRI data rate between the BBU and RRH was sufficient. With 5G, higher data rates and the increase in the number of antennas due to massive multiple-input / multiple-output (MIMO) mean passing a lot more data back and forth over the interface. Also, note that the major components of the LTE RAN, the BBU and the RRH, are replaced in the O-RAN architecture by the O-RAN central unit (O-CU), the O-RAN distributed unit (O-DU), and the O-RAN radio unit (O-RU), all of which are discussed in greater detail below.
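A rough back-of-the-envelope calculation illustrates why a CPRI-style link that carries raw I/Q samples scales poorly with antenna count. The sketch below is an approximation under stated assumptions, not a figure from the O-RAN specifications: 15-bit I and Q samples, one control word per 15 data words, 8b/10b line coding, a 30.72 Msps sample rate for a 20 MHz LTE carrier, and a 122.88 Msps sample rate for a 100 MHz 5G carrier. The antenna counts (2 and 64) are likewise chosen only for illustration.

```python
def cpri_rate_gbps(sample_rate_msps: float, antennas: int,
                   bits_per_sample: int = 15) -> float:
    """Approximate line rate in Gbit/s for uncompressed I/Q samples over CPRI."""
    iq_bits = 2 * bits_per_sample      # one I sample plus one Q sample
    control_overhead = 16 / 15         # assumed: 1 control word per 15 data words
    line_coding = 10 / 8               # assumed: 8b/10b line coding
    mbps = sample_rate_msps * iq_bits * control_overhead * line_coding * antennas
    return mbps / 1000


lte_rate = cpri_rate_gbps(30.72, antennas=2)     # 20 MHz LTE carrier, 2 antennas
nr_rate = cpri_rate_gbps(122.88, antennas=64)    # 100 MHz 5G carrier, 64 antennas
print(f"LTE: {lte_rate:.2f} Gbit/s, 5G massive MIMO: {nr_rate:.1f} Gbit/s")
```

Under these assumptions the LTE case needs roughly 2.5 Gbit/s, while the massive MIMO case needs more than 300 Gbit/s, a jump of over two orders of magnitude. That jump is what motivates moving part of the physical layer into the radio unit.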

The principles and major components of an open RAN architecture

As stated earlier (and implied by the name), one core principle of the open RAN architecture is openness, specifically in the form of open, interoperable interfaces that enable MNOs to build RANs that feature technology from multiple vendors. The O-RAN Alliance is also committed to incorporating open source technologies where appropriate and maximizing the use of commercial off-the-shelf hardware and merchant silicon while minimizing the use of proprietary hardware.

A second core principle of open RAN, as described by the O-RAN Alliance, is the incorporation of greater intelligence. The growing complexity of networks necessitates the incorporation of artificial intelligence (AI) and deep learning to create self-driving networks. By embedding AI in the RAN architecture, MNOs can increasingly automate network functions and minimize operational costs. AI also helps MNOs increase the efficiency of networks through dynamic resource allocation, traffic steering, and virtualization.

The three major components of the O-RAN for 5G (and retroactively for LTE) are the O-CU, O-DU, and the O-RU.

- The O-CU is responsible for the packet data convergence protocol (PDCP) layer of the protocol stack.

- The O-DU is responsible for all baseband processing, scheduling, radio link control (RLC), medium access control (MAC), and the upper part of the physical layer (PHY).

- The O-RU is the component responsible for the lower part of the physical layer processing, including the analog components of the radio transmitter and receiver.

Two of these components can be virtualized. The O-CU is the component of the RAN that is always centralized and virtualized. The O-DU is typically a virtualized component; however, virtualization of the O-DU requires some hardware acceleration assistance in the form of FPGAs or GPUs.
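The division of responsibilities described above can be summarized as a small lookup structure. This is only a sketch of the split as this article describes it; the class name, field names, and helper function are hypothetical, and the function labels are shorthand for the protocol layers named in the list.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class RanComponent:
    name: str
    functions: Tuple[str, ...]   # protocol functions handled by this component
    virtualized: bool            # whether the article describes it as virtualized
    note: str = ""


O_RAN_COMPONENTS = (
    RanComponent("O-CU", ("PDCP",), True, "always centralized and virtualized"),
    RanComponent("O-DU", ("scheduling", "RLC", "MAC", "upper PHY"), True,
                 "needs hardware acceleration such as FPGAs or GPUs"),
    RanComponent("O-RU", ("lower PHY", "analog RF"), False),
)


def component_for(function: str) -> str:
    """Return the name of the component that handles a given protocol function."""
    for component in O_RAN_COMPONENTS:
        if function in component.functions:
            return component.name
    raise KeyError(f"no component handles {function!r}")


virtualized_names = [c.name for c in O_RAN_COMPONENTS if c.virtualized]
```

A table like this is also a convenient starting point for a test plan, since each boundary between two components corresponds to an interface that has to be checked.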

At this point, the prospects for virtualization of the O-RU are remote. But one O-RAN Alliance working group is planning a white box radio implementation using off-the-shelf components. The white box enables the construction of an O-RU without proprietary technology or components.

Interoperability testing required

While the move to open RAN offers numerous benefits for MNOs, making it work means adopting rigorous testing requirements. A few years ago, it was sufficient to simply test an Evolved Node B (eNB) as a complete unit in accordance with 3GPP requirements. But the introduction of open RAN and distributed RANs changes the equation: each component of the RAN must be tested in isolation for conformance to the standards, and combinations of components must be tested for interoperability.

Why test for both conformance and interoperability? In the O-RAN era, it is essential to determine both that the components conform to the appropriate standards in isolation and that they work together as a unit. Skipping the conformance testing step and performing only interoperability testing would be like an aircraft manufacturer building a plane from untested parts and then only checking to see if it flies.

Conformance testing usually comes first to ensure that all the components meet the interface specifications. Testing each component in isolation calls for test equipment that emulates the surrounding network to ensure that the component conforms to all capabilities of the interface protocols.

Conformance testing of components in isolation offers several benefits. For one thing, it enables negative testing, which checks the component’s response to invalid inputs and is not possible in interoperability testing. In conformance testing, the test equipment can also stress the components to the limits of their stated capabilities, another capability not available with interoperability testing alone. Finally, conformance testing enables test engineers to exercise protocol features that they have no control over during interoperability testing.
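Negative testing can be illustrated with a toy example. In the sketch below, the test harness plays the role of the emulated peer and deliberately sends malformed requests, something a real, well-behaved peer would never do during interoperability testing. Everything here is hypothetical: `toy_dut` is a stand-in for a device under test, and the `bandwidth_mhz` field and its 5 to 100 range are invented for illustration rather than taken from any O-RAN interface.

```python
from typing import Callable, Dict, List


def toy_dut(request: Dict) -> str:
    """Hypothetical device under test: accepts only a valid integer bandwidth."""
    bandwidth = request.get("bandwidth_mhz")
    if not isinstance(bandwidth, int) or not 5 <= bandwidth <= 100:
        return "REJECT"
    return "ACCEPT"


def run_negative_tests(dut: Callable[[Dict], str],
                       invalid_requests: List[Dict]) -> List[Dict]:
    """Return every invalid request that the DUT wrongly failed to reject."""
    return [request for request in invalid_requests if dut(request) != "REJECT"]


INVALID_REQUESTS = [
    {},                           # required field missing
    {"bandwidth_mhz": -20},       # value out of range
    {"bandwidth_mhz": "wide"},    # wrong type
]

failures = run_negative_tests(toy_dut, INVALID_REQUESTS)
print("negative test failures:", failures)
```

An empty failure list means the component rejected every invalid input. A component that silently accepted any of them would pass a happy-path interoperability run and still be non-conformant.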

The conformance test specification developed by the O-RAN Alliance open fronthaul interfaces working group features several sections with many test categories to test nearly all 5G O-RAN elements.

Interoperability testing of a 5G O-RAN is like interoperability testing of a 4G RAN. Just as 4G interoperability testing amounts to testing the components of an eNB as a unit, the same procedures apply to testing a gNodeB (gNB) in 5G interoperability testing. The change in testing methodology is minimal.

Conformance testing, however, is significantly different for 5G O-RAN and requires a broader set of equipment. For example, the conformance test setup for an O-RU includes a vector signal analyzer, a signal source, and an O-DU emulator, plus a test sequencer for automating the hundreds of tests included in a conformance test suite. Figure 2 shows the test equipment radio in the O-RAN conformance test specification.
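The role of the test sequencer can be sketched in a few lines: it runs each test case in order, records a verdict, and keeps going when a single step errors out. The test names, the placeholder reading, and the limit below are all hypothetical; in a real setup each check would drive the signal source, the O-DU emulator, or the vector signal analyzer rather than return a fixed value.

```python
from typing import Callable, Dict, List, Tuple

TestCase = Tuple[str, Callable[[], bool]]


def run_sequence(cases: List[TestCase]) -> Dict[str, str]:
    """Run every test case in order and record a verdict for each one."""
    verdicts: Dict[str, str] = {}
    for name, check in cases:
        try:
            verdicts[name] = "PASS" if check() else "FAIL"
        except Exception as exc:
            # An instrument or emulator error should not abort the whole run.
            verdicts[name] = f"ERROR: {exc}"
    return verdicts


def fake_analyzer_reading() -> float:
    """Placeholder for a measurement returned by a signal analyzer."""
    return 2.1


def unavailable_instrument() -> bool:
    """Placeholder for a step whose instrument cannot be reached."""
    raise RuntimeError("instrument not connected")


HYPOTHETICAL_LIMIT = 3.0  # invented pass/fail threshold for the placeholder reading

CASES: List[TestCase] = [
    ("emulator link established", lambda: True),
    ("reading within limit", lambda: fake_analyzer_reading() <= HYPOTHETICAL_LIMIT),
    ("reading within tight limit", lambda: fake_analyzer_reading() <= 1.0),
    ("spectrum capture", unavailable_instrument),
]

results = run_sequence(CASES)
for test_name, verdict in results.items():
    print(f"{test_name}: {verdict}")
```

With hundreds of cases in a conformance suite, this kind of uniform verdict collection is what makes the results repeatable and comparable from one vendor's component to the next.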

Conclusion: Tools and methodologies matter

As we have seen, the open RAN movement has considerable momentum and is a reality in the era of 5G. The adoption of open RAN architecture brings significant benefits in terms of greater efficiency, lower costs, and increased innovation. However, testing and validating a multi-vendor open RAN is no small endeavor. Simply cobbling together a few instruments and running a few tests is not an adequate solution. Testing each section individually to the maximum of its capabilities is critical.

Choosing and implementing the right equipment for your network requires proper testing with the right tools, methodologies, and strategies.

Source: https://www.ept.ca/features/the-importance-of-interoperability-testing-for-o-ran-validation/ 06 04 21