As 5G continues to roll out, work is already well underway on its successor. 6G wireless technology brings with it the promise of a better future. Among other goals, 6G technology aims to merge the human, physical, and digital worlds. The hope is that, by doing so, 6G can significantly help the world achieve the UN Sustainable Development Goals.

This article answers some of the most common questions surrounding 6G and provides more insight into the vision for 6G and how it will achieve these critical goals.

1. What is 6G?

In a nutshell, 6G is the sixth generation of the wireless communications standard for cellular networks and will succeed today’s 5G (fifth generation). The research community does not expect 6G technology to replace previous generations, though. Instead, 6G and earlier generations are expected to work together to provide solutions that enhance our lives.

While 5G will act as a building block for some aspects of 6G, other aspects must be entirely new if 6G is to meet the technical demands of revolutionizing the way we connect to the world.

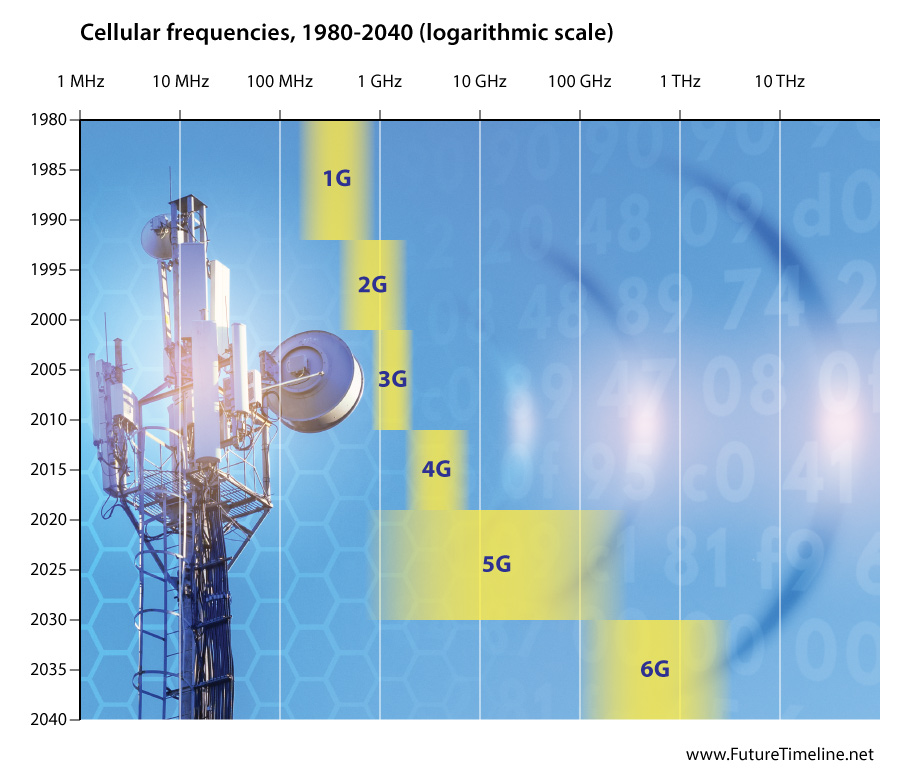

The first area of improvement is speed. In theory, 5G can achieve a peak data rate of 20 Gbps, although the highest speeds recorded in tests so far are around 8 Gbps. In 6G, as we move to higher frequencies (above 100 GHz), the target peak data rate is 1,000 Gbps (1 Tbps), enabling use cases such as volumetric video and enhanced virtual reality experiences.

In fact, we have already demonstrated an over-the-air transmission at 310 GHz with speeds topping 150 Gbps.
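
One reason higher carrier frequencies can support these rates is that they offer much wider contiguous bandwidths. The Shannon-Hartley limit, C = B·log2(1 + SNR), makes the relationship concrete. The sketch below is illustrative only: the bandwidths and the 20 dB SNR are hypothetical values, not figures from any 5G or 6G specification, and the function name is our own.

```python
import math


def shannon_capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Shannon-Hartley upper bound on the error-free bit rate of one AWGN channel."""
    snr_linear = 10 ** (snr_db / 10)  # convert dB to a linear power ratio
    return bandwidth_hz * math.log2(1 + snr_linear)


# Hypothetical bandwidths and SNR, chosen only to illustrate the scaling;
# they are not values from any 5G or 6G specification.
SNR_DB = 20.0
for label, bandwidth_hz in [("400 MHz channel", 400e6), ("10 GHz channel", 10e9)]:
    capacity = shannon_capacity_bps(bandwidth_hz, SNR_DB)
    print(f"{label}: {capacity / 1e9:.1f} Gbps upper bound (single stream)")
```

Capacity grows linearly with bandwidth but only logarithmically with SNR, so a 25x wider channel raises the bound 25x, while a 25x SNR improvement adds only a few bit/s/Hz. Real peak rates also depend on MIMO layers, coding, and protocol overhead, which this sketch ignores.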

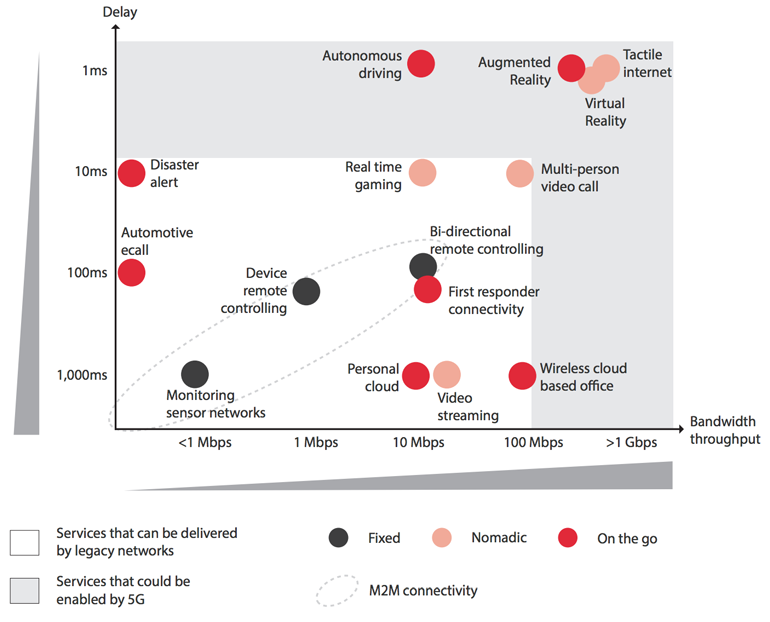

In addition to speed, 6G technology will offer another crucial advantage: extremely low latency, that is, minimal delay in communications. This will play a pivotal role in unleashing the internet of things (IoT) and industrial applications.

6G technology will enable tomorrow’s IoT through enhanced connectivity. Today’s 5G can support one million simultaneously connected devices per square kilometer (about 0.39 square miles), and 6G aims to raise that figure to 10 million.
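
These densities are easier to picture in other units. The conversion below relies only on the exact definition of the international mile (1.609344 km); the 1 million and 10 million figures come from the paragraph above, and the helper names are hypothetical.

```python
# 1 international mile = 1.609344 km exactly, so 1 sq mi = 2.589988110336 km^2.
KM2_PER_SQ_MILE = 1.609344 ** 2
M2_PER_KM2 = 1_000_000


def per_sq_mile(devices_per_km2: float) -> float:
    """Convert a connection density from devices/km^2 to devices/sq mi."""
    return devices_per_km2 * KM2_PER_SQ_MILE


def per_m2(devices_per_km2: float) -> float:
    """Convert a connection density from devices/km^2 to devices/m^2."""
    return devices_per_km2 / M2_PER_KM2


for generation, density in [("5G", 1_000_000), ("6G target", 10_000_000)]:
    print(f"{generation}: {per_sq_mile(density) / 1e6:.2f} million devices/sq mi, "
          f"{per_m2(density):g} devices/m^2")
```

Put another way, the 6G target works out to 10 connected devices on every square meter of the coverage area.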

But 6G will be much more than just faster data rates and lower latency. Below we discuss some of the new technologies that will shape the next generation of wireless communications.

2. Who will use 6G technology and what are the use cases?

We began to see the shift to more machine-to-machine communication in 5G, and 6G looks to take this to the next level. While people will be end users for 6G, so will more and more of our devices. This shift will affect daily life as well as businesses and entire industries in a transformational way.

Beyond faster browsing for the end user, we can expect immersive and haptic experiences to enhance human communication. Ericsson, for example, foresees the emergence of the “internet of senses,” the ability to experience sensations such as a scent or a flavor digitally. According to a Next Generation Mobile Networks Alliance (NGMN) report, holographic telepresence and volumetric video (think of it as video in 3D) will also be use cases. Together, these could make virtual, mixed, and augmented reality part of our everyday lives.

However, 6G technology will likely have a bigger impact on business and industry – benefiting us, the end users, as a result. With the ability to handle millions of connections simultaneously, machines will have the power to perform tasks they cannot do today.

The NGMN report anticipates that 6G networks will enable hyper-accurate localization and tracking. This could bring advancements like allowing drones and robots to deliver goods and manage manufacturing plants, improving digital health care and remote health monitoring, and enhancing the use of digital twins.

Digital twin development will be an interesting use case to keep an eye on. Digital twins are an important tool that certain industries can use to find the best way to fix a problem in a plant or a specific machine, but that is just the tip of the iceberg. Imagine creating a digital twin of an entire city and running tests on the replica to assess which solutions would work best for problems like traffic management. In Singapore, the government is already building a 3D city model that will enable a future smart city.

3. What do we need to achieve 6G?

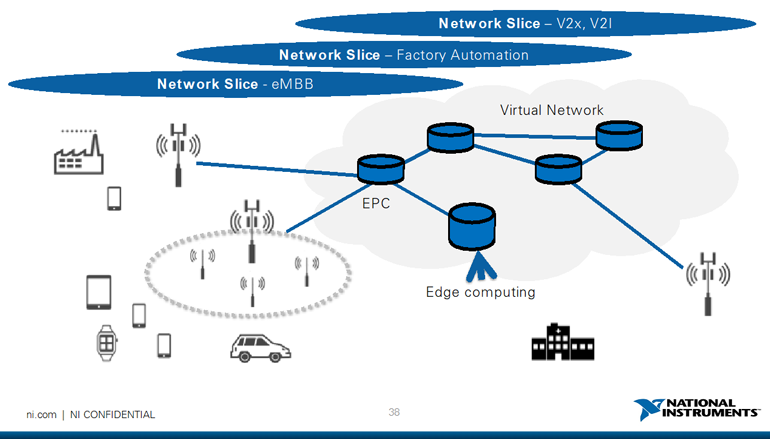

New horizons demand new technology. 6G will certainly benefit from 5G advances in areas such as edge computing, artificial intelligence (AI), machine learning (ML), and network slicing. At the same time, new technical requirements call for changes.

The most notable requirement is learning how to operate at sub-terahertz frequencies. While 5G needs to operate in the millimeter wave (mmWave) bands of 24.25 GHz to 52.6 GHz to achieve its full potential, the next generation of mobile connectivity will likely move to frequencies above 100 GHz, in the range called sub-terahertz, and possibly as high as true terahertz.

Why does this matter? Because as frequency increases, radio waves behave differently. Before 5G, cellular communications used only spectrum below 6 GHz, and these signals can travel up to 10 miles. In the mmWave band, range drops dramatically to around 1,000 feet. Sub-THz signals like those proposed for 6G travel shorter distances still: think tens to hundreds of feet, not thousands.
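
A simple way to see part of this effect is the free-space path loss (FSPL) between two isotropic antennas, FSPL = 20·log10(4πdf/c), where d is distance, f is frequency, and c is the speed of light. The sketch below models free-space spreading only; it ignores atmospheric absorption, rain, and blockage, which weigh more heavily at sub-THz frequencies. The frequencies and the 100 m distance are illustrative choices.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss between two isotropic antennas (Friis), in dB."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / C)


# Example carrier frequencies spanning sub-6 GHz, mmWave, and sub-THz,
# picked for illustration; distance fixed at 100 m.
for freq_ghz in (3.5, 28.0, 140.0, 300.0):
    loss = fspl_db(100.0, freq_ghz * 1e9)
    print(f"{freq_ghz:6.1f} GHz: {loss:5.1f} dB over 100 m")
```

At a fixed distance, every 10x increase in frequency adds 20 dB of loss between isotropic antennas. Much of that loss reflects the shrinking effective aperture of an isotropic antenna at shorter wavelengths, which is why high-gain antenna arrays and beamforming can recover a large share of it.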

That said, new types of antennas can help maximize signal propagation and range. Antenna size is proportional to signal wavelength, so as the frequency rises and the wavelength shrinks, antennas become small enough to deploy in large numbers. In addition, this equipment uses a technique known as beamforming, which directs the signal toward a specific receiver instead of radiating it in all directions like the omnidirectional antennas commonly used before LTE.
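
The link between wavelength, array size, and beamforming can be sketched numerically. This is an idealized model under stated assumptions: isotropic elements, half-wavelength spacing (a common design convention), no mutual coupling, perfect phase control, and a hypothetical 5 cm x 5 cm panel. The function names are illustrative, not from any particular tool.

```python
import cmath
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def wavelength_m(freq_hz: float) -> float:
    """Free-space wavelength: lambda = c / f."""
    return C / freq_hz


def elements_in_square_aperture(side_m: float, freq_hz: float) -> int:
    """Idealized count of elements that fit in a square aperture at half-wavelength spacing."""
    spacing_m = wavelength_m(freq_hz) / 2
    per_side = math.floor(side_m / spacing_m)
    return per_side * per_side


def ula_power_gain(n: int, steer_deg: float, look_deg: float) -> float:
    """Power gain, relative to one element with the same total transmit power, of an
    n-element uniform linear array with half-wavelength spacing, phase-steered toward
    steer_deg and evaluated toward look_deg. Equals n when look_deg == steer_deg."""
    delta = math.sin(math.radians(look_deg)) - math.sin(math.radians(steer_deg))
    # With d = lambda/2, the per-element phase step 2*pi*d*sin(theta)/lambda is pi*sin(theta).
    total = sum(cmath.exp(1j * math.pi * k * delta) for k in range(n))
    return abs(total) ** 2 / n


# Hypothetical 5 cm x 5 cm panel at three illustrative carrier frequencies.
for freq_ghz in (3.5, 28.0, 140.0):
    n = elements_in_square_aperture(0.05, freq_ghz * 1e9)
    print(f"{freq_ghz:6.1f} GHz: {n} elements, ideal array gain {10 * math.log10(n):.1f} dB")
```

The same physical panel holds 81 elements at 28 GHz but 2,116 at 140 GHz, and ideal coherent gain grows with element count. Steering also concentrates energy: `ula_power_gain(16, 30, 30)` returns 16, while the gain toward -30 degrees is essentially zero.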

Another area of interest is designing 6G networks for AI and ML. 5G networks are starting to look at adding AI and ML to existing networks, but with 6G we have the opportunity to build networks from the ground up that are designed to work natively with these technologies.

According to an International Telecommunication Union (ITU) report, the world will generate over 5,000 exabytes of data per month by 2030, or 5 billion terabytes a month. With so many people and devices connected, we will have to rely on AI and ML for tasks such as managing data traffic and enabling smart industrial machines to make real-time decisions and use resources efficiently.
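
The unit conversion, and what that volume implies as a continuous data rate, can be checked with a few lines of arithmetic. Two assumptions are labeled in the code: decimal (SI) prefixes and a 30-day month.

```python
# Decimal (SI) prefixes assumed: 1 EB = 10**18 bytes, 1 TB = 10**12 bytes.
EXABYTE = 10**18
TERABYTE = 10**12
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumes a 30-day month

monthly_bytes = 5_000 * EXABYTE  # the ITU projection quoted in the text
monthly_terabytes = monthly_bytes // TERABYTE
average_bits_per_second = monthly_bytes * 8 / SECONDS_PER_MONTH

print(f"{monthly_terabytes:,} TB per month")
print(f"{average_bits_per_second / 1e15:.1f} Pbit/s average, sustained around the clock")
```

Spread evenly over a month, 5,000 exabytes corresponds to roughly 15 petabits per second of traffic, every second, which gives a sense of why automated traffic management matters.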

Security is another challenge 6G aims to tackle: ensuring that data is safe and that only authorized people can access it, and enabling systems to anticipate complex attacks automatically.

One last technical demand is virtualization. As 5G evolves, networks will increasingly move into virtualized environments. Open RAN (O-RAN) architectures are already moving more processing and functionality into the cloud, and solutions like edge computing will become more and more common.

4. Will 6G technology be sustainable?

Sustainability is at the core of every conversation in the telecommunications sector today. As we advance 5G and come closer to 6G, humans and machines will consume ever-increasing amounts of data. To give you an idea of our carbon footprint in the digital world, one simple email is responsible for 4 grams of carbon dioxide in the atmosphere.

However, 6G technology is expected to help humans improve sustainability in a wide array of applications. One example is optimizing the use of natural resources on farms. Using real-time data, 6G will also enable smart vehicle routing, which will cut carbon emissions, and better energy distribution, which will increase efficiency.

Also, researchers are putting sustainability at the center of their 6G projects. Components like semiconductors using new materials should decrease power consumption. Ultimately, we expect the next generation of mobile connectivity to help achieve the United Nations’ Sustainable Development Goals.

5. When will 6G be available?

The industry consensus is that the first 3rd Generation Partnership Project (3GPP) standards release to include 6G will be completed in 2030. Early versions of 6G technologies could be demonstrated in trials as early as 2028, repeating the 10-year cycle we saw in previous generations. That is the vision published by the Next G Alliance, a North American initiative to foster 6G development in the United States and Canada, of which Keysight is a founding member.

Before launching the next generation of mobile connectivity into the market, international bodies discuss technical specifications to allow for interoperability. This means, for example, making sure that your phone will work everywhere in the world.

The ITU and the 3GPP are among the most well-known standardization bodies and hold working groups to assess research on 6G globally. Federal agencies also play a significant role, regulating and granting spectrum for research and deployment.

Amid all this, technology development is another aspect to keep in mind. Many 6G capabilities demand new solutions that often use nontraditional materials and approaches. The process of getting these solutions in place will take time.

The good news? The telecommunications sector is making fast progress toward the next G.

Here at Keysight, for instance, we are leveraging our proven track record of collaboration in 5G and Open RAN to pioneer the solutions needed to lay the foundation for 6G. We partner with market leaders to advance testing and measurement for emerging 6G technologies. Every week brings news of a company or university making a groundbreaking discovery.

The most exciting thing is that we get an inch closer to 6G every day. Tomorrow’s internet is being built today. Join us in this journey; it is just the beginning.

SOURCE: Keysight Technologies – https://www.accesswire.com/717630/Top-Five-Questions-About-6G-Technology – 28 09 22

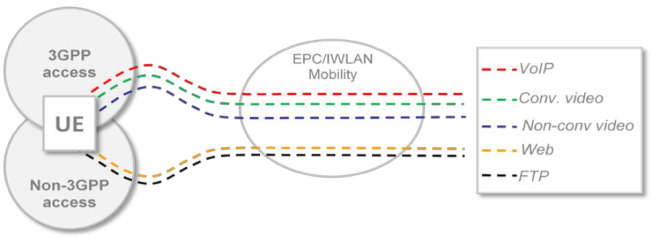

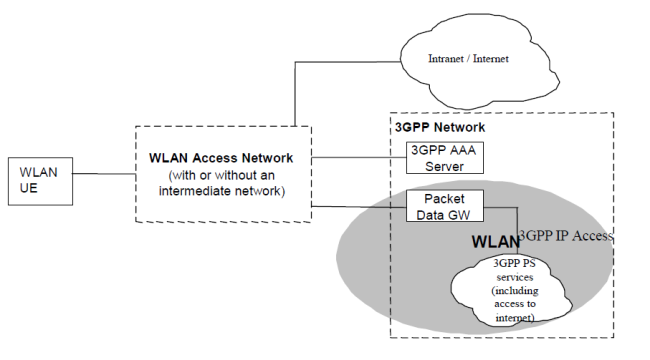

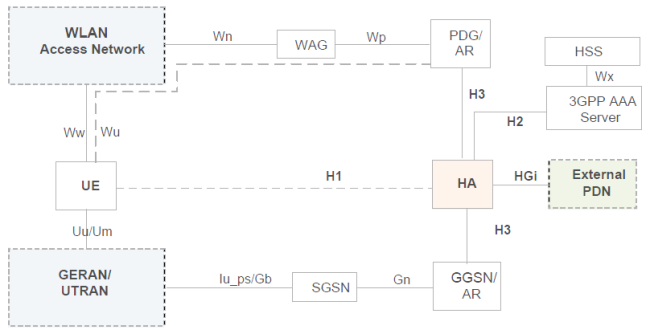

Figure 2: 3GPP release 8 WLAN Seamless Mobility