Introduction to Massive MIMO

Exponential growth in the number of mobile devices and the amount of wireless data they consume is driving researchers to investigate new technologies and approaches to address the mounting demand. The next generation of wireless data networks, called the fifth generation or 5G, must address not only capacity constraints but also existing challenges—such as network reliability, coverage, energy efficiency, and latency—with current communication systems. Massive MIMO, a candidate for 5G technology, promises significant gains in wireless data rates and link reliability by using large numbers of antennas (more than 64) at the base transceiver station (BTS). This approach radically departs from the BTS architecture of current standards, which uses up to eight antennas in a sectorized topology. With hundreds of antenna elements, massive MIMO reduces the radiated power by focusing the energy to targeted mobile users using precoding techniques. By directing the wireless energy to specific users, radiated power is reduced and, at the same time, interference to other users is decreased. This is particularly attractive in today’s interference-limited cellular networks. If the promise of massive MIMO holds true, 5G networks of the future will be faster and accommodate more users with better reliability and increased energy efficiency.

With so many antenna elements, massive MIMO has several system challenges not encountered in today’s networks. For example, today’s advanced data networks based on LTE or LTE-Advanced require pilot overhead proportional to the number of antennas. Massive MIMO manages overhead for a large number of antennas using time division duplexing (TDD) between uplink and downlink assuming channel reciprocity. Channel reciprocity allows channel state information obtained from uplink pilots to be used in the downlink precoder. Additional challenges in realizing massive MIMO include scaling data buses and interfaces by an order of magnitude or more and distributed synchronization amongst a large number of independent RF transceivers.
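The pilot-overhead argument above can be made concrete with a small calculation. This is a minimal sketch, assuming one orthogonal pilot resource per transmitting antenna and a hypothetical coherence interval of 200 symbols; the function names and the numbers in the loop are illustrative and do not come from the application framework.

```python
def downlink_training_pilots(num_bts_antennas: int) -> int:
    """Downlink training: one orthogonal pilot resource per BTS antenna."""
    return num_bts_antennas


def uplink_training_pilots(num_users: int) -> int:
    """Uplink training with reciprocity: one pilot resource per single-antenna UE."""
    return num_users


def overhead_fraction(pilot_symbols: int, coherence_symbols: int) -> float:
    """Fraction of one coherence interval spent on pilots, capped at 1.0."""
    return min(1.0, pilot_symbols / coherence_symbols)


COHERENCE_SYMBOLS = 200  # hypothetical coherence interval, in symbols

# (BTS antennas, simultaneous users); illustrative values only
for antennas, users in [(8, 4), (64, 6), (128, 12)]:
    downlink = overhead_fraction(downlink_training_pilots(antennas), COHERENCE_SYMBOLS)
    uplink = overhead_fraction(uplink_training_pilots(users), COHERENCE_SYMBOLS)
    print(f"{antennas:3d} antennas, {users:2d} users: "
          f"downlink training {downlink:.0%}, uplink training {uplink:.0%}")
```

Under these assumptions the downlink-training overhead grows with the antenna count, while the uplink-training overhead depends only on the number of users, which is the motivation for the TDD approach.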

These timing, processing, and data collection challenges make prototyping vital. For researchers to validate theory, this means moving from theoretical work to testbeds. Using real-world waveforms in real-world scenarios, researchers can develop prototypes to determine the feasibility and commercial viability of massive MIMO. As with any new wireless standard or technology, the transition from concept to prototype impacts the time to actual deployment and commercialization. And the faster researchers can build prototypes, the sooner society can benefit from the innovations.

1. Massive MIMO Prototype Synopsis

Outlined below is a complete Massive MIMO Application Framework. It includes the hardware and software needed to build the world’s most versatile, flexible, and scalable massive MIMO testbed capable of real-time, two-way communication over bands and bandwidths of interest to the research community. With NI software defined radios (SDRs) and LabVIEW system design software, the modular nature of the MIMO system allows for growth from only a few nodes to a 128-antenna massive MIMO system. Because the hardware is flexible, it can be redeployed in other configurations as wireless research needs evolve over time, such as distributed nodes in an ad-hoc network or multi-cell coordinated networks.

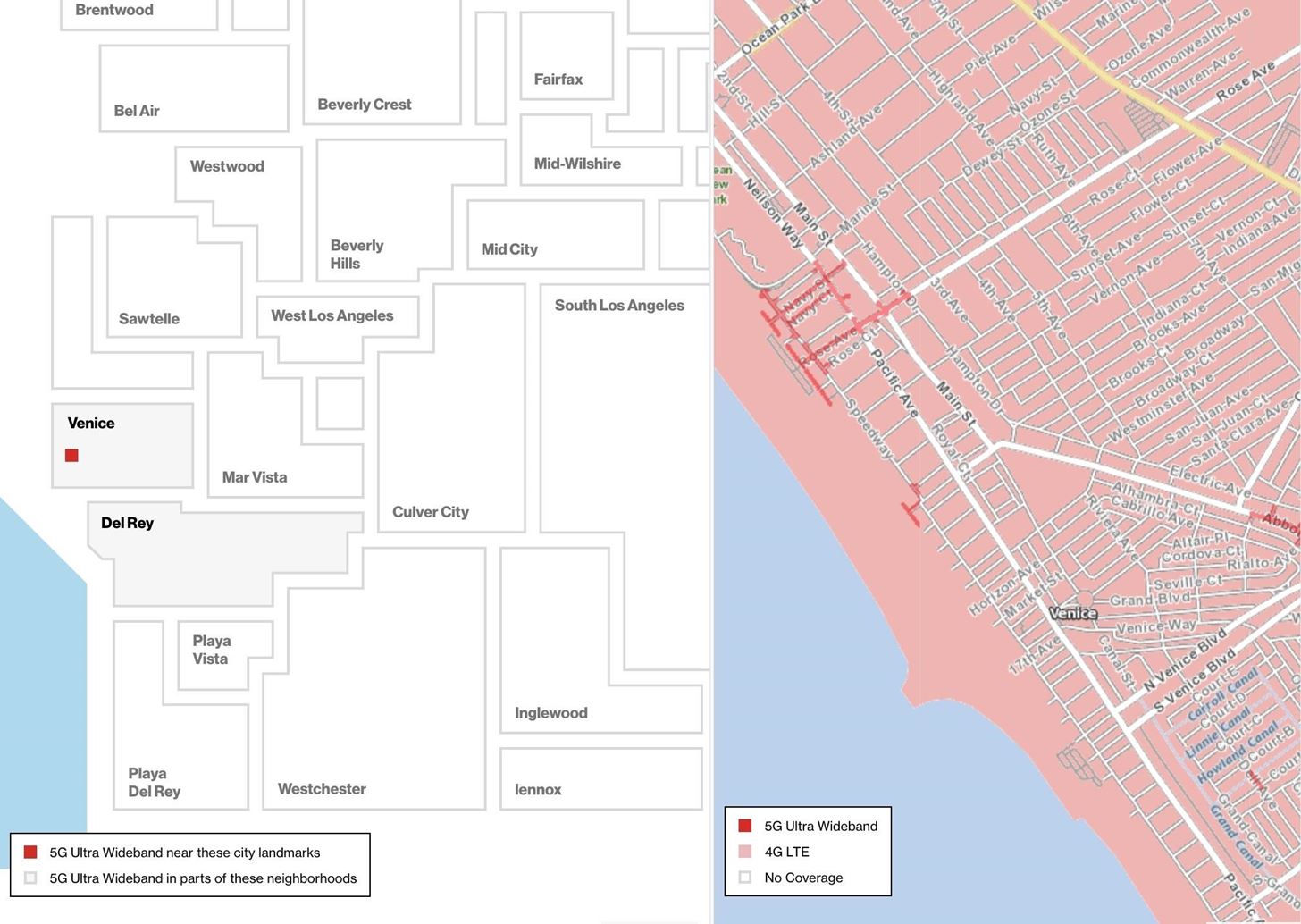

Figure 1. The massive MIMO testbed at Lund University in Sweden is based on USRP RIO (a) with a custom cross-polarized patch antenna array (b).

Professors Ove Edfors and Fredrik Tufvesson from Lund University in Sweden worked with NI to develop the world’s largest MIMO system (see Figure 1) using the NI Massive MIMO Application Framework. Their system uses 50 USRP RIO SDRs to realize a 100-antenna configuration for the massive MIMO BTS described in Table 1. Using SDR concepts, NI and Lund University research teams developed the system software and physical layer (PHY) using an LTE-like PHY and TDD for mobile access. The software developed through this collaboration is available as the software component of the Massive MIMO Application Framework. Table 1 shows the system and protocol parameters supported by the Massive MIMO Application Framework.

Table 1. Massive MIMO Application Framework System Parameters

2. Massive MIMO System Architecture

A massive MIMO system, as with any communication network, consists of the BTS and user equipment (UE) or mobile users. Massive MIMO, however, departs from the conventional topology by allocating a large number of BTS antennas to communicate with multiple UEs simultaneously. In the system that NI and Lund University developed, the BTS uses a system design factor of 10 base station antenna elements per UE, providing 10 users with simultaneous, full-bandwidth access to the 100-antenna base station. This design factor of 10 base station antennas per UE has been shown to allow most of the theoretical gains to be harvested.

In a massive MIMO system, a set of UEs concurrently transmit an orthogonal pilot set to the BTS. The uplink pilots received at the BTS can then be used to estimate the channel matrix. In the downlink time slot, this channel estimate is used to compute a precoder for the downlink signals. Ideally, this results in each mobile user receiving an interference-free channel with the message intended for them. Precoder design is an open area of research and can be tailored to various system design objectives. For instance, precoders can be designed to null interference at other users, minimize total radiated power, or reduce the peak-to-average power ratio of transmitted RF signals.
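The pilot, channel-estimate, and precoder sequence described above can be sketched in a few lines. The example below is a toy illustration under stated assumptions, not the framework's implementation: it uses two single-antenna users, eight BTS antennas, a noiseless channel, Hadamard pilots, and a zero-forcing precoder (one of many possible precoder designs). All function names are hypothetical.

```python
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def conj_transpose(a):
    """Hermitian (conjugate) transpose."""
    return [[a[i][j].conjugate() for i in range(len(a))] for j in range(len(a[0]))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def inverse_2x2(m):
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def estimate_uplink_channel(received, pilots):
    """Least-squares estimate G_hat = Y P^H / T, valid when P P^H = T I."""
    num_pilot_symbols = len(pilots[0])
    y_ph = matmul(received, conj_transpose(pilots))
    return [[value / num_pilot_symbols for value in row] for row in y_ph]


def zero_forcing_precoder(downlink_channel):
    """W = H^H (H H^H)^-1 for a two-user downlink channel H of size 2 x M."""
    h_herm = conj_transpose(downlink_channel)
    gram = matmul(downlink_channel, h_herm)  # 2 x 2
    return matmul(h_herm, inverse_2x2(gram))  # M x 2


random.seed(0)
NUM_ANTENNAS, NUM_USERS = 8, 2  # toy sizes, far smaller than a real testbed

# Uplink channel G (M x K) with random complex Gaussian entries.
uplink = [[complex(random.gauss(0, 1), random.gauss(0, 1)) for _ in range(NUM_USERS)]
          for _ in range(NUM_ANTENNAS)]

pilots = [[1, 1], [1, -1]]         # orthogonal pilots, one row per user
received = matmul(uplink, pilots)  # noiseless uplink observation Y = G P

g_hat = estimate_uplink_channel(received, pilots)
downlink = transpose(g_hat)        # reciprocity: downlink channel is G^T (K x M)
precoder = zero_forcing_precoder(downlink)

effective = matmul(downlink, precoder)  # ideally the K x K identity
for row in effective:
    print([f"{abs(value):.3f}" for value in row])
```

In this idealized setting the effective channel is the identity matrix, so each user sees only its own stream. With noise, imperfect reciprocity calibration, or an outdated estimate, residual inter-user interference would remain.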

Although many configurations are possible with this architecture, the Massive MIMO Application Framework supports up to 20 MHz of instantaneous real-time bandwidth, scales from 64 to 128 antennas, and can be used with multiple independent UEs. The LTE-like protocol employed uses a 2,048-point fast Fourier transform (FFT) and a 0.5 ms slot time, as shown in Table 1. The 0.5 ms slot time ensures adequate channel coherence and facilitates channel reciprocity in mobile testing scenarios (in other words, when the UE is moving).
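The numerology above can be checked with some quick arithmetic. The FFT size and slot time come from the text; the 30.72 MS/s sample rate is an assumption (the standard LTE rate for 20 MHz channels), and the 3.7 GHz carrier, the 30 m/s UE speed, and the 0.423 / f_D coherence-time rule of thumb are illustrative choices rather than framework parameters.

```python
FFT_SIZE = 2048              # from the text
SLOT_TIME_S = 0.5e-3         # from the text
SAMPLE_RATE_HZ = 30.72e6     # assumption: standard LTE 20 MHz sample rate
SPEED_OF_LIGHT_M_S = 299_792_458.0

subcarrier_spacing_hz = SAMPLE_RATE_HZ / FFT_SIZE
samples_per_slot = round(SAMPLE_RATE_HZ * SLOT_TIME_S)


def max_doppler_hz(speed_m_s: float, carrier_hz: float) -> float:
    """Maximum Doppler shift for a UE moving at the given speed."""
    return speed_m_s * carrier_hz / SPEED_OF_LIGHT_M_S


def coherence_time_s(speed_m_s: float, carrier_hz: float) -> float:
    """Approximate coherence time using the 0.423 / f_D rule of thumb."""
    return 0.423 / max_doppler_hz(speed_m_s, carrier_hz)


CARRIER_HZ = 3.7e9      # hypothetical carrier frequency
UE_SPEED_M_S = 30.0     # hypothetical UE speed (about 108 km/h)

print(f"Subcarrier spacing: {subcarrier_spacing_hz / 1e3:.1f} kHz")
print(f"Samples per slot:   {samples_per_slot}")
print(f"Coherence time:     {coherence_time_s(UE_SPEED_M_S, CARRIER_HZ) * 1e3:.2f} ms "
      f"(slot is {SLOT_TIME_S * 1e3:.1f} ms)")
```

Under these assumptions the coherence time stays above the slot time, so a channel estimate taken from uplink pilots is still usable when the downlink precoder is applied in the same slot.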

Massive MIMO Hardware and Software Elements

Designing a massive MIMO system requires four key attributes:

- Flexible SDRs that can acquire and transmit RF signals

- Accurate time and frequency synchronization among the radio heads

- A high-throughput deterministic bus for moving and aggregating large amounts of data

- High-performance processing for PHY and media access control (MAC) execution to meet the real-time performance requirements

Ideally, these key attributes can also be rapidly customized for a wide variety of research needs.

The NI-based Massive MIMO Application Framework combines SDRs, clock distribution modules, high-throughput PXI systems, and LabVIEW to provide a robust, deterministic prototyping platform for research. This section details the various hardware and software elements used in both the NI-based massive MIMO base station and UE terminals.

USRP Software Defined Radio

The USRP RIO software defined radio provides an integrated 2×2 MIMO transceiver and a high-performance Xilinx Kintex-7 FPGA for accelerating baseband processing, all within a half-width 1U rack-mountable enclosure. It connects to the system controller through cabled PCI Express x4, allowing up to 800 MB/s of streaming data transfer to a desktop or PXI Express host computer (or 200 MB/s to a laptop over ExpressCard). Figure 2 provides a block diagram overview of the USRP RIO hardware.

USRP RIO is powered by the LabVIEW reconfigurable I/O (RIO) architecture, which combines open LabVIEW system design software with high-performance hardware to dramatically simplify development. The tight hardware and software integration alleviates system integration challenges, which are significant in a system of this scale, so researchers can focus on research. Although the NI application framework software is written entirely in the LabVIEW programming language, LabVIEW can incorporate IP from other design languages such as .m file script, ANSI C/C++, and HDL to help expedite development through code reuse.

Figure 2. USRP RIO Hardware (a) and System Block Diagram (b)

PXI Express Chassis Backplane

The Massive MIMO Application Framework uses PXIe-1085, an advanced 18-slot PXI chassis that features PCI Express Generation 2 technologies in every slot for high-throughput, low-latency applications. The chassis is capable of 4 GB/s of per-slot bandwidth and 12 GB/s of system bandwidth. Figure 3 shows the dual-switch backplane architecture. Multiple PXI chassis can be daisy chained together or put in a star configuration when building higher channel-count systems.

Figure 3. 18-Slot PXIe-1085 Chassis (a) and System Diagram (b)

High-Performance Reconfigurable FPGA Processing Module

The Massive MIMO Application Framework uses FlexRIO FPGA modules to add flexible, high-performance processing modules, programmable with the LabVIEW FPGA Module, within the PXI form factor. The PXIe-7976R FlexRIO FPGA module can be used standalone, providing a large and customizable Xilinx Kintex-7 410T with PCI Express Generation 2 x8 connectivity to the PXI Express backplane. Many plug-in FlexRIO adapter modules can extend the platform’s I/O capabilities with high-performance RF transceivers, baseband analog-to-digital converters (ADCs)/digital-to-analog converters (DACs), and high-speed digital I/O.

Figure 4. PXIe-7976R FlexRIO Module (a) and System Diagram (b)

8-Channel Clock Synchronization

The Ettus Research OctoClock 8-channel clock distribution module provides both frequency and time synchronization for up to eight USRP devices by amplifying and splitting an external 10 MHz reference and pulse per second (PPS) signal eight ways through matched-length traces. The OctoClock-G adds an internal time and frequency reference using an integrated GPS-disciplined oscillator (GPSDO). Figure 5 shows a system overview of the OctoClock-G. A switch on the front panel gives the user the ability to choose between the internal GPSDO and an externally supplied reference. With OctoClock modules, users can easily build MIMO systems and work with higher channel-count systems that might include MIMO research among others.

Figure 5. OctoClock-G Module (a) and System Diagram (b)

3. LabVIEW System Design Environment

LabVIEW provides an integrated tool flow for managing system-level hardware and software details; visualizing system information in a GUI; developing general-purpose processor (GPP), real-time, and FPGA code; and deploying code to a research testbed. With LabVIEW, users can integrate additional programming approaches such as ANSI C/C++ through call library nodes, VHDL through the IP integration node, and even .m file scripts through the LabVIEW MathScript RT Module. This makes it possible to develop high-performance implementations that are also highly readable and customizable. All hardware and software is managed in a single LabVIEW project, which gives the researcher the ability to deploy code to all processing elements and run testbed scenarios with a single environment. The Massive MIMO Application Framework uses LabVIEW for its high productivity and ability to program and control the details of the I/O via LabVIEW FPGA.

Figure 6. LabVIEW Project and LabVIEW FPGA Application

Massive MIMO BTS Application Framework Architecture

The hardware and software platform elements above combine to form a testbed that scales from a few antennas to more than 128 synchronized antennas. For simplicity, this white paper outlines 64-, 96-, and 128-antenna configurations. The 128-antenna system includes 64 dual-channel USRP RIO devices tethered to four PXI chassis configured in a star architecture. The master chassis aggregates data for centralized processing with both FPGA processors and a PXI controller based on quad-core Intel i7.

In Figure 7, the master uses the PXIe-1085 chassis as the main data aggregation node and real-time signal processing engine. The PXI chassis provides 17 slots open for input/output devices, timing and synchronization, FlexRIO FPGA boards for real-time signal processing, and extension modules to connect to the “sub” chassis. A 128-antenna massive MIMO BTS requires very high data throughput to aggregate and process I and Q samples for both transmit and receive on 128 channels in real time. The PXIe-1085 is well suited to this task, supporting PCI Express Generation 2 x8 data paths capable of up to 3.2 GB/s throughput.

Figure 7. Scalable Massive MIMO System Diagram Combining PXI and USRP RIO
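The throughput requirement for aggregating I and Q samples can be estimated with a short calculation. This is a back-of-the-envelope sketch, assuming a 30.72 MS/s sample rate per channel and 16-bit I plus 16-bit Q samples (4 bytes per complex sample); neither value is stated in the text, and the names are illustrative.

```python
SAMPLE_RATE_HZ = 30.72e6       # assumption: LTE-like 20 MHz sample rate
BYTES_PER_IQ_SAMPLE = 4        # assumption: 16-bit I + 16-bit Q
CHANNELS_PER_USRP = 2          # from the text: USRP RIO is a 2x2 transceiver
USRP_LINK_LIMIT_B_S = 800e6    # from the text: PCI Express x4 streaming limit


def raw_iq_rate_bytes_per_s(num_channels: int) -> float:
    """Raw one-direction I/Q data rate for the given number of RF channels."""
    return num_channels * SAMPLE_RATE_HZ * BYTES_PER_IQ_SAMPLE


per_usrp = raw_iq_rate_bytes_per_s(CHANNELS_PER_USRP)
print(f"Per USRP RIO: {per_usrp / 1e6:.2f} MB/s "
      f"(link limit {USRP_LINK_LIMIT_B_S / 1e6:.0f} MB/s)")

for antennas in (64, 96, 128):
    rate = raw_iq_rate_bytes_per_s(antennas)
    print(f"{antennas:3d} antennas: {rate / 1e9:.2f} GB/s per direction")
```

Under these assumptions, 128 channels produce about 15.73 GB/s of raw samples per direction, which is consistent with the "over 15.7 GB/s" figure quoted in the conclusion. Each dual-channel USRP RIO stays well below its 800 MB/s link limit.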

In slot 1 of the master chassis, the PXIe-8135 RT controller or embedded computer acts as a central system controller. The PXIe-8135 RT features a 2.3 GHz quad-core Intel Core i7-3610QE processor (3.3 GHz maximum in single-core Turbo Boost mode). The master chassis houses four PXIe-8384 (S1 to S4) interface modules to connect the Sub_n chassis to the master system. The connection between the chassis uses MXI and specifically PCI Express Generation 2 x8, providing up to 3.2 GB/s between the master and each sub node.

The system also features up to eight PXIe-7976R FlexRIO FPGA modules to address the real-time signal-processing requirements for the massive MIMO system. The slot locations provide an example configuration where the FPGAs can be cascaded to support data processing from each of the sub nodes. Each FlexRIO module can receive or transmit data across the backplane to each other and to all the USRP RIOs with < 5 microseconds of latency and up to 3 GB/s throughput.

Timing and Synchronization

Timing and synchronization are important aspects of any system that deploys large numbers of radios; thus, they are critical in a massive MIMO system. The BTS system shares a common 10 MHz reference clock and a digital trigger to start acquisition or generation on each radio, ensuring system-level synchronization across the entire system (see Figure 8). The PXIe-6674T timing and synchronization module with OCXO, located in slot 10 of the master chassis, produces a very stable and accurate 10 MHz reference clock (80 ppb accuracy) and supplies a digital trigger for device synchronization to the master OctoClock-G clock distribution module. The OctoClock-G then supplies and buffers the 10 MHz reference (MCLK) and trigger (MTrig) to OctoClock modules one through eight that feed the USRP RIO devices, thereby ensuring that each antenna shares the 10 MHz reference clock and master trigger. The control architecture proposed offers very precise control of each radio/antenna element.

Figure 8. Massive MIMO Clock Distribution Diagram
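The two-level clock distribution tree described above can be sized with simple fan-out arithmetic. The sketch below assumes one master OctoClock-G feeding leaf OctoClock modules with eight outputs each and two antennas per USRP RIO, as the text describes for the 128-antenna case. The counts it produces for the 64- and 96-antenna configurations are derived here, not taken from Table 2, and the 3.7 GHz carrier is a hypothetical value.

```python
import math

OCTOCLOCK_OUTPUTS = 8     # from the text: 8-channel clock distribution
ANTENNAS_PER_USRP = 2     # from the text: dual-channel USRP RIO


def clock_tree(num_antennas: int) -> dict:
    """Count the devices in a master plus leaf OctoClock distribution tree."""
    usrps = math.ceil(num_antennas / ANTENNAS_PER_USRP)
    leaf_octoclocks = math.ceil(usrps / OCTOCLOCK_OUTPUTS)
    if leaf_octoclocks > OCTOCLOCK_OUTPUTS:
        raise ValueError("a single master OctoClock cannot feed this many leaves")
    return {"usrps": usrps, "leaf_octoclocks": leaf_octoclocks, "master_octoclocks": 1}


def reference_offset_hz(carrier_hz: float, accuracy_ppb: float) -> float:
    """Worst-case absolute carrier offset caused by reference clock inaccuracy."""
    return carrier_hz * accuracy_ppb * 1e-9


for antennas in (64, 96, 128):
    print(antennas, clock_tree(antennas))

# Hypothetical 3.7 GHz carrier with the 80 ppb reference accuracy from the text.
print(f"Absolute offset: {reference_offset_hz(3.7e9, 80):.0f} Hz")
```

Because every radio derives its local oscillator from the same 10 MHz reference, this absolute offset is common to all antennas; the relative frequency offset between BTS radios is what the shared reference removes.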

Table 2 provides a quick reference of the base station parts list for the 64-, 96-, and 128-antenna systems. It includes hardware devices and cables used to connect the devices as shown in Figure 1.

Table 2. Massive MIMO Base Station Parts List

4. BTS Software Architecture

The base station application framework software is designed to meet the system objectives outlined in Table 1 with OFDM PHY processing distributed among the FPGAs in the USRP RIO devices and MIMO PHY processing elements distributed among the FPGAs in the PXI master chassis. Higher level MAC functions run on the Intel-based general-purpose processor (GPP) in the PXI controller. The system architecture allows for large amounts of data processing with the low latency needed to maintain channel reciprocity. Precoding parameters are transferred directly from the receiver to the transmitter to maximize system performance.

Figure 9. Massive MIMO Data and Processing Diagram

Starting at the antenna, the OFDM PHY processing is performed in the FPGA, which allows the most computationally intensive processing to happen near the antenna. The resulting computations are then combined at the MIMO receiver IP where channel information is resolved for each user and each subcarrier. The calculated channel parameters are transferred to the MIMO TX block where precoding is applied, focusing energy on the return path at a single user. Although some aspects of the MAC are implemented in the FPGA, the majority of it and other upper layer processing are implemented on the GPP. The specific algorithms used for each stage of the system are an active area of research. The entire system is reconfigurable and implemented in LabVIEW and LabVIEW FPGA, optimized for speed without sacrificing readability.
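To illustrate why OFDM processing resolves channel information per subcarrier, the following toy example runs one OFDM symbol through a multipath channel. It is a generic textbook sketch in Python rather than the framework's LabVIEW FPGA code; the 16-point transform, cyclic prefix length, channel taps, and function names are all illustrative assumptions.

```python
import cmath


def dft(x, inverse=False):
    """Naive discrete Fourier transform (the inverse is scaled by 1/N)."""
    n = len(x)
    sign = 1 if inverse else -1
    out = [sum(x[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n) for t in range(n))
           for k in range(n)]
    return [value / n for value in out] if inverse else out


def ofdm_modulate(symbols, cp_len):
    """Inverse DFT followed by a cyclic prefix copied from the symbol's tail."""
    time_samples = dft(symbols, inverse=True)
    return time_samples[-cp_len:] + time_samples


def apply_channel(samples, taps):
    """Linear convolution with the channel taps, truncated to the input length."""
    return [sum(taps[d] * samples[i - d] for d in range(len(taps)) if i - d >= 0)
            for i in range(len(samples))]


def ofdm_demodulate(samples, n_fft, cp_len):
    """Drop the cyclic prefix and return the per-subcarrier values."""
    return dft(samples[cp_len:cp_len + n_fft])


N_FFT, CP_LEN = 16, 4            # toy sizes; the framework uses a 2,048-point FFT
taps = [1.0, 0.5j, 0.25]         # hypothetical 3-tap multipath channel
qpsk = [1 + 1j, 1 - 1j, -1 + 1j, -1 - 1j]
tx_symbols = [qpsk[k % 4] for k in range(N_FFT)]

rx_time = apply_channel(ofdm_modulate(tx_symbols, CP_LEN), taps)
rx_freq = ofdm_demodulate(rx_time, N_FFT, CP_LEN)

# With a sufficiently long cyclic prefix, each subcarrier sees one complex gain.
channel_freq = dft(taps + [0.0] * (N_FFT - len(taps)))
equalized = [r / h for r, h in zip(rx_freq, channel_freq)]

max_error = max(abs(e - s) for e, s in zip(equalized, tx_symbols))
print(f"Largest equalization error: {max_error:.2e}")
```

Because the cyclic prefix is longer than the channel memory, the multipath channel reduces to a single complex gain per subcarrier, which is the quantity a MIMO receiver then estimates for each user.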

5. User Equipment

Each UE represents a handset or other wireless device with single input, single output (SISO) or 2×2 MIMO wireless capabilities. The UE prototype uses USRP RIO, with an integrated GPSDO, connected to a laptop using cabled PCI Express to an ExpressCard. The GPSDO is important because it provides improved frequency accuracy and enables synchronization and geo-location capability if needed in future system expansion. A typical testbed implementation would include multiple UE systems where each USRP RIO might represent one or two UE devices. Software on the UE is implemented much like the BTS; however, it is implemented as a single antenna system, placing the PHY in the FPGA of the USRP RIO and the MAC layer on the host PC.

Figure 10. Typical UE Setup With Laptop and USRP RIO

Table 3 provides a quick reference of parts used in a single UE system. It includes hardware devices and cables used to connect the devices as shown in Figure 10. Alternatively, a PCI Express connection can be used if a desktop is chosen for the UE controller.

Table 3. UE Equipment List

Conclusion

NI technology is revolutionizing the prototyping of high-end research systems with LabVIEW system design software coupled with the USRP RIO and PXI platforms. This white paper demonstrates one viable option for building a massive MIMO system in an effort to further 5G research. The unique combination of NI technology used in the application framework enables the synchronization of time and frequency for a large number of radios and the PCI Express infrastructure addresses throughput requirements necessary to transfer and aggregate I and Q samples at a rate over 15.7 GB/s on the uplink and downlink. Design flows for the FPGA simplify high-performance processing on the PHY and MAC layers to meet real-time timing requirements.

To ensure that these products meet the specific needs of wireless researchers, NI is actively collaborating with leading researchers and thought leaders such as Lund University. These collaborations advance exciting fields of study and facilitate the sharing of approaches, IP, and best practices among those needing and using tools like the Massive MIMO Application Framework.


Source: http://www.ni.com/white-paper/52382/en/