Introduction

Discussion of Beyond 5G and 6G topics has started in the academic and research communities, and several research projects are now starting to address the future technology requirements. One part of this is the push to higher frequencies and the talk of “Terahertz Technology”. What is behind this drive towards millimetre wave and now Terahertz technology for beyond 5G, and even 6G mobile networks? In this article, we will turn to our trusted colleague Claude Shannon and consider his work on channel capacity and error coding to see how future cellular technologies will address the fundamental limitations that his work has defined.

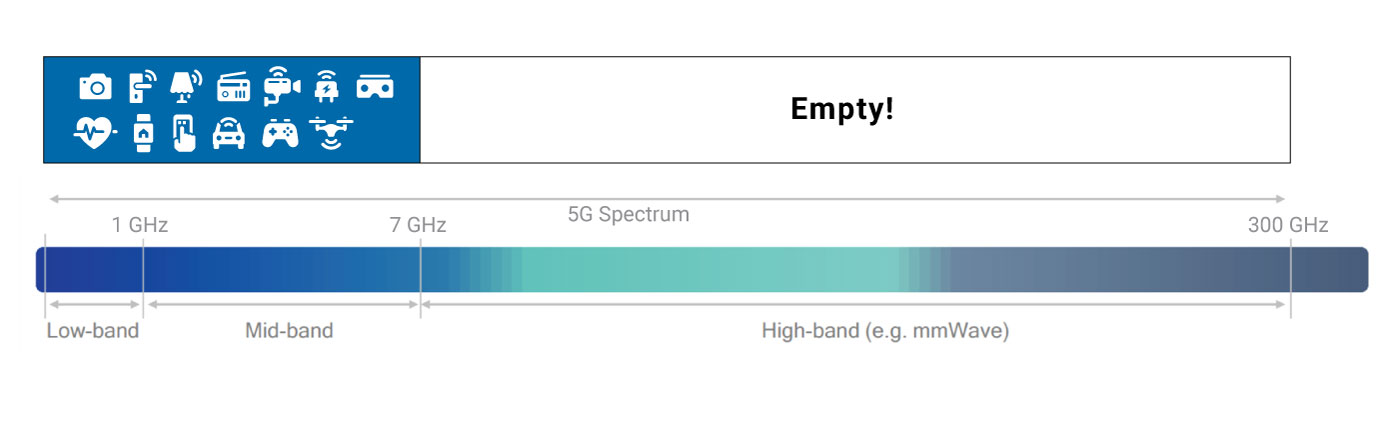

The driver behind this technology trend is the ever-increasing need for more capacity and higher data rates in wireless networks. As more downloads, uploads, streaming services, and interactive AR/VR services are delivered over mobile networks, more capacity and higher data rates are needed to handle this ever-growing number of services, and video keeps moving to higher resolution and higher definition. So, one of the main drivers for future 6G technology is to provide more capacity in the networks.

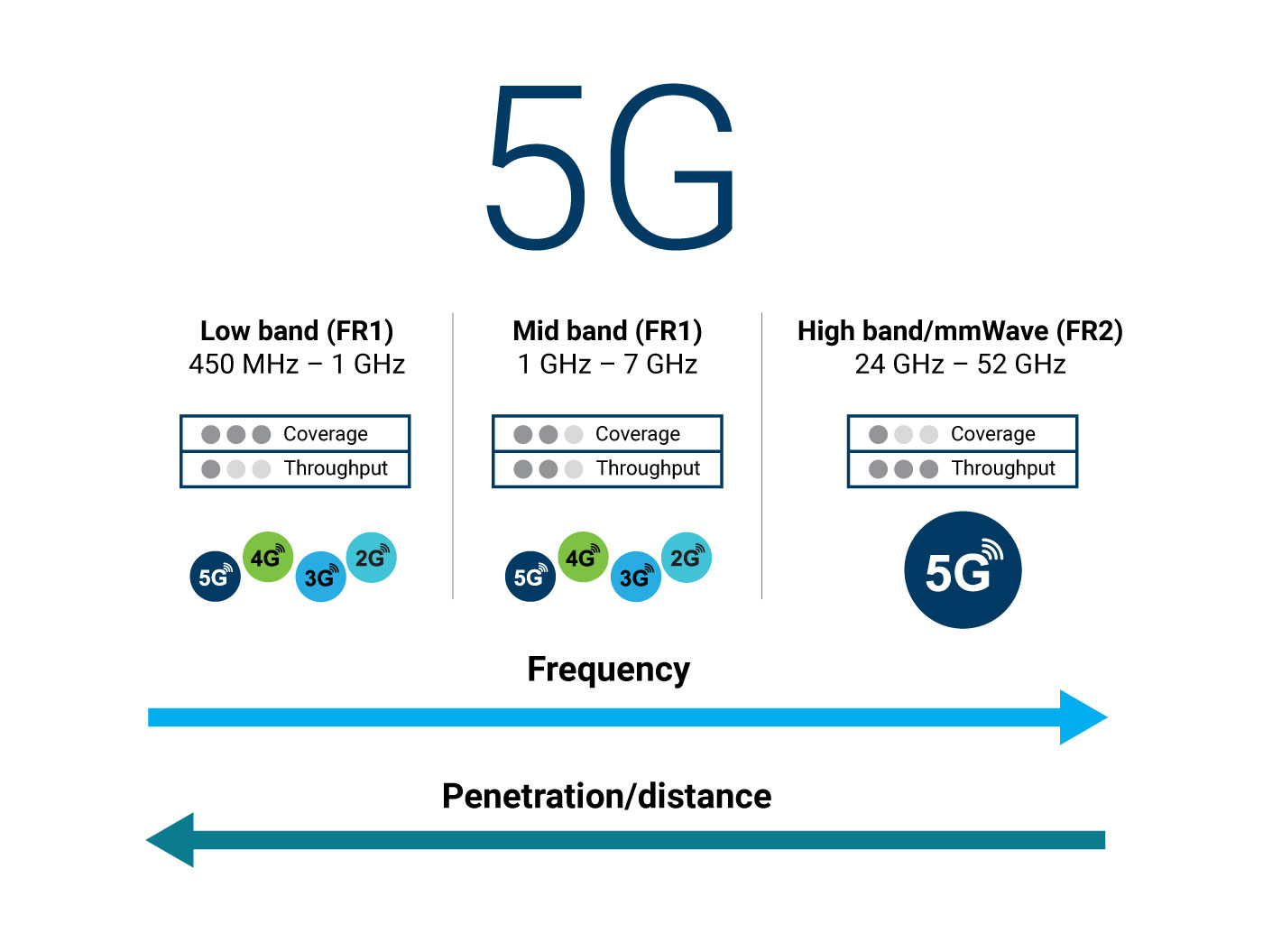

Coverage is usually the other key parameter for wireless network technology. Increasing coverage is generally seen not as a fundamental technology challenge, but rather as a deployment-cost challenge. Sub-1 GHz networks give good coverage, and 5G is now adding satellite communications (Non-Terrestrial Networks) to provide more cost-effective coverage of hard-to-reach areas. But the interest in millimetre wave and terahertz technology for 6G is certainly not driven by coverage requirements (quite the opposite, really).

Defining channel capacity

The fundamental definition of “Channel Capacity” is laid out in Shannon’s equation, based on the groundbreaking paper on the principles of information theory and error coding that Claude Shannon published in 1948. The equation defines the theoretical maximum data rate over a communications medium (a communications channel) in the presence of noise:

C = B log₂(1 + S/N)

Where:

C = Channel Capacity (bit/s).

B = Channel Bandwidth (Hz).

S/N = Signal to Noise Ratio of the received signal (a linear power ratio, not in dB).

Clearly, then, the Channel Capacity is a function of the Channel Bandwidth and of the received Signal to Noise Ratio (SNR). The important point to note in this equation is that the capacity is a linear function of the bandwidth, but only a logarithmic function of the SNR. A 10x increase in bandwidth increases the capacity by 10x, whereas a 10x increase in SNR (for example from 10 dB to 20 dB) only roughly doubles it, and the relative gain shrinks further as the starting SNR rises. This effect can be seen in figure 1, where we plot capacity versus the linear BW term and the logarithmic SNR term. From this we can quickly see that there appear to be more gains in channel capacity from using more bandwidth, rather than trying to improve SNR. However, there is still considerable interest in optimising the SNR term, so we can maximise the available channel capacity for any given bandwidth that is available for use.
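
The linear versus logarithmic behaviour is easy to check numerically. The Python sketch below evaluates C = B log₂(1 + S/N) for an assumed baseline of 100 MHz bandwidth at 10 dB SNR (illustrative values, not read from figure 1) and compares a 10x bandwidth increase with a 10x SNR increase. The helper names are hypothetical.

```python
import math

def shannon_capacity(bandwidth_hz: float, snr_linear: float) -> float:
    """Shannon capacity C = B * log2(1 + S/N), in bit/s (S/N as a linear ratio)."""
    return bandwidth_hz * math.log2(1.0 + snr_linear)

def db_to_linear(db: float) -> float:
    """Convert a power ratio in dB to a linear ratio."""
    return 10.0 ** (db / 10.0)

# Illustrative baseline: 100 MHz channel at 10 dB SNR (assumed values)
base = shannon_capacity(100e6, db_to_linear(10.0))
wider = shannon_capacity(1e9, db_to_linear(10.0))      # 10x bandwidth, same SNR
cleaner = shannon_capacity(100e6, db_to_linear(20.0))  # same bandwidth, 10x SNR

print(f"baseline      : {base / 1e6:7.1f} Mbit/s")
print(f"10x bandwidth : {wider / base:.2f}x")
print(f"10x SNR       : {cleaner / base:.2f}x")
```

With this baseline the 10x bandwidth case gives exactly 10x the capacity, while the 10x SNR case gives about 1.9x; from a higher starting SNR, a further 10x SNR increase gives a smaller relative gain still.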

This effect is seen clearly in the development and evolution of 5G networks, and even 4G networks. Much focus has been put into ‘Carrier Aggregation’, as this technique directly increases the channel bandwidth. Especially for the downlink, this requires relatively little increase in UE performance (mainly more processing). There has been less interest in higher order modulation schemes such as 256 QAM or 1024 QAM, as the capacity gains are smaller and implementing them in the UE is more expensive (a higher performance transmitter and receiver are required).

Increasing the Channel Bandwidth term in 6G

As shown in figure 1, the bandwidth term has a direct linear relationship to the channel capacity. So, network operators want to use ‘new’ bandwidth to expand the capacity of their networks. Of course, the radio spectrum is crowded and only a limited amount of bandwidth is available. This search for new bandwidth was seen in the move to 3G (2100 MHz band), to 4G (800 MHz, 2600 MHz, and re-farming of old 2G/3G bands), and then in 5G with the move to the millimetre wave bands (24-29 GHz, 37-43 GHz).

As we are considering the absolute bandwidth (Hz) for the channel capacity, finding 100 MHz of free spectrum in the 1 GHz band is very demanding (10% of the available spectrum), whereas at 100 GHz it is much easier (0.1% of the available spectrum). Hence, as we move to higher operating frequencies it becomes increasingly easy to find new bandwidth, as far more bandwidth exists and the chance of finding potentially available bandwidth becomes much higher. However, as we move to higher frequencies, the physics of propagation starts to work against us.

As shown in figure 2, the free-space path loss between isotropic antennas increases with the square of the frequency (f²). A 10x increase in the operating frequency therefore leads to a 100x increase in loss (20 dB) for an isotropic radiation source, if the other related parameter, distance, is kept constant. This type of loss is usually overcome with a physically ‘large’ Rx antenna: if the physical size of the Rx antenna is kept the same when we move to higher frequencies, this loss can be mostly recovered. ‘Large’ antennas provide additional antenna gain through their narrow-beam directivity, and this helps to overcome the propagation losses. However, this directivity introduces the need to align the Tx and Rx beams to complete a radio link, and the resulting alignment error between the Tx and Rx beams must be controlled.
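
A minimal sketch of this trade-off, assuming the Friis free-space model, an isotropic transmitter, and a hypothetical 1 cm × 1 cm receive aperture with 60% aperture efficiency. It shows that the 20 dB extra loss for a 10x frequency step is offset by the 20 dB extra gain of a receive antenna whose physical area is held constant. The function names and numbers are illustrative assumptions.

```python
import math

C = 299_792_458.0  # speed of light in m/s

def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Friis free-space path loss between two isotropic antennas, in dB."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / C)

def aperture_gain_dbi(area_m2: float, freq_hz: float, efficiency: float = 0.6) -> float:
    """Gain of an aperture antenna: G = 4*pi*efficiency*A / wavelength**2."""
    wavelength = C / freq_hz
    return 10 * math.log10(4 * math.pi * efficiency * area_m2 / wavelength ** 2)

d = 100.0            # link distance in metres (illustrative)
area = 0.01 * 0.01   # hypothetical 1 cm x 1 cm receive aperture

for f in (28e9, 280e9):
    loss = fspl_db(d, f)
    gain = aperture_gain_dbi(area, f)
    print(f"{f / 1e9:5.0f} GHz: FSPL {loss:6.1f} dB, Rx gain {gain:5.1f} dBi, "
          f"net {loss - gain:6.1f} dB")
```

Under these assumptions the net loss (path loss minus receive antenna gain) is the same at both frequencies, which is the sense in which a constant-size aperture recovers the f² loss; the price is a narrower receive beam that must be aligned.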

The second type of loss we incur as we move to higher frequencies is atmospheric attenuation. This occurs because particles in the atmosphere absorb, reflect, or scatter the energy radiated by the transmitter, and so reduce the amount of signal that arrives at the receiver. This type of loss depends strongly on the relationship between the wavelength (frequency) of the signal and the physical size of the particles in the atmosphere. So as we move to wavelengths of 1 mm or less, moisture (rain, cloud, fog, mist, etc.) and dust particles (e.g. sand) can significantly increase attenuation. In addition, certain molecules (e.g. H2O, CO2, O2) have resonances at specific wavelengths, and these cause sharp increases in attenuation at the resonant frequencies. If we look at the atmospheric attenuation from 10 GHz to 1 THz, we therefore see a gradual increase caused by absorption/scattering, with additional peaks superimposed that are caused by molecular resonances. Between these resonant frequencies we find “atmospheric windows” where propagation is relatively good, and these are seen around 35, 94, 140, 220 and 360 GHz.

Current 5G activity includes the window around 35 GHz (5G is looking at the 37-43 GHz region), and the O2 absorption region at 65 GHz (to enable dense deployment of cells with little leakage of signal to neighbouring cells, thanks to the very high atmospheric losses). The windows around 94 GHz, 140 GHz, and 220 GHz are currently used for other purposes (e.g. satellite weather monitoring, military and imaging radars), so studies for 6G are also considering operation up to the 360 GHz region. As we can see from figure 3, atmospheric losses in these regions are up to 10 times higher than in the existing 38 GHz bands, leading to an extra path loss of 10 dB per kilometre.
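
To see how the atmospheric term adds to free-space loss, the sketch below combines the Friis free-space loss with a specific attenuation in dB/km that grows linearly with distance. The 10 dB/km figure is taken from the paragraph above as an assumed, weather-independent constant at 300 GHz; real values vary with frequency, humidity and rain. Function names are hypothetical.

```python
import math

C = 299_792_458.0  # speed of light in m/s

def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Friis free-space path loss between isotropic antennas, in dB."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / C)

def total_path_loss_db(distance_m: float, freq_hz: float, atten_db_per_km: float) -> float:
    """Free-space loss plus atmospheric attenuation that grows linearly with distance."""
    return fspl_db(distance_m, freq_hz) + atten_db_per_km * distance_m / 1000.0

ATTEN_DB_PER_KM = 10.0  # assumed constant extra attenuation at 300 GHz

for d in (10.0, 100.0, 1000.0):
    free = fspl_db(d, 300e9)
    total = total_path_loss_db(d, 300e9, ATTEN_DB_PER_KM)
    print(f"{d:6.0f} m: free space {free:6.1f} dB, with atmosphere {total:6.1f} dB")
```

The extra atmospheric loss is only about 1 dB over 100 m but 10 dB over 1 km, so this term matters far more for long links than for short-range cells.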

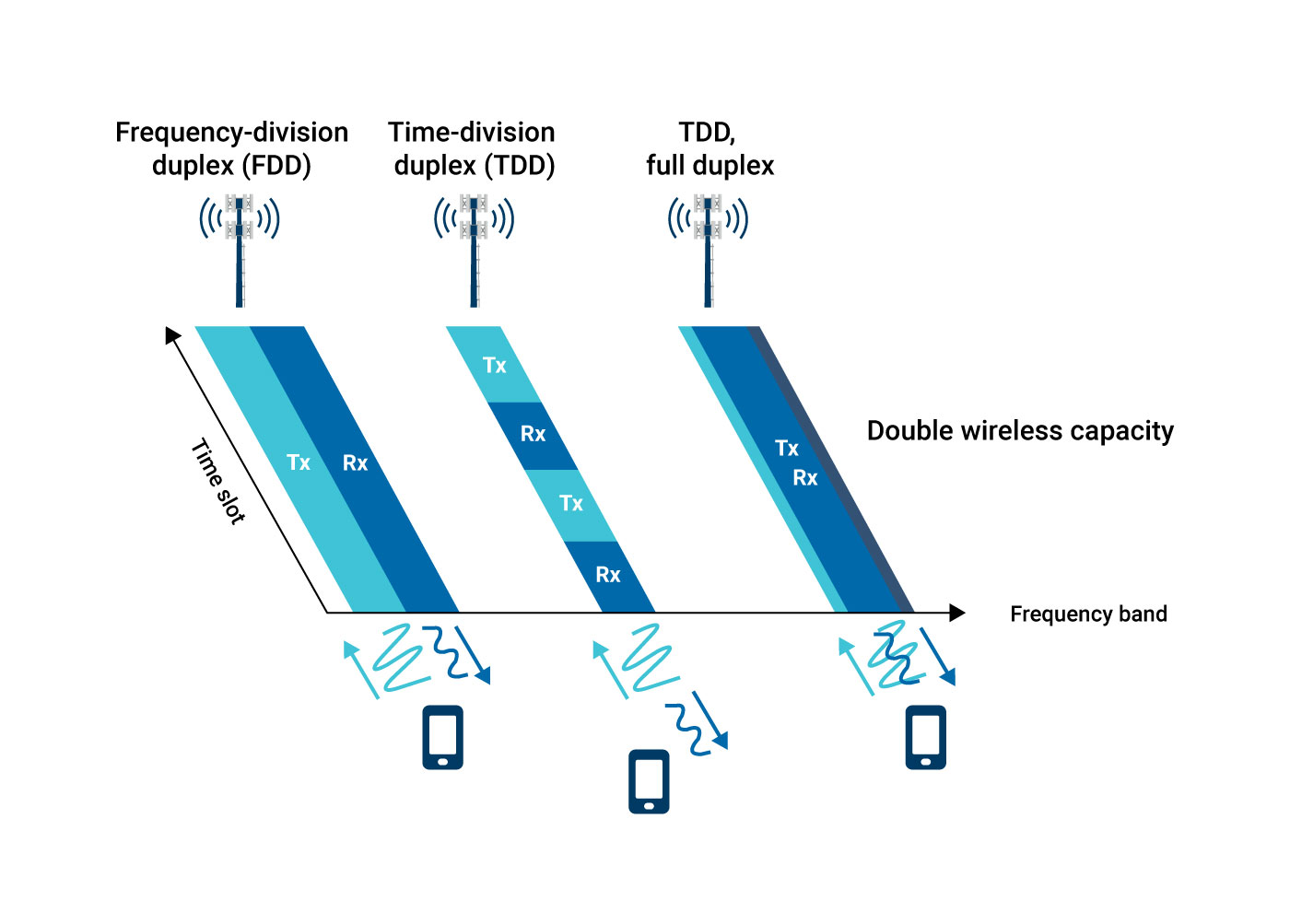

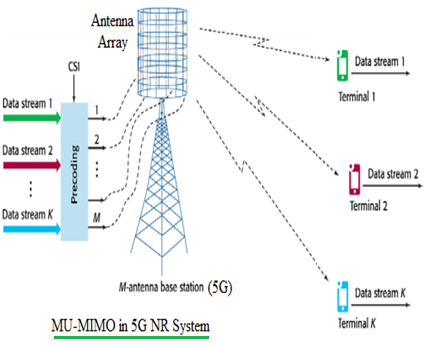

So far we have only considered the ‘real’ physical channel bandwidth. Starting in 3G, and then deployed widely in both 4G and 5G, is the technology called MIMO (Multiple Input Multiple Output). With this technology, we seek to effectively multiply the channel bandwidth by creating additional ‘virtual channels’ between transmitter and receiver. This is done by having multiple antennas at the transmit side and multiple antennas at the receive side. ‘Spatial multiplexing’ MIMO uses baseband pre-coding of the signals to compensate for the subtle path differences between the sets of Tx and Rx antennas, and these subtle path differences enable separate channels to be created on the different Tx-Rx paths. A 2×2 MIMO system can create 2 orthogonal channels, and hence increase the data rate by up to a factor of 2.
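
A minimal sketch of the 2×2 case, assuming two ideal orthogonal spatial channels (for example a channel that becomes the identity matrix after pre-coding) with the total transmit power split equally between them. The function names are hypothetical. It compares the spectral efficiency with a single-antenna link at the same total SNR.

```python
import math

def siso_capacity(snr: float) -> float:
    """Spectral efficiency (bit/s/Hz) of a single-antenna link."""
    return math.log2(1 + snr)

def mimo_capacity_orthogonal(snr: float, streams: int) -> float:
    """Spectral efficiency of `streams` ideal orthogonal spatial channels,
    with the total transmit power split equally between them."""
    return streams * math.log2(1 + snr / streams)

for snr_db in (10, 20, 30):
    snr = 10 ** (snr_db / 10)
    gain = mimo_capacity_orthogonal(snr, 2) / siso_capacity(snr)
    print(f"SNR {snr_db} dB: 2x2 gain {gain:.2f}x")
```

The gain approaches the factor of 2 only at high SNR, because each stream receives half of the transmit power; real channels are also never perfectly orthogonal.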

A further step is called ‘Massive MIMO’, where there are significantly more Tx antennas than there are Rx antennas. In this scenario, a single set of Tx antennas can create individual MIMO paths to multiple Rx sides (or vice versa), so that a single Massive MIMO base station can provide MIMO-enhanced links to multiple devices simultaneously. This can significantly increase the capacity of the cell (although it does not increase the data rate to a single user beyond the normal MIMO rate).

A practical limitation of MIMO is that the spatial channels must be orthogonal, and this orthogonality must then be characterised by measurements and compensated for in the coding algorithms (pre-coding matrices). As we move to higher order MIMO with many more channels to measure and code, and to the more complex channel propagation characteristics of the THz bands, the computational complexity of MIMO can become extremely high, and practical implementation limits can reduce the MIMO performance gains. For 6G there is great interest in developing new algorithms that apply Artificial Intelligence (AI) and Machine Learning (ML) to the MIMO coding process. This should enable more powerful processing to deliver higher MIMO gains in 6G and enable the effective use of MIMO at Terahertz frequencies.

A further proposal that is being considered for future 6G networks is the use of ‘Meta-materials’ to provide a managed/controlled reflection of signals. The channel propagation characteristic, and hence the MIMO capacity gains, are a function of the channel differences (orthogonality) and the ability to measure these differences. This channel characteristic is a function of any reflections that occur along a channel path. Using meta-materials we could actively control the reflections of signals, to create an ‘engineered’ channel path. These engineered channels could then be adjusted to provide optimal reflection of signal for a direct path between Tx and Rx, or to provide an enhanced ‘orthogonality’ to enable high gain MIMO coding to be effective.

Figure 4 shows the difference between a limited-BW approach and a wide-BW approach to achieving high data rates. The limited-BW approach requires very high SNR, high order modulation (1024QAM), and high order MIMO (4×4), and even this combination of 1 GHz + 1024QAM + 4×4 is not yet realisable in 5G. With the wider BW available in THz regions (e.g. 50 GHz), only a modest SNR level (QPSK) and no MIMO are required to reach much higher data rates. So the clear data rate advantage of wider BW can easily be seen.
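
The comparison in figure 4 can be reproduced with simple arithmetic. The sketch assumes roughly one symbol per second per hertz of bandwidth and ignores coding, pilot and guard overheads, so the numbers are idealised peak rates rather than achievable throughputs; the function name is hypothetical.

```python
def peak_rate_gbps(bandwidth_ghz, bits_per_symbol, mimo_layers):
    """Idealised rate: ~1 symbol/s per Hz, no coding, pilot or guard overhead."""
    return bandwidth_ghz * bits_per_symbol * mimo_layers

limited_bw = peak_rate_gbps(1, 10, 4)  # 1 GHz, 1024QAM (10 bit/symbol), 4x4 MIMO
wide_bw = peak_rate_gbps(50, 2, 1)     # 50 GHz, QPSK (2 bit/symbol), no MIMO

print(f"limited BW: {limited_bw} Gbit/s, wide BW: {wide_bw} Gbit/s")
```

Even with 10 bits per symbol and four MIMO layers, the 1 GHz case reaches about 40 Gbit/s, while plain QPSK over 50 GHz reaches about 100 Gbit/s.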

Increasing the SNR term in 6G

The detailed operation of the SNR term, and the related modulation coding scheme (MCS), is shown in figure 5. As we increase the SNR in the channel, it becomes possible to use a higher order MCS to enable a higher transmission rate. Error correction schemes (e.g. Forward Error Correction, FEC) were established as a means to approach these theoretical limits when using a digital modulation scheme. As the SNR is reduced, a particular MCS goes from ‘error free transmission’ to ‘channel limited transmission’, where Shannon’s equation determines the maximum data rate that an error correction process can sustain. This is seen in figure 5, where each MCS type goes from error free to the Shannon limited capacity. In reality, the capacity under channel limited conditions does not reach the Shannon limit, but different error correction schemes attempt to come closer to this theoretical limit (although error correction schemes involve a trade-off between the processing power/speed required for the error correction and the gains in channel capacity). Cellular networks such as 5G normally avoid channel limited conditions and switch between different MCS schemes (based on the available SNR) to aim for error free transmission where possible.

The yellow shaded zone, between the Shannon Limit line and the actual channel capacity of a specific MCS type, denotes the inefficiency, or coding overhead, of the Error Correction scheme.
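
Link adaptation of this kind can be sketched by comparing the measured SNR against the minimum SNR that Shannon’s equation allows for each modulation’s bits per symbol, SNR_min = 2^η − 1, plus a margin standing in for the coding gap. This is a simplified illustration: the 3 dB margin is a hypothetical value, full-rate operation is assumed for each modulation, and real 5G schedulers use tabulated coded-MCS thresholds rather than this formula. Names are hypothetical.

```python
import math

# Bits per symbol for the modulation orders mentioned in the text, lowest first.
MODULATIONS = [("QPSK", 2), ("16QAM", 4), ("64QAM", 6), ("256QAM", 8), ("1024QAM", 10)]

def shannon_min_snr_db(bits_per_symbol):
    """Minimum SNR at which Shannon allows this many bit/s/Hz: SNR = 2**eta - 1."""
    return 10 * math.log10(2 ** bits_per_symbol - 1)

def select_modulation(snr_db, margin_db=3.0):
    """Return the highest-order modulation whose Shannon threshold plus a
    margin fits under the measured SNR, or None if none fits.
    margin_db is a hypothetical stand-in for the practical coding gap."""
    best = None
    for name, bits in MODULATIONS:
        if shannon_min_snr_db(bits) + margin_db <= snr_db:
            best = name
    return best

for snr_db in (5, 15, 25, 35):
    print(f"{snr_db:2d} dB -> {select_modulation(snr_db)}")
```

A result of None means even full-rate QPSK is not supported at that SNR; a real system would fall back to a lower code rate.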

The first aspect of improving the SNR term is to develop new coding schemes and error correction schemes (e.g. beyond current schemes such as Turbo, LDPC, Polar) which attempt to reduce this gap whilst using minimum processing power. This represents the first area of research, to gain improved channel capacity under noise limited conditions without requiring power hungry complex decoding algorithms. As the data rates are dramatically increased, the processing ‘overhead’, the cost/complexity, and the power consumption (battery drain) of implementing the coding scheme must all be kept low. So new coding schemes for more efficient implementation are very important for 6G, with practical implementations that can deliver the 100 Gbps rates being discussed for 6G.

Optimising the channel coding schemes requires more complex channel modelling that includes the effects of absorption and dispersion in the channel. With more accurate models of how the propagation channel affects the signal, coding and error correction schemes can be designed to match the types of errors that are likely to occur more efficiently.

The second aspect of the SNR term is to improve the Signal level at the receiver (increase the Signal part of the SNR) by increasing the signal strength at the transmitter (increasing the transmit, Tx, power). There is normally an upper limit for this Tx power, set by health and safety limits (e.g. SAR limits, human exposure risks) or electromagnetic interference issues. But from a technology implementation viewpoint, there are also limitations in the available Tx power at millimetre wave and Terahertz frequencies, especially if device size and power consumption are limited. This is due to the relatively low Power Added Efficiency (PAE) of amplifier technology at these frequencies. When we attempt to drive the amplifiers to high power, we eventually reach a saturation limit where further input power no longer gives a useful increase in output power (the amplifier goes into saturation). At these saturated power levels, the signal is distorted (reducing range) and the power efficiency of the amplifier is reduced (increasing power consumption).

The chart in figure 6 shows a review of the available saturated (maximum) output power versus frequency for the different semiconductor materials used for electronic circuits. Output power in the range +20 to +40 dBm is commercially available up to 100 GHz. At higher frequencies, the available power for traditional semiconductors quickly drops to the range -10 to +10 dBm, a drop of around 30 dB. The results and trend for InP show promise for useful power at the higher frequencies. Traditional ‘high power’ semiconductors such as GaAs and GaN show high power out to 150 GHz, but have not yet shown commercial-scale results at higher frequencies. Figure 6 also shows the performance of the alternative technology of Travelling Wave Tubes (TWT), which can generate sufficient power at the higher frequencies. However, the cost, size, and power consumption of a TWT make it unsuitable for personal cellular communications today.

For higher frequencies (above 100 GHz), existing semiconductor materials have very low power efficiency (10% PAE, for example). This means that generally only low output powers are achievable using conventional techniques, and there are heating issues, as a high proportion (90%) of the power is ‘wasted’ and must be dissipated. This calls for new fundamental research into semiconductor materials and compounds for higher efficiency, and into new device packaging for lower losses and improved heat management. Transporting the signals within the integrated circuits and to the antenna with low loss also becomes a critical technology issue, as a large amount of power may be lost (turned into heat) just in transporting the signal from the amplifier to the antenna. So, there is a key challenge in packaging the integrated circuits without significant loss while maintaining proper heat dissipation.
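
The heating problem follows directly from the definition of power-added efficiency, PAE = (Pout − Pin) / Pdc, together with energy conservation (Pdc + Pin = Pout + heat). The sketch below uses hypothetical numbers (10 mW output, 1 mW drive, 10% PAE) and a hypothetical helper name.

```python
def pa_power_budget(p_out_w, p_in_w, pae):
    """Return (DC supply power, dissipated heat) in watts for an amplifier
    with power-added efficiency PAE = (Pout - Pin) / Pdc."""
    p_dc = (p_out_w - p_in_w) / pae
    heat = p_dc + p_in_w - p_out_w  # energy balance: Pdc + Pin = Pout + heat
    return p_dc, heat

# Hypothetical THz power amplifier: 10 mW out, 1 mW drive, 10 % PAE
p_dc, heat = pa_power_budget(0.010, 0.001, 0.10)
print(f"DC power {p_dc * 1e3:.0f} mW, heat {heat * 1e3:.0f} mW "
      f"({100 * heat / p_dc:.0f} % of DC power)")
```

At 10% PAE, 90% of the DC supply power ends up as heat, matching the 90% ‘wasted’ power described above.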

In addition to the device/component level packaging discussed above, a commercial product also requires consumer packaging so that the final product can be easily handled by the end user. This requires plastic/composite packaging materials that give sufficient scratch, moisture, dirt, and temperature protection to the internal circuits. When moving to the higher frequency bands above 100 GHz, the properties of these materials must be verified to ensure low transmission loss and minimal impact on the beam shaping/forming circuits, so that the required SNR can be maintained.

Moving up to THz frequencies results in a large increase in atmospheric path loss, as discussed earlier in this paper. Very high element count (massive) antenna arrays are one way to compensate for this path loss, by forming higher power directional beams. Designing such arrays to operate with high efficiency at THz frequencies poses many challenges, from the design of the feed network to antenna elements that support GHz-wide bandwidths. The benefit is that an array of multiple transmitters can produce a high output power more easily than a single high-power output stage. The challenge is then to focus the combined power of the individual antenna elements into a single beam towards the receiver.
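
The benefit of combining many low-power elements can be sketched with the ideal phased-array relation: with N perfectly phase-aligned elements each delivering power P, total radiated power grows by N and the array gain by N, so EIRP grows by 20·log10(N) dB. This ignores feed losses, mutual coupling and phase errors; the 0 dBm per-element power and the function name are hypothetical.

```python
import math

def array_eirp_dbm(n_elements, p_element_dbm, element_gain_dbi=0.0):
    """Ideal EIRP of an N-element phased array with perfect coherent combining:
    +10*log10(N) from total power and +10*log10(N) from array gain."""
    return p_element_dbm + element_gain_dbi + 20 * math.log10(n_elements)

# Hypothetical elements delivering 0 dBm each, element gain ignored
for n in (1, 16, 64, 256):
    print(f"{n:4d} elements: EIRP {array_eirp_dbm(n, 0.0):5.1f} dBm")
```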

So, we can use beamforming antenna arrays for higher gain (more antennas giving more Tx power arriving at a receiver) to overcome the atmospheric propagation losses and the reduced output power. The use of massive arrays to create high antenna gain, combined with the higher frequency, results in very narrow beams. Since forming narrow, high gain beams will be very important, it is essential to optimise the beamforming methods to provide high dynamic range and high flexibility at a reasonable cost and energy consumption. These higher frequency communication links will depend on ‘Line Of Sight’ and direct-reflected paths, not on scattered and diffracted paths, as the loss of signal strength due to diffraction or scattering is likely to make signal levels too low for detection. So, along with beamforming, there needs to be beam management that enables these narrow beams to be effectively aligned and maintained as users move within the network. Current 5G beam management uses a system of Reference Signals and UE measurements/reports to track the beams and select the best beam. This method can incur significant overheads in channel capacity, and for 6G there needs to be research into more advanced techniques for beam management.
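
To illustrate why narrow beams need careful alignment, the sketch below steers a uniform linear array with half-wavelength element spacing (an assumption) towards 20° and evaluates the normalised array factor slightly off the beam direction. The function names are hypothetical and ideal phase shifters are assumed.

```python
import cmath
import math

def steering_weights(n_elements, steer_deg, spacing_wl=0.5):
    """Phase weights pointing a uniform linear array at steer_deg
    (0 deg = broadside); spacing_wl is the element spacing in wavelengths."""
    k = 2 * math.pi * spacing_wl * math.sin(math.radians(steer_deg))
    return [cmath.exp(-1j * k * n) for n in range(n_elements)]

def array_gain_db(weights, look_deg, spacing_wl=0.5):
    """Normalised array-factor power in direction look_deg (0 dB = beam peak)."""
    k = 2 * math.pi * spacing_wl * math.sin(math.radians(look_deg))
    af = sum(w * cmath.exp(1j * k * n) for n, w in enumerate(weights))
    power = abs(af) ** 2 / len(weights) ** 2
    return 10 * math.log10(max(power, 1e-12))  # clamp to avoid log(0) at nulls

for n in (8, 64):
    w = steering_weights(n, 20.0)
    losses = [round(array_gain_db(w, a), 1) for a in (20.0, 21.0, 23.0)]
    print(f"{n:2d} elements, looking at 20/21/23 deg: {losses} dB")
```

With these assumptions a 1° pointing error costs the 64-element array more than 3 dB, whereas the 8-element array barely notices it, which is why beam management becomes more demanding as arrays grow.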

The third aspect of the SNR term is to reduce the noise in the receiver (to lower the Noise part of the SNR).

The receiver noise becomes an important factor in the move to wider bandwidth (increasing the B term, as discussed above), as a wider bandwidth raises the receiver noise floor. This appears both as an increase in receiver noise power and as a decrease in the power density of the ‘desired signal’, as the same desired signal power (e.g. +30 dBm of Tx power) is spread across a wider bandwidth. Both factors degrade the Signal to Noise Ratio. So reducing the receiver noise power directly improves the SNR of the received signal.

The receiver noise power is made up of the inherent thermal noise power and the active device noise power (shot noise) from the semiconductor process. By improving the performance of the semiconductor material, lower shot noise can be achieved. In addition, a third noise type, transit time noise, occurs in semiconductor devices when they are driven above a certain cut-off frequency (fc). So, there is also interest in raising the cut-off frequency of semiconductor materials so that they can be used efficiently at the higher frequencies of the 100-400 GHz region.

The thermal noise is given by the fundamental equation:

𝑃 = 𝑘𝑇𝐵

Where P is the noise power (W), k is the Boltzmann constant, T is the temperature (in kelvin), and B is the bandwidth (Hz). So, it is clearly seen that increasing the bandwidth term, B, directly increases the thermal noise power. This noise is independent of the semiconductor material, and assuming a ‘room temperature’ device (i.e. without a dedicated ultra-low temperature cooling system), it cannot be avoided and simply increases with wider bandwidth. So, this represents a fundamental limitation which must be accounted for in any new system design.
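
Converting P = kTB to dBm makes the bandwidth penalty concrete. The sketch assumes the conventional 290 K reference temperature and ignores the receiver noise figure, which would add to these values; the function name is hypothetical.

```python
import math

K_BOLTZMANN = 1.380649e-23  # Boltzmann constant in J/K

def thermal_noise_dbm(bandwidth_hz, temperature_k=290.0):
    """Thermal noise power P = kTB, converted from watts to dBm."""
    p_watts = K_BOLTZMANN * temperature_k * bandwidth_hz
    return 10 * math.log10(p_watts / 1e-3)

for bw in (100e6, 1e9, 10e9, 50e9):
    print(f"{bw / 1e9:6.1f} GHz: {thermal_noise_dbm(bw):6.1f} dBm")
```

The noise floor rises by 10 dB for every 10x increase in bandwidth, from about −94 dBm at 100 MHz to about −74 dBm at 10 GHz.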

Compared with single carrier systems, OFDM (multi-carrier) is more demanding in its requirement for low phase noise. This may limit the efficiency of OFDM systems in Terahertz bands, as currently available device technology has relatively high phase noise. Phase noise normally arises from the reference ‘local oscillator’, which provides a fixed reference frequency/phase against which the received signal is compared to extract the I&Q demodulation information.

The reference oscillator is usually built from a resonator circuit and a feedback circuit, to provide a stable, high-quality reference. But any noise in the feedback circuit generates noise in the resonator output, and hence creates phase noise in the reference signal, which then introduces corresponding phase noise into the demodulated signal. When the Local Oscillator signal of the transmitting and receiving system is generated by multiplying up the reference signal, the phase noise power increases by the square of the multiplication factor (20·log10(N) dB for a factor N). Therefore, it is necessary to take measures such as cleaning up the phase noise of the reference signal before multiplication.
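
The effect of frequency multiplication on phase noise can be sketched as follows, assuming an ideal, noiseless ×N multiplier (real multipliers add some noise of their own). The −120 dBc/Hz reference value and the function name are hypothetical.

```python
import math

def multiplied_phase_noise_dbc(ref_phase_noise_dbc_hz, multiplication_factor):
    """Phase noise after an ideal xN frequency multiplier: phase noise power
    scales by N**2, i.e. rises by 20*log10(N) dB at the same offset frequency."""
    return ref_phase_noise_dbc_hz + 20 * math.log10(multiplication_factor)

# Hypothetical 10 GHz reference at -120 dBc/Hz, multiplied up to 300 GHz
n = 300e9 / 10e9
print(f"x{n:.0f}: {multiplied_phase_noise_dbc(-120.0, n):.1f} dBc/Hz")
```

Multiplying a 10 GHz reference up to 300 GHz (N = 30) raises its phase noise by about 29.5 dB at the same offset frequency.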

In Terahertz bands, phase noise may be addressed by advances in device technology and signal processing. In addition, more efficient access schemes (beyond OFDMA) are being considered for 6G. OFDMA has the benefit of flexibility for different bandwidths, and a low cost, power efficient implementation in devices. This is important to ensure it can be deployed in devices that will be affordable and have acceptable battery life (talk time). With very wide bandwidth systems in 6G and the expectation of higher spectral efficiency (more bits/s/Hz), alternative access schemes are being investigated and tested. The impact of phase noise on the performance of candidate access schemes will need to be verified to ensure that they are feasible to implement.

Measurement challenges for wireless communications in Terahertz bands

The move to higher frequency in THz band brings the same RF device technology challenges to the test equipment. The RF performance (e.g. noise floor, sensitivity, phase noise, spurious emissions) of test equipment needs to be ensured at a level that will give reliable measurements to the required uncertainty/accuracy.

As new semiconductor compounds and processes are developed, the semiconductor wafers need to be characterised so that the device behaviour can be accurately fed into simulation and design tools. The accuracy and reliability of these measurements are essential for good design and modelling of device behaviour when designing terahertz band devices. The principal tool for this characterisation is a Vector Network Analyser (VNA), and new generation VNAs are now able to characterise 70 kHz – 220 GHz in a single sweep, using advanced probes and probe station technology to connect to the test wafers. This ‘single sweep’ approach gives the very highest level of measurement confidence and is essential for the high quality characterisation needed for the next generation of device design. Figure 7 shows a VNA system configured for ‘single sweep’ 70 kHz-220 GHz, being used to characterise semiconductor wafer samples on a probe station.

Wider bandwidth signals require a wider bandwidth receiver to capture and analyse the signal, and this receiver will have a higher noise floor. This noise floor creates a ‘residual EVM’, below which a measurement system cannot measure the EVM of a captured signal. For a 5G NR system (8 x 100 MHz) this is 0.89% EVM, but for a wider bandwidth system (e.g. 10 GHz) it could be 3.2% EVM. So careful attention must be paid to the required performance and measurements for verifying the quality of wide bandwidth signals. When analysing a modulated carrier signal, the very wide bandwidth results in a very low power spectral density of the signal. If the power spectral density of the received signal is comparable to the power spectral density of the receiver noise, accurate measurement will not be possible. The dynamic range and sensitivity of test equipment also become a challenge at very wide bandwidths. It is usually not possible to simply increase the power level of the measured signal to overcome the receiver noise floor, as the ‘total power’ in the receiver may become excessive and cause saturation/non-linear effects in the receiver.
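
The two residual EVM figures above are roughly consistent with a simple noise-limited model, in which EVM_rms ≈ 1/√SNR and the analyser noise floor rises in proportion to the analysis bandwidth. The sketch below applies that model; it is an assumption for illustration, since real residual EVM also includes phase noise and non-linear contributions. Function names are hypothetical.

```python
import math

def evm_to_snr_db(evm_percent):
    """Noise-limited approximation: EVM_rms ~= 1 / sqrt(SNR)."""
    return -20 * math.log10(evm_percent / 100.0)

def snr_db_to_evm(snr_db):
    """Inverse of evm_to_snr_db, returning EVM in percent."""
    return 100.0 * 10 ** (-snr_db / 20.0)

# Start from the 0.89 % residual EVM quoted for 8 x 100 MHz (800 MHz).
snr_800mhz = evm_to_snr_db(0.89)
# Assume a fixed analyser noise density, so the noise floor rises by
# 10*log10(B2/B1) when the analysis bandwidth widens to 10 GHz.
snr_10ghz = snr_800mhz - 10 * math.log10(10e9 / 800e6)
print(f"SNR {snr_800mhz:.1f} dB -> {snr_10ghz:.1f} dB, "
      f"residual EVM {snr_db_to_evm(snr_10ghz):.2f} %")
```

Widening the bandwidth from 800 MHz to 10 GHz raises the noise floor by about 11 dB, taking the residual EVM from 0.89% to about 3.1%, close to the 3.2% quoted.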

To overcome the possible performance limitations (e.g. dynamic range, conversion losses), new architectures are being investigated to give optimal cost/performance in these higher frequency band and wider bandwidth test environments.

This work includes developing new Spectrum Analyser technology and broadband VNA architectures to enable fundamental device characterisation. An example of a 300 GHz spectrum measurement system using a new ‘pre-selector’ technology is shown in figure 8.

Radio transmitters and receivers often use frequency multipliers to generate very high frequency signals from a stable, low frequency reference. One challenge with this method is that the phase noise power of the reference is multiplied by the square of the frequency multiplication factor, which can produce noisy signals that degrade performance. A receiver may also use sub-harmonic mixers to down-convert a high frequency to a more manageable lower frequency, but these sub-harmonic mixers produce many undesired frequency response windows (images). Both effects represent significant challenges for test equipment, as the tester needs very high performance (to measure the signals of interest) and flexible configuration to measure a wide range of devices. So new technologies, devices, and architectures to overcome these implementation challenges are being investigated for high-performance test equipment. One example is the use of photonics and opto-electronic components to implement a high frequency oscillator with low phase noise and high power, where two laser diode sources are mixed together and the resulting difference (beat) frequency is generated in the terahertz band.

During the early stages of a new radio access method or new frequency band, characterisation of the modulation/coding type and of the frequency band propagation is a key research activity. This characterisation is used to help develop and verify models for coding and error correction schemes. To support this, a “Channel Sounding” solution is often used to make measurements on the frequency channel and for waveform evaluation. This channel sounder normally consists of a complex (vector) signal source and a vector signal analyser, which enables both the phase and amplitude of the channel response to be measured. Such vector transmission systems can be built either from a separate Vector Signal Generator and Vector Signal Analyser, or from a combined Vector Network Analyser. This will require Vector Signal Generators and Vector Signal Analysers capable of operating up into the 300 GHz bands. Figure 9 shows a 300 GHz band signal generator and spectrum analyser being used in a laboratory evaluation system.
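
One common way for a channel sounder to recover both amplitude and phase is to transmit a known pseudo-noise probe and correlate the received signal against it. The sketch below illustrates this with a length-7 maximal-length sequence, a hypothetical two-path channel and no noise; real sounders use much longer sequences and must contend with noise and hardware impairments, and this is not presented as the method of any particular instrument. Function names are hypothetical.

```python
import cmath
import math

def m_sequence_len7():
    """Length-7 maximal-length sequence from a[n] = a[n-2] XOR a[n-3]
    (primitive polynomial x^3 + x + 1), mapped 0 -> +1, 1 -> -1."""
    bits = [1, 0, 0]
    while len(bits) < 7:
        bits.append(bits[-2] ^ bits[-3])
    return [1 - 2 * b for b in bits]

def circular_channel(x, taps):
    """Pass a periodically repeated probe through a multipath channel given
    as {delay_in_samples: complex_gain} (circular convolution, no noise)."""
    n = len(x)
    return [sum(g * x[(i - d) % n] for d, g in taps.items()) for i in range(n)]

def estimate_impulse_response(y, x):
    """Correlate received samples with the known probe. For a +/-1 m-sequence
    of length N the periodic autocorrelation is N at lag 0 and -1 elsewhere,
    so r[k] = (N + 1) * h[k] - sum(h) and sum(r) = sum(h); hence
    h[k] = (r[k] + sum(r)) / (N + 1)."""
    n = len(x)
    r = [sum(y[i] * x[(i - k) % n] for i in range(n)) for k in range(n)]
    total = sum(r)
    return [(rk + total) / (n + 1) for rk in r]

probe = m_sequence_len7()
channel = {0: 1.0 + 0.0j, 2: 0.3 - 0.2j}  # hypothetical two-path channel
received = circular_channel(probe, channel)
h_est = estimate_impulse_response(received, probe)

for delay, h in enumerate(h_est):
    if abs(h) > 1e-9:  # skip delays where no path was found
        print(f"delay {delay}: |h| = {abs(h):.3f}, "
              f"phase = {math.degrees(cmath.phase(h)):.1f} deg")
```

Because the probe’s periodic autocorrelation takes only two values, the complex tap at each delay can be recovered exactly in this noiseless case.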

With the expected use of AI/ML in many algorithms that control the radio link (e.g. schedulers for Modulation and Coding Scheme, or MIMO pre-coding), the ability of a network emulator to implement and reproduce these AI/ML based algorithms may become critical for characterising device performance. Currently in 3GPP these algorithm areas are not standardised and not part of the testing scope, but this is likely to change as AI/ML becomes more fundamental to the operation of the network. So, the test equipment may need the ability to implement/reproduce the AI/ML based behaviour.

The move to millimetre wave (24-43 GHz) in 5G has already introduced many new challenges for ‘Over The Air’ (OTA) measurements. OTA is required because the antenna and Tx/Rx circuits are integrated together to provide the required low loss transceiver performance. But this integration means that there is no longer an RF test port for RF measurements; instead, all measurements must be made through the antenna interface. OTA measurement brings challenges in terms of equipment size (large chambers are required to isolate the test device from external signals), measurement uncertainty (the coupling through the air between the test equipment and the device is less repeatable), and measurement time (often the measurement must be repeated at many different incident angles to the antenna). When moving to THz band frequencies the chamber size may be reduced, but the measurement uncertainties become more demanding due to the noise floor and power limitations discussed above. So careful attention is now being paid to OTA measurement methods and uncertainties, so that test environments suitable for 6G and THz bands can be implemented.
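
Whether the chamber can actually shrink depends on the far-field distance of the device under test. A common rule of thumb is the Fraunhofer distance 2D²/λ, where D is the largest antenna dimension. The sketch below, using hypothetical antenna sizes and function names, shows that this distance falls if the antenna shrinks in proportion to the wavelength, but grows if a physically large aperture is kept at THz frequencies.

```python
C = 299_792_458.0  # speed of light in m/s

def far_field_distance_m(aperture_m, freq_hz):
    """Fraunhofer far-field distance 2 * D**2 / wavelength."""
    wavelength = C / freq_hz
    return 2 * aperture_m ** 2 / wavelength

# Case 1: hypothetical antennas of the same electrical size (5 wavelengths across)
for f in (28e9, 300e9):
    d_ant = 5 * C / f
    print(f"{f / 1e9:4.0f} GHz, D = {d_ant * 1e3:5.1f} mm: "
          f"far field beyond {far_field_distance_m(d_ant, f):5.2f} m")

# Case 2: the same hypothetical 5 cm physical aperture at both frequencies
for f in (28e9, 300e9):
    print(f"{f / 1e9:4.0f} GHz, D =  50.0 mm: "
          f"far field beyond {far_field_distance_m(0.05, f):5.2f} m")
```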

Summary

The expected requirements for higher data rates (and higher data capacity) in a wireless cell are part of the key drivers for beyond 5G and 6G technology research. These requirements can be met with either a wider channel bandwidth (B), or an improved channel Signal to Noise Ratio (SNR). It is seen from Shannon’s equation that increasing B gives a greater return than increasing SNR, although both are relevant and of interest.

Due to the heavy use of existing frequency bands, there is a strong interest to use higher frequencies to enable more bandwidth. This is generating the interest to move to beyond 100 GHz carrier frequencies and to the Terahertz domain, where higher bandwidths (e.g. 10 GHz or more of bandwidth) can be found and could become available for commercial communications systems. The reason that these bands have not previously been used for commercial wireless systems is mainly due to propagation limits (high attenuation of signals) and cost/complexity/efficiency of semiconductor technology to implement circuits at these higher frequencies.

This requirement, together with existing technology/implementation restrictions, is now driving research into the use of higher frequency bands (e.g. in the region of 100-400 GHz) and research activities in the following key topic areas:

- Channel sounding and propagation measurements, to characterise and model the propagation of wireless transmission links and to evaluate candidate access schemes such as

- Advanced MIMO systems, to add channel capacity by using multiple spatial channels

- Error coding schemes to improve efficiency and approach closer to Shannon limits of SNR

- Advanced beamforming and reflector surfaces (meta-surfaces) to enable narrow beam signals to be used for high gain directional links

- Device and semiconductor technology to give lower shot noise and high fc, and lower phase noise

- Semiconductor and packaging technology to give lower loss transmit modules, higher power efficiency and high output power at the higher frequencies

- Technology and packaging for integrated antenna systems suitable for both Cell Site and User equipment

In general, it is seen that there are many implementation challenges in using the frequency range 100-400 GHz. For frequencies below 100 GHz then existing RF semiconductor devices can implement the technology with acceptable size/cost/efficiency. Above 10 THz then there are optical device technologies which can also implement the required functions in an acceptable way. Currently there is this ‘Terahertz gap’, spanning the range 100 GHz to 10 THz, where the cross-over between optical/photonics and RF/electronics technologies occurs and where the new device implementation technology is being developed for commercial solutions.

In parallel, the use of AI/ML is being investigated to enhance the performance of algorithms that are used in many of the communications systems functions. This includes the areas of channel coding and error correction, MIMO, beamforming, and resource scheduling.

All the above technology themes and challenges are now being investigated by research teams and projects across the world. The results will feed analysis and proposals into the standards-making processes and Standards Developing Organisations (SDOs) such as 3GPP, to enable the selection of technologies and waveforms for the Beyond 5G and 6G networks. Not only the theoretical capability, but also the practical implications and the available technology for affordable and suitable commercial solutions, are critical points in selecting the technology to be included in the standards for next generation cellular communications systems.