History

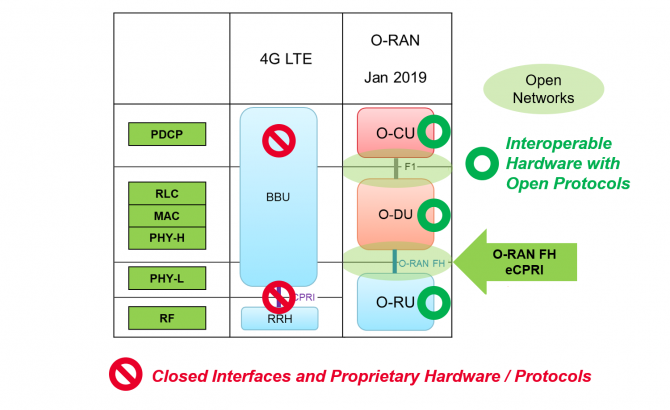

In 2G and 3G, the mobile architectures had controllers that were responsible for RAN orchestration and management. With 4G, overall network architecture became flatter and the expectation was that, to enable optimal subscriber experience, base stations would use the X2 interface to communicate with each other to handle resource allocation. This created the proverbial vendor lock-in as different RAN vendors had their own flavor of X2, and it became difficult for an MNO to have more than one RAN vendor in a particular location. The O-RAN Alliance went back to the controller concept to enable best-of-breed Open RAN.

Why

Because many 5G experiences require low latency, 5G specifications such as Control and User Plane Separation (CUPS), functional RAN splits, and network slicing require advanced RAN virtualization combined with SDN. This combination of virtualization (NFV and containers) and SDN is necessary to enable configuration, optimization and control of the RAN infrastructure at the edge before any aggregation points. This is how the RAN Intelligent Controller (RIC) for Open RAN was born: to enable eNB/gNB functionalities as xApps on northbound interfaces. Applications like mobility management, admission control, and interference management are available as apps on the controller, which enforces network policies via a southbound interface toward the radios. The RIC provides advanced control functionality, which delivers increased efficiency and better radio resource management. These control functionalities leverage analytics and data-driven approaches, including advanced ML/AI tools, to improve resource management capabilities.

The separation of functionalities on southbound and northbound interfaces enables more efficient and cost-effective radio resource management for real-time and non-real-time functionalities as the RIC customizes network optimization for each network environment and use case.

Virtualization (NFV or containers) creates software app infrastructure and a cloud-native environment for the RIC, and SDN enables those apps to orchestrate and manage networks to deliver network automation for ease of deployment.

Though the RIC was originally defined for 5G Open RAN only, the industry has realized that, for network modernization scenarios with Open RAN, the RIC needs to support 2G, 3G, and 4G Open RAN in addition to 5G.

The main takeaway: RIC is a key element to enable best-of-breed Open RAN to support interoperability across different hardware (RU, servers) and software (DU/CU) components, as well as ideal resource optimization for the best subscriber QoS.

What

There are 4 groups in the O-RAN Alliance that help define RIC architecture, real-time and non-real-time functionality, what interface to use and how the elements are supposed to work with each other.

Source: O-RAN Alliance

Working Group 1 looks after the overall use cases and architecture, covering not only the architecture itself but also coordination across all of the working groups. Working Group 2 is responsible for the Non-Real-Time RAN Intelligent Controller and the A1 interface. Its primary goal is for the Non-RT RIC to support non-real-time intelligent radio resource management, higher-layer procedure optimization, and policy optimization in the RAN, and to provide AI/ML models to the Near-RT RIC. Working Group 3 is responsible for the Near-Real-Time RIC and the E2 interface. Its focus is to define an architecture based on the Near-Real-Time RAN Intelligent Controller (RIC), which enables near-real-time control and optimization of RAN elements and resources via fine-grained data collection and actions over the E2 interface. Working Group 5 defines the open F1/W1/E1/X2/Xn interfaces to provide fully operable multi-vendor profile specifications that are compliant with 3GPP specifications.

The RAN Intelligent Controller consists of a Non-Real-Time controller (supporting tasks that tolerate more than 1 s of latency) and a Near-Real-Time controller (latency of less than 1 s). Non-RT functions include service and policy management, RAN analytics, and model training for the Near-RT RIC.
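As a rough illustration of that split, the sketch below routes a task to one of the two controllers based on its latency budget. The function and enum names are hypothetical, and the handling of a budget of exactly 1 s (sent to the Near-RT RIC here) is an assumption, since the text only gives "more than" and "less than" 1 s.

```python
from enum import Enum


class ControlLoop(Enum):
    NON_RT = "Non-RT RIC"
    NEAR_RT = "Near-RT RIC"


def assign_control_loop(latency_budget_s: float) -> ControlLoop:
    """Pick a controller from a task's latency budget in seconds (illustrative only)."""
    if latency_budget_s <= 0:
        raise ValueError("latency budget must be positive")
    # Tasks that tolerate more than 1 s go to the Non-RT RIC.
    # Assumption: a budget of exactly 1 s is treated as near-real-time.
    if latency_budget_s > 1.0:
        return ControlLoop.NON_RT
    return ControlLoop.NEAR_RT


if __name__ == "__main__":
    # Hypothetical budgets chosen only to exercise both branches.
    for task, budget in [("policy management", 60.0), ("handover control", 0.05)]:
        print(task, "->", assign_control_loop(budget).value)
```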

Near Real-Time RAN Intelligent Controller (Near-RT RIC) is a near-real-time, microservice-based software platform for hosting microservice-based applications called xApps. The Near-RT RIC software platform provides xApps with cloud-based infrastructure for controlling a distributed collection of RAN infrastructure (eNB, gNB, CU, DU) in an area via the O-RAN Alliance’s E2 protocol (“southbound”). As part of this software infrastructure, it also provides “northbound” interfaces for operators: the A1 and O1 interfaces to the Non-RT RIC for the management and optimization of the RAN. Self-optimization handles the necessary optimization-related tasks across different RANs, using available RAN data from all RAN types (macros, Massive MIMO, small cells). This improves user experience and increases network resource utilization, which is key for a consistent experience on data-intensive 5G networks.

Source: O-RAN Alliance

The Near-RT RIC hosts one or more xApps that use the E2 interface to collect near-real-time information (on a UE basis or a cell basis). The Near-RT RIC's control over the E2 nodes is steered via the policies and the data provided via A1 from the Non-RT RIC. The RRM functional allocation between the Near-RT RIC and the E2 node is subject to the capability of the E2 node and is controlled by the Near-RT RIC. For a function exposed in the E2 Service Model, the Near-RT RIC may monitor, suspend/stop, override or control the node via Non-RT RIC enabled policies. In the event of a Near-RT RIC failure, the E2 node will still be able to provide services, but there may be an outage for certain value-added services that can only be provided using the Near-RT RIC. The O-RAN Alliance has a very active wiki page where it posts specs and helpful tips for developers and operators that want to deploy Near-RT RIC.
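The interaction described above can be sketched as a toy xApp that receives policy guidance (standing in for A1) and per-cell reports (standing in for E2), then decides whether to issue a control action. This is a minimal sketch, not the O-RAN API: every class, field, and action name here is hypothetical, and the PRB-utilization threshold is an assumed example of a policy parameter.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class A1Policy:
    """Policy guidance from the Non-RT RIC (hypothetical fields)."""
    max_prb_utilization: float  # fraction in [0, 1] above which the xApp acts


@dataclass
class E2Indication:
    """Near-real-time, per-cell report from an E2 node (hypothetical fields)."""
    cell_id: str
    prb_utilization: float


@dataclass
class ControlAction:
    cell_id: str
    action: str


class AdmissionControlXApp:
    """Toy xApp: monitors E2 reports and acts only under an A1 policy."""

    def __init__(self) -> None:
        self.policy: Optional[A1Policy] = None

    def on_a1_policy(self, policy: A1Policy) -> None:
        self.policy = policy

    def on_e2_indication(self, ind: E2Indication) -> Optional[ControlAction]:
        # Without a policy the xApp only monitors; the E2 node keeps
        # serving traffic on its own, as in the failure case in the text.
        if self.policy is None:
            return None
        if ind.prb_utilization > self.policy.max_prb_utilization:
            return ControlAction(ind.cell_id, "restrict_new_admissions")
        return None
```

Keeping the policy separate from the indication handler mirrors the text: the Non-RT RIC steers behavior, while the xApp reacts to near-real-time data.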

Non-Real-Time RAN Intelligent Controller (Non-RT RIC) functionality includes configuration management, device management, fault management, performance management, and lifecycle management for all network elements in the network. It is similar to Element Management (EMS) and Analytics and Reporting functionalities in legacy networks. All new radio units are self-configured by the Non-RT RIC, reducing the need for manual intervention, which will be key for 5G deployments of Massive MIMO and small cells for densification. By providing timely insights into network operations, MNOs use Non-RT RIC to better understand and, as a result, better optimize the network by applying pre-determined service and policy parameters. Its functionality is internal to the SMO in the O-RAN architecture that provides the A1 interface to the Near-Real Time RIC. The primary goal of Non-RT RIC is to support intelligent RAN optimization by providing policy-based guidance, model management and enrichment information to the near-RT RIC function so that the RAN can be optimized. Non-RT RIC can use data analytics and AI/ML training/inference to determine the RAN optimization actions for which it can leverage SMO services such as data collection and provisioning services of the O-RAN nodes.

Trained models and real-time control functions produced in the Non-RT RIC are distributed to the Near-RT RIC for runtime execution. Network slicing, security and role-based access control, and RAN sharing are key aspects that are enabled by the combined controller functions, real-time and non-real-time, across the network.

The main takeaway: Near-RT RIC is responsible for creating a software platform for a set of xApps for the RAN; non-RT RIC provides configuration, management and analytics functionality. For Open RAN deployments to be successful, both functions need to work together.

How

The overall RIC architecture defined by O-RAN consists of four functional software elements: the DU software function, the multi-RAT CU protocol stack, the near-real-time RIC itself, and the orchestration/NMS layer with the Non-Real-Time RIC. They are all deployed as VNFs or containers to distribute capacity across multiple network elements with security isolation and scalable resource allocation. They interact with RU hardware to make it run more efficiently and to optimize it in real time as part of the RAN cluster, delivering a better network experience to end users.

Source: O-RAN Alliance

An A1 interface is used between the Orchestration/NMS layer with non-RT RIC and eNB/gNB containing near-RT RIC. Network management applications in non-RT RIC receive and act on the data from the DU and CU in a standardized format over the A1 Interface. AI-enabled policies and ML-based models generate messages in non-RT RIC and are conveyed to the near-RT RIC.
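To make the idea of a policy message conveyed from the non-RT RIC to the near-RT RIC more concrete, here is a minimal sketch that builds and validates one. The JSON encoding, the field names (`policy_id`, `scope`, `statement`), and the throughput target are all assumptions made for illustration; they are not taken from the A1 specification.

```python
import json


def build_policy_message(policy_id: str, cell_id: str,
                         target_throughput_mbps: float) -> str:
    """Serialize a hypothetical policy on the non-RT RIC side."""
    if target_throughput_mbps <= 0:
        raise ValueError("target throughput must be positive")
    message = {
        "policy_id": policy_id,
        "scope": {"cell_id": cell_id},  # where the policy applies
        "statement": {"target_throughput_mbps": target_throughput_mbps},
    }
    return json.dumps(message, sort_keys=True)


def parse_policy_message(raw: str) -> dict:
    """Decode and minimally validate the message on the near-RT RIC side."""
    message = json.loads(raw)
    for key in ("policy_id", "scope", "statement"):
        if key not in message:
            raise ValueError(f"missing field: {key}")
    return message


if __name__ == "__main__":
    raw = build_policy_message("qos-policy-1", "cell-42", 50.0)
    print(parse_policy_message(raw)["statement"])
```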

The control loops run in parallel and, depending on the use case, may or may not have any interaction with each other. The use cases for the Non-RT RIC and Near-RT RIC control loops are fully defined by O-RAN, while for the O-DU scheduler control loop – responsible for radio scheduling, HARQ, beamforming etc. – only the relevant interactions with other O-RAN nodes or functions are defined to ensure the system acts as a whole.

Multi-RAT CU protocol stack function supports protocol processing and is deployed as a VNF or a CNF. It is implemented based on the control commands from the near-RT RIC module. The current architecture uses F1/E1/X2/Xn interfaces provided by 3GPP. These interfaces can be enhanced to support multi-vendor RANs, RUs, DUs and CUs.

The Near-RT RIC leverages embedded intelligence and is responsible for per-UE controlled load-balancing, RB management, interference detection and mitigation. This provides QoS management, connectivity management and seamless handover control. Deployed as a VNF, a set of VMs, or CNF, it becomes a scalable platform to on-board third-party control applications. It leverages a Radio-Network Information Base (R-NIB) database which captures the near real-time state of the underlying network and feeds RAN data to train the AI/ML models, which are then fed to the Near-RT RIC to facilitate radio resource management for subscriber. Near-RT RIC interacts with Non-RT RIC via the A1 interface to receive the trained models and execute them to improve the network conditions.
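A toy version of the R-NIB idea might look like the sketch below: an in-memory store holding the latest load per cell, plus a simple load-balancing rule that proposes a handover target when the serving cell is congested. The class, the function, and the 0.8 threshold are hypothetical, and a real Near-RT RIC would act on trained models rather than a fixed rule.

```python
from typing import Dict, Iterable, Optional


class RNib:
    """Toy Radio-Network Information Base: latest load per cell."""

    def __init__(self) -> None:
        self._load: Dict[str, float] = {}

    def update(self, cell_id: str, load: float) -> None:
        if not 0.0 <= load <= 1.0:
            raise ValueError("load must be a fraction in [0, 1]")
        self._load[cell_id] = load

    def load_of(self, cell_id: str) -> Optional[float]:
        return self._load.get(cell_id)

    def least_loaded(self, candidates: Iterable[str]) -> Optional[str]:
        known = [c for c in candidates if c in self._load]
        return min(known, key=lambda c: self._load[c]) if known else None


def pick_handover_target(rnib: RNib, serving_cell: str,
                         neighbors: Iterable[str],
                         threshold: float = 0.8) -> Optional[str]:
    """Suggest a less-loaded neighbor if the serving cell exceeds the threshold."""
    serving_load = rnib.load_of(serving_cell)
    if serving_load is None or serving_load <= threshold:
        return None  # unknown or healthy cell: no action
    target = rnib.least_loaded(neighbors)
    if target is None or rnib.load_of(target) >= serving_load:
        return None  # no neighbor would improve the situation
    return target
```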

The Near-RT RIC can be deployed in a centralized or distributed model, depending on network topology.

Source: O-RAN Alliance

Bringing it all together: Near-RT RIC provides a software platform for xApps for RAN management and optimization. A large amount of network and subscriber data, including counters, RAN and network statistics, and failure information, is available from the L1/L2/L3 protocol stacks; it is collected and used for data features and models in Non-RT RIC. Non-RT RIC acts as a configuration layer to DU and CU software as well as via the E2 standard interface. These features and models can be learned with AI and/or abstracted to enable intelligent management and control of the RAN with Near-RT RIC. Example models include, but are not limited to, spectrum utilization patterns, network traffic patterns, user mobility and handover patterns, service type patterns along with the expected quality of service (QoS) prediction patterns, and RAN parameter configurations to be reused, abstracted or learned in Near-RT RIC from the data collected by Near-RT RIC.

This abstracted or learned information is then combined with additional network-wide context and policies in Near-RT RIC to enable efficient network operations via Near-RT RIC.

The main takeaway: Non-RT RIC feeds data collected from RAN elements into Near-RT RIC and provides element management and reporting. Near-RT RIC makes configuration and optimization decisions for multi-vendor RAN and uses AI to anticipate some of the necessary changes.

When

The O-RAN reference architecture enables not only next generation RAN infrastructures, but also the best of breed RAN infrastructures. The architecture is based on well-defined, standardized interfaces that are compatible with 3GPP to enable an open, interoperable RAN. RIC functionality delivers intelligence into the Open RAN network with near-RT RIC functionality providing real-time optimization for mobility and handover management, and non-RT RIC providing not only visibility into the network, but also AI-based feeds and recommendations to near-RT RIC, working together to deliver optimal network performance for optimal subscriber experience.

Recently, AT&T and Nokia tested the RAN E2 interface and xApp management and control, collected live network data using the Measurement Campaign xApp, tested neighbor relation management using the Automated Neighbor Relation (ANR) xApp, and tested RAN control via the Admission Control xApp, all over the live commercial network.

Source: Nokia

AT&T and Nokia ran a series of xApps at the edge of AT&T’s live 5G mmWave network on an Akraino-based Open Cloud Platform. The xApps used in the trial were designed to improve spectrum efficiency, as well as offer geographical and use case-based customization and rapid feature onboarding in the RAN.

AT&T and Nokia are planning to officially release the RIC into open source, so that other companies and developers can help develop the RIC code.

Parallel Wireless is another vendor that has developed both a near-RT and a non-RT RIC. What makes their approach different is that the controller works not only for 5G, but also for legacy Gs: 2G, 3G, and 4G. Their xApps or microservices are virtualized functions of BSC for 2G, RNC for 3G, and X2 gateway for 4G, among others.

Source: Parallel Wireless

As a result of having 2G, 3G, 4G, and 5G related xApps, 5G-like features can be delivered today to 2G, 3G, and 4G networks utilizing this RIC, including: 1. Ultra-low latency and high reliability for coverage or capacity use cases. 2. Ultra-high throughput for consumer applications such as real-time gaming. 3. Scaling from millions to billions of transactions, with voice and data handling that seamlessly scales up from gigabytes to petabytes in real time, with a consistent end-user experience for all types of traffic. The solution is a pre-standard near-real-time RAN Intelligent Controller (RIC) that will adapt O-RAN open interfaces with the required enhancements and can be upgraded to them via a software upgrade. This will enable real-time radio resource management capabilities to be delivered as applications on the platform.

Main takeaway: The RIC platform provides a set of functions via xApps and using pre-defined interfaces that allow for increased optimizations in Near-RT RIC through policy-driven, closed loop automation, which leads to faster and more flexible service deployments and programmability within the RAN. It also helps strengthen a multi-vendor open ecosystem of interoperable components for a disaggregated and truly open RAN.
