A wireless router is the central piece of gear for a residential network. It manages network traffic between the Internet (via the modem) and a wide variety of client devices, both wired and wireless. Many of today’s consumer routers are loaded with features, incorporating wireless connectivity, switching, I/O for external storage devices as well as comprehensive security functionality. A wired switch, often taking the form of four gigabit Ethernet ports on the back of most routers, is largely standard these days. A network switch negotiates network traffic, sending data to a specific device, whereas network hubs simply retransmit data to all of the recipients. Although dedicated switches can be added to your network, most home networks don’t incorporate them as standalone appliances. Then there’s the wireless access point capability. Most wireless router models support dual bands, communicating over 2.4 and 5GHz and many are also able to connect to several networks simultaneously.

Part of trusting our always-on Internet connections is the belief that private information is protected at the router, which incorporates features to limit home network access. These security features can include a firewall, parental controls, access scheduling, guest networks and even a demilitarized zone (DMZ), a term borrowed from the military concept of a buffer zone between neighboring countries. The DMZ, also called a perimeter network, is a subnetwork where vulnerable processes like mail, Web and FTP servers can be placed so that, if one of them is breached, the rest of the network isn’t compromised. The firewall is a core component in today’s story. In fact, what differentiates a wireless router from a dedicated switch or wireless access point is the firewall. Although Windows has its own software-based firewall, the router’s hardware firewall forms the first line of defense in keeping malicious content off the home network. The router’s firewall works by making sure packets were actually requested by the user before allowing them to pass through to the local network.

Finally, you have peripheral connectivity like USB and eSATA. These ports make it possible to share external hard drives or even printers. They offer a convenient way to access networked storage without the need for a dedicated PC with a shared disk or NAS running 24/7.

Some Internet service providers (ISPs) integrate routers into their modems, yielding an “all-in-one” device. This is done to simplify setup, so the ISP has less hardware to support. It can also be advantageous to space-constrained customers. However, in general, these integrated routers do not get firmware updates as frequently, and they’re often not as robust as stand-alone routers. An example of a combo modem/router is Netgear’s Nighthawk AC1900 Wi-Fi cable modem router. In addition to its 802.11ac wireless connectivity, it offers a DOCSIS 3.0 24 x 8 broadband cable modem.

DOCSIS stands for “data over cable service interface specifications,” and version 3.0 is the current cable modem spec. DOCSIS 1.0 and 2.0 define a single channel for data transfers, while DOCSIS 3.0 specifies the use of multiple channels to allow for faster speeds. Current DOCSIS 3.0 modems commonly use 8, 12 or 16 channels, with 24-channel modems also available. Each channel offers a theoretical maximum download speed of 38 Mb/s and a maximum upload speed of 27 Mb/s. The standard’s next update, DOCSIS 3.1, promises to offer download speeds of up to 10 Gb/s and upload speeds of up to 1 Gb/s.
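As a rough illustration of the channel arithmetic, the sketch below multiplies the per-channel figures quoted above by a modem's channel counts. The function name is made up for this example, and the results are theoretical ceilings only; protocol overhead and the service tier your ISP provisions will keep real-world speeds lower.

```python
# Theoretical per-channel rates quoted above for DOCSIS 3.0 (Mb/s).
DOWN_PER_CHANNEL_MBPS = 38
UP_PER_CHANNEL_MBPS = 27


def docsis3_theoretical_max(down_channels, up_channels):
    """Return (download, upload) ceilings in Mb/s for a multi-channel modem."""
    return (down_channels * DOWN_PER_CHANNEL_MBPS,
            up_channels * UP_PER_CHANNEL_MBPS)


# A 24 x 8 modem uses 24 downstream and 8 upstream channels.
down, up = docsis3_theoretical_max(24, 8)
print(f"24 x 8 modem: {down} Mb/s down, {up} Mb/s up")
```

For the 24 x 8 modem mentioned earlier, that works out to 912 Mb/s down and 216 Mb/s up on paper.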


Wi-Fi Standards

The oldest wireless routers supported 802.11b, which worked on the 2.4GHz band and topped out at 11 Mb/s. This early Wi-Fi standard was approved in 1999, hence the name 802.11b-1999 (later it was shortened to 802.11b).

Another early Wi-Fi standard was 802.11a, also ratified by the IEEE in 1999. It operated on the less congested 5GHz band and maxed out at 54 Mb/s, although real-world throughput was closer to half that number. Given a shorter wavelength than 2.4GHz, the range of 802.11a was shorter, which may have contributed to less uptake. While 802.11a enjoyed popularity in some enterprise applications, it was largely eclipsed by the more pervasive 802.11b in homes and small businesses. Notably, 802.11a’s 5GHz band became part of later standards.

Eventually, 802.11b was replaced by 802.11g on the 2.4GHz band, upping throughput to 54 Mb/s. It all makes for an interesting history lesson, but if your wireless equipment is old enough for that information to be relevant, it’s time to consider an upgrade.

802.11n

In the fall of 2009, 802.11n was ratified, paving the way for one device to operate on both the 2.4GHz and 5GHz bands. Speeds topped out at 600 Mb/s. With N600 and N900 gear, two separate service set identifiers (SSIDs) were transmitted—one on 2.4GHz and the other on 5GHz—while less expensive N150 and N300 routers cut costs by transmitting only on the 2.4GHz band.

Wireless N networking introduced an important advancement called MIMO, an acronym for “multiple input/multiple output.” This technology divides the data stream between multiple antennas. We’ll go into more depth on MIMO shortly.

If you’re satisfied with the performance of your N wireless gear, then hold onto it for now. After all, it does still exceed the maximum throughput offered by most ISPs. Here are some examples of available 802.11n product speeds:

| Type | 2.4GHz (Mb/s) | 5GHz (Mb/s) |
| --- | --- | --- |
| N150 | 150 | N/A |
| N300 | 300 | N/A |
| N600 | 300 | 300 |
| N900 | 450 | 450 |

802.11ac

The 802.11ac standard, also known as Wireless AC, was released in January 2014. It broadcasts and receives on both the 2.4GHz and 5GHz bands, but the 2.4GHz frequency on an 802.11ac router is really a carryover of 802.11n. That older standard maxed out at 150 Mb/s on each spatial stream, with up to four simultaneous streams, for a total throughput of 600 Mb/s.

In 802.11ac, MIMO was also refined with increased channel bandwidth and support for up to eight spatial streams. Beamforming was introduced with Wireless N gear, but it was proprietary; with AC, it was standardized to work across different manufacturers’ products. Beamforming is a technology designed to optimize the transmission of Wi-Fi around obstacles by using the antennas to direct and focus the transmission to where it is needed.

With 802.11ac firmly established as the current Wi-Fi standard, enthusiasts shopping for routers should consider one of these devices, as they offer a host of improvements over N gear. Here are some examples of available 802.11ac product speeds:

| Type | 2.4GHz (Mb/s) | 5GHz (Mb/s) |
| --- | --- | --- |
| AC600 | 150 | 433 |
| AC750 | 300 | 433 |
| AC1000 | 300 | 650 |
| AC1200 | 300 | 867 |
| AC1600 | 300 | 1300 |
| AC1750 | 450 | 1300 |
| AC1900 | 600 | 1300 |
| AC3200 | 600 | 1300, 1300 |

The maximum throughput achieved is the same on AC1900 and AC3200 for both the 2.4GHz and 5GHz bands. The difference is that AC3200 can transmit two simultaneous 5GHz networks to achieve such a high total throughput.
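To make the naming convention concrete, here is a small sketch that adds up the per-band figures from the tables in this article. The dictionary and function names are illustrative, and the point is only that the class label is a sum across bands, not a speed any single client will see.

```python
# Per-band maximum link rates (Mb/s) taken from the tables in this article.
ROUTER_CLASSES = {
    "N600": [300, 300],
    "N900": [450, 450],
    "AC1200": [300, 867],
    "AC1750": [450, 1300],
    "AC1900": [600, 1300],
    "AC3200": [600, 1300, 1300],  # one 2.4GHz network plus two 5GHz networks
}


def combined_rate(band_rates):
    """Add up the per-band rates behind a router's class label."""
    return sum(band_rates)


for name, rates in ROUTER_CLASSES.items():
    total = combined_rate(rates)
    print(f"{name}: {' + '.join(map(str, rates))} = {total} Mb/s")
```

Note that some labels are rounded: the AC1200 figures add up to 1167 Mb/s rather than 1200.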

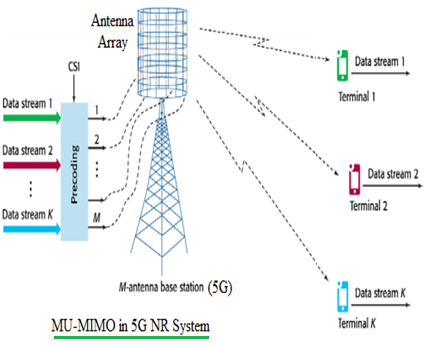

The latest wireless standard with products currently hitting the market is 802.11ac Wave 2. It implements multi-user, multiple-input, multiple-output, popularly referred to as MU-MIMO. In broad terms, this technology provides dedicated bandwidth to more devices than was previously possible.

Wi-Fi Features

SU-MIMO And MU-MIMO

Multiple-input and multiple-output (MIMO), first seen on 802.11n devices, takes advantage of a radio phenomenon known as multipath propagation, which increases the range and speed of Wi-Fi. Multipath propagation is based on the ability of a radio signal to take slightly different pathways between the router and client, including bouncing off intervening objects as well as floors and ceilings. With multiple antennas on both the router and the client, and provided they both support MIMO, antenna diversity can combine simultaneous data streams to increase throughput.

When MIMO was originally implemented, it was SU-MIMO, designed for a Single User. In SU-MIMO, all of the router’s bandwidth is devoted to a single client, maximizing throughput to that one device. While this is certainly useful, today’s routers communicate with multiple clients at one time, limiting SU-MIMO’s utility.

The next step in MIMO’s evolution is MU-MIMO, which stands for Multi-User MIMO. Whereas SU-MIMO was restricted to a single client, MU-MIMO can now extend the benefit to up to four. The first MU-MIMO router released, the Linksys EA8500, features four external antennas that facilitate MU-MIMO technology, allowing the router to provide four simultaneous continuous data streams to clients.

Before MU-MIMO, a Wi-Fi network was the equivalent of a wired network connected through a hub. This was inefficient; a lot of bandwidth was wasted when data was sent to clients that didn’t need it. With MU-MIMO, the wireless network becomes the equivalent of a wired network controlled by a switch. With data transmission able to occur simultaneously across multiple channels, it is significantly faster, and the next client can “talk” sooner. Therefore, just as the transition from hub to switch was a huge leap forward for wired networks, so will MU-MIMO be for wireless technology.

Beamforming

Beamforming was originally implemented in 802.11n, but was not standardized between routers and clients; it essentially did not work between different manufacturers’ products. This was rectified with 802.11ac, and now beamforming works across different manufacturers’ gear.

Rather than having the router transmit its Wi-Fi signal in all directions, beamforming allows the router to focus the signal where it is needed, increasing its strength. Using light as an analogy, beamforming takes the camping lantern and turns it into a flashlight that focuses its beam. In some cases, the Wi-Fi client can also support beamforming to focus the signal of the client back to the router.

While beamforming is implemented in 802.11ac, manufacturers are still allowed to innovate in their own way. For example, Netgear offers Beamforming+ in some of its devices, which enhances throughput and range between the router and client when they are both Netgear products and support Beamforming+.

Other Wi-Fi Features

When folks visit your house, they often want to jump on your wireless network, whether to save on cellular data costs or to connect a notebook/tablet. Rather than hand out your Wi-Fi password, try configuring a Guest Network. This facilitates access to network bandwidth, while keeping guests off of other networked resources. In a way, the Guest Network is a security feature, and feature-rich routers offer this option.

Another feature to look for is QoS, which stands for Quality of Service. This capability serves to prioritize network traffic from the router to a client. It’s particularly useful in situations where a continuous data stream is required; for example, with services like Netflix or multi-player games. In fact, routers advertised as gaming-optimized typically include provisions for QoS, though you can find the functionality on non-gaming routers as well.
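One simple way to picture prioritization is a strict-priority queue, sketched below. This is a toy model under stated assumptions, not how any particular router implements QoS: the traffic classes, their ranking and the class name `PriorityScheduler` are all invented for the example, and real devices use a variety of scheduling schemes.

```python
import heapq
import itertools

# Hypothetical traffic classes; a lower number is served first.
PRIORITY = {"gaming": 0, "video": 1, "bulk": 2}


class PriorityScheduler:
    """Toy strict-priority queue: always sends the most urgent packet next."""

    def __init__(self):
        self._heap = []
        self._order = itertools.count()  # tie-breaker keeps arrival order

    def enqueue(self, traffic_class, packet):
        entry = (PRIORITY[traffic_class], next(self._order), packet)
        heapq.heappush(self._heap, entry)

    def dequeue(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


queue = PriorityScheduler()
queue.enqueue("bulk", "backup chunk 1")
queue.enqueue("video", "movie frame 1")
queue.enqueue("gaming", "player input 1")
queue.enqueue("bulk", "backup chunk 2")

# Packets leave in priority order, not arrival order.
while queue:
    print(queue.dequeue())
```

The game packet goes out first even though it arrived third, while the two backup chunks keep their original order relative to each other.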

Another option is Parental Control, which allows you to act as an administrator for the network, controlling your child’s Internet access. The limits can include blocking certain websites, as well as shutting down network access at bedtime.

Wireless Router Security

There are two types of firewalls: hardware and software. Microsoft’s Windows operating system has a software firewall built into it. Third-party firewalls can be installed as well. Unfortunately, these only protect the device they’re installed on. While they’re an essential part of a Windows-based PC, the rest of your network is otherwise exposed.

An essential function of the router is its hardware firewall, known as a network perimeter firewall. The router serves to block incoming traffic that was not requested, thereby operating as an initial line of defense. In an enterprise setup, the hardware firewall is a dedicated box; in a residential router, it’s integrated.

A router is also designed to look for the address source in packets traveling over the network, relating them to address requests. When the packets aren’t requested, the firewall rejects them. In addition, a router can apply filtering policies, using rules to allow and restrict packets before they traverse the home network. The rules consider the source of a packet’s IP address and its destination. Moreover, packets are matched to the port they should be on. This is all done at the router to keep unwanted data off the home network.
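The request-matching idea can be sketched in a few lines. The example below is a deliberately simplified model with invented names (`Packet`, `ToyFirewall`); it ignores address translation, timeouts and protocol details that a real router firmware has to handle, and the IP addresses are documentation placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int


class ToyFirewall:
    """Allows inbound packets only if they answer a recorded outbound request."""

    def __init__(self, blocked_ips=()):
        self.blocked_ips = set(blocked_ips)  # simple address-based rule
        self.expected_replies = set()        # connections opened from inside

    def outbound(self, pkt):
        """Record an outgoing request so its reply can be recognized later."""
        if pkt.dst_ip in self.blocked_ips:
            return False
        # The reply will arrive with source and destination swapped.
        self.expected_replies.add(
            (pkt.dst_ip, pkt.dst_port, pkt.src_ip, pkt.src_port))
        return True

    def inbound(self, pkt):
        """Accept only packets that match a request from the home network."""
        if pkt.src_ip in self.blocked_ips:
            return False
        key = (pkt.src_ip, pkt.src_port, pkt.dst_ip, pkt.dst_port)
        return key in self.expected_replies


fw = ToyFirewall(blocked_ips={"203.0.113.66"})
fw.outbound(Packet("192.168.1.20", 50000, "198.51.100.7", 443))

reply = Packet("198.51.100.7", 443, "192.168.1.20", 50000)
unsolicited = Packet("198.51.100.9", 443, "192.168.1.20", 50000)
print(fw.inbound(reply))        # answers a request, so it is allowed
print(fw.inbound(unsolicited))  # nobody asked for it, so it is rejected
```

Both the source/destination addresses and the ports have to line up with an earlier request before a packet is let through, which mirrors the matching described above.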

The wireless router is responsible for the Wi-Fi signal’s security, too. There are various protocols for this, including WEP, WPA and WPA2. WEP, which stands for Wired Equivalent Privacy, is the oldest standard, dating back to 1999. It uses 64-bit, and subsequently 128-bit encryption. As a result of its fixed key, WEP is widely considered quite insecure. Back in 2005, the FBI showed how WEP could be broken in minutes using publicly available software.

WEP was supplanted by WPA (Wi-Fi Protected Access) featuring 256-bit encryption. Addressing the significant shortcoming of WEP, a fixed key, WPA’s improvement was based on the Temporal Key Integrity Protocol (TKIP). This security protocol uses a per-packet key system that offers a significant upgrade over WEP. WPA for home routers is implemented as WPA-PSK, which uses a pre-shared key (PSK, better known as the Wi-Fi password that folks tend to lose and forget). While the security of WPA-PSK via TKIP was definitely better than WEP, it also proved vulnerable to attack and is not considered secure.

Introduced in 2004 and mandatory for Wi-Fi certified devices since 2006, WPA2 (Wi-Fi Protected Access 2) is the more robust security specification. Like its predecessor, WPA2 uses a pre-shared key. However, unlike WPA’s TKIP, WPA2 utilizes AES (Advanced Encryption Standard), a standard approved by the NSA for use with top secret information.

Any modern router will support all of these security standards for the purpose of compatibility, as none of them are new, but ideally, you want to configure your router to employ WPA2/AES. There is no WPA3 on the horizon because WPA2 is still considered secure. However, there are published methods for compromising it, so accept that no network is impenetrable.

All of these Wi-Fi security standards rely on your choice of a strong password. It used to be that an eight-character sequence was considered sufficient. But given the compute power available today (particularly from GPUs), even longer passwords are sometimes recommended. Use a combination of numbers, uppercase and lowercase letters, and special characters. The password should also avoid dictionary words or easy substitutions, such as “p@$$word,” or simple additions—for example, “password123” or “passwordabc.”
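To see why both length and character variety matter, the sketch below counts how many candidates an attacker would face for a few password shapes. It assumes every character is chosen at random from the pool, which is the best case; dictionary words and predictable substitutions shrink the real search space dramatically. The helper name `keyspace_bits` is just for this example.

```python
import math
import string

# Character pools an attacker would have to consider.
LOWER = len(string.ascii_lowercase)                 # 26 letters
MIXED = len(string.ascii_letters + string.digits)   # 62 letters and digits
FULL = MIXED + len(string.punctuation)              # 94 with special characters


def keyspace_bits(pool_size, length):
    """Bits of entropy for a randomly chosen password of the given length."""
    return length * math.log2(pool_size)


for label, pool, length in [
    ("8 lowercase letters", LOWER, 8),
    ("8 characters, full pool", FULL, 8),
    ("12 characters, full pool", FULL, 12),
]:
    print(f"{label}: {pool ** length:.2e} candidates, "
          f"about {keyspace_bits(pool, length):.0f} bits")
```

Each extra character multiplies the number of candidates by the size of the pool, so adding length pays off faster than swapping a letter for a symbol.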

While most enthusiasts know to change the router’s Wi-Fi password from its factory default, not everyone knows to change the router’s admin password, thus inviting anyone to come along and manipulate the router’s settings. Use a different password for the Wi-Fi network and router log-in page.

In the event that you lose your password, don’t fret. Simply reset the router to its factory state, reverting the log-in information to its default. Manufacturers have different methods for doing this, but many routers have a physical reset button, usually located on the rear of the device. After resetting, all custom settings are lost, and you’ll need to set a new password.

Wi-Fi Protected Setup (WPS) is another popular feature on higher-end routers. Rather than manually typing in a password, WPS lets you press a button on the router and adapter, triggering a brief discovery period. Another approach is the WPS PIN method, which facilitates discovery through the entry of a short code on either the router or client. It’s vulnerable to brute-force attack, though, so many enthusiasts recommend simply disabling WPS altogether.
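The arithmetic behind that brute-force concern is worth a quick look. The sketch below relies on details from public write-ups of the WPS PIN weakness rather than from this article, so treat them as assumptions: the PIN has eight digits, the last digit is a checksum, and the two halves are confirmed separately.

```python
# Assumptions (from public descriptions of the WPS PIN weakness, not from
# this article): 8-digit PIN, last digit is a checksum, and each half of
# the PIN is verified on its own.
naive_guesses = 10 ** 8   # if all eight digits had to be guessed at once
first_half = 10 ** 4      # digits 1-4 are confirmed separately
second_half = 10 ** 3     # digits 5-7; digit 8 is derived from the others
split_guesses = first_half + second_half

print(f"All at once:  {naive_guesses:,} guesses at most")
print(f"Half by half: {split_guesses:,} guesses at most")
```

Under those assumptions the worst case drops from 100 million guesses to 11,000, which is why disabling the feature is such common advice.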

Software

Web And Mobile Interfaces

Wireless routers are typically controlled through a software interface built into their firmware, which can be accessed through the router’s network address. Through this interface you can enable the router’s features, define the parameters and configure security settings. Routers employ a variety of custom operating environments, though most are Web-based. Some manufacturers do offer smartphone-enabled apps for iOS and Android, too. Here is an example of a software interface for the Netis WF2780, seen on a Windows desktop. While not easy for amateurs to use, it does allow for control over all the settings. Here we can see the Bandwidth Control Configuration in the Advanced Settings.

Routers offer a wide range of features, and each vendor has its own set of unique capabilities. Overall, though, they do share generally similar feature sets, including:

- Quick Setup: For the less experienced user, Quick Setup is quite useful. This gets the device up and running with pre-configured settings, and does not require advanced networking knowledge. Of course, experienced users will want more control.

- Wireless Configuration: This setting allows channel configuration. In some cases, the router’s power can be adjusted, depending on the application. Finally, the RF bandwidth can be selected as well. Analogous settings for 5GHz are available on a separate page.

- Guest Network: The router software will provide the option to set up a separate Guest Network. This has the advantage of allowing visitors to use your Internet, without getting access to the entire network.

- Security: This is where the SSIDs for each of the configured networks, as well as their passwords, can be configured.

- Bandwidth Control: Since there is limited bandwidth, it can be controlled to provide the best experience for all (or at least the one who pays the bills). The amount of bandwidth that any user has, both on the download and upload sides, can be limited so one user does not monopolize all the bandwidth.

- System Tools: Using this collection of tools, the router’s firmware can be upgraded and the time settings specified. This also provides a log of sites visited and stats on bandwidth used.

Here is a screenshot of a mobile app called QRSMobile for Android, which can simplify the setup of a wireless router, in this case the D-Link 820L.

This screenshot shows the smartphone app for the Google OnHub.

Open-Source Firmware

Historically, some of these vendor-provided software interfaces did not allow full control of all possible settings. Out of frustration, a community for open source router firmware development took shape. One popular example of its work is DD-WRT, which can be applied to a significant number of routers, letting you tinker with options in a granular fashion. In fact, some manufacturers even sell routers with DD-WRT installed. The AirStation Extreme AC 1750 is one such model.

Another advantage of open firmware is that you’re not at the mercy of a vendor in between updates. Older products don’t receive much attention, but DD-WRT is a constant work in progress. Other open source firmware projects in this space include OpenWRT and Tomato, but be mindful that not all routers support open firmware.

Hardware

System Board Components

Inside a wireless router is a purpose-built system, complete with a processor, memory, power circuitry and a printed circuit board. These are all proprietary components, with closed specifications, and are not upgradeable.

The above image shows the internals of Netis’ N300 Gaming Router (WF2631). We see the following components:

- Status LEDs that indicate network/router activity

- Heat sink for the processor—these CPUs don’t use much power, and are cooled without a fan

- Antenna leads for the three external antennas to connect to the PCB

- Four Ethernet LAN ports for the home network

- WPS Button

- Ethernet WAN port that connects to a provider’s modem

- Power jack

- Factory reset button

- 10/100BASE-TX transformer modules — these support the RJ45 connectors, which are the Ethernet ports.

- 100 Base-T dual-port through-hole magnetics. These are designed for IEEE802.3u (Ethernet ports).

- Memory chip (DRAM)

Antenna Types

As routers send and receive data across the 2.4 and 5GHz bands, they need antennas. There are multiple antenna choices: external versus internal designs, routers with one antenna and others with several. If a single antenna is good, then more must be better, right? And this is the current trend, with flagship routers like the Nighthawk X6 Tri-Band Wi-Fi Router featuring as many as six antennas, which can each be fine-tuned in terms of positioning to optimize performance. A setup like that facilitates three simultaneous network signals: one 2.4GHz and two 5GHz.

A router with internal antennas might look sleeker, since these designs are built to blend into a living area, but the range and throughput of external antennas are typically superior. External antennas also have the advantages of reaching up to a higher position, operating at a greater distance from the router’s electronics (which reduces interference) and offering some degree of configurability to tune signal transmission. This makes a better argument for function over form.

The more antennas you see on a router, the more transmit and receive radios there are, corresponding to the number of supported spatial streams. For example, a 3×3 router employs three antennas and handles three simultaneous spatial streams. Using current standards, these additional spatial streams account for much of how performance is multiplied. The Netis N300 router, pictured on the left, features three external antennas for better signal strength.
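A quick sketch shows how stream count scales the headline numbers. The 150 Mb/s per-stream figure for 802.11n is quoted earlier in this article; the 802.11ac per-stream figure of roughly 433 Mb/s is inferred here from the 433/867/1300 entries in the speed table, so treat it as an assumption. The function name is illustrative.

```python
# Per-stream peak rates (Mb/s). The 802.11n figure is quoted in this
# article; the 802.11ac figure is inferred from its speed table.
PER_STREAM_MBPS = {
    "802.11n": 150,
    "802.11ac": 1300 / 3,  # about 433.3 Mb/s
}


def link_rate(standard, spatial_streams):
    """Peak link rate in Mb/s for a given number of spatial streams."""
    return round(PER_STREAM_MBPS[standard] * spatial_streams)


for streams in (1, 2, 3):
    print(f"{streams}x{streams}: "
          f"802.11n {link_rate('802.11n', streams)} Mb/s, "
          f"802.11ac {link_rate('802.11ac', streams)} Mb/s")
```

The results line up with the 150/300/450 and 433/867/1300 figures that appear in router class names.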

Ethernet Ports

While the wireless aspect of a wireless router gets most of the attention, a majority also enable wired connectivity. A popular configuration is one WAN port for connecting to an externally-facing modem and four LAN ports for attaching local devices.

The LAN ports top out at either 100 Mb/s or 1 Gb/s, the latter also referred to as gigabit Ethernet or GbE. While older hardware can still be found with 10/100 ports, the faster 10/100/1000 ports are preferred to avoid bottlenecking wired transfer speeds over category 5e or 6 cables. If you have the choice between a physical or wireless connection, go the wired route. It’s more secure and frees up wireless bandwidth for other devices.

While four Ethernet ports are standard on consumer-oriented routers, certain manufacturers are changing things up. For example, the TP-Link/Google OnHub router only has one Ethernet port. This could be the start of a trend toward slimmer profiles at the expense of expansion. The OnHub router, pictured on the right, features a profile designed to be displayed rather than hidden in a closet, but this comes at the expense of external antennas and additional Ethernet ports. Asus’ RT-AC88U goes the other direction, incorporating eight Ethernet ports.

USB Ports

Some routers come with one or two USB ports. It is still common to find second-gen ports capable of speeds of up to 480 Mb/s (60 MB/s). Higher-end models implement USB 3.0, though; they cost more, but the third-gen spec is capable of 5 Gb/s (625 MB/s). The D-Link DIR-820L features a rear-mounted USB port. Also seen are the four LAN ports, as well as the Internet connection input (WAN).

One intended use of USB ports is to connect storage. All of them support flash drives, and some routers also output enough current for external enclosures with mechanical disks. If you don’t need a ton of capacity, you can use a feature like that to create an integrated NAS appliance. In some models, the storage is only accessible over a home network. In other cases, you can reach it remotely.

The other application of USB on a router is shared printing. Networked printers make it easy to consolidate to just one peripheral. Many new printers do come with Wi-Fi controllers built-in. But for those that don’t, it’s easy to run a USB cable from the device to your router and share it across the network. Just keep in mind that you might lose certain features if you hook your printer up to a router. For instance, you might not see warnings about low ink levels or paper jams.

Conclusion

The Future Of Wi-Fi

Wireless routers continue to evolve as Wi-Fi standards get ratified and implemented. One rapidly expanding area is the Connected Home space, with devices like thermostats, fire alarms, front door locks, lights and security cameras all piping in to the Internet. Some of these devices connect directly to the router, while others connect to a hub device—for example, the SmartThings Hub, which then connects to the router.

One upcoming standard is known as 802.11ad, also referred to as WiGig. Actual products based on the technology are just starting to appear. It operates on the 60GHz spectrum, which promises high bandwidth across short distances. Think of it as akin to Bluetooth, with a roughly 10-meter range but performance on steroids. Look for docking stations without wires and 802.11ad as a protocol for linking our smartphones and desktops.

Already used in the enterprise segment, 802.11k and 802.11r are being developed for the consumer market. The home networking industry plans to address the problem of using multiple access points to deal with Wi-Fi dead spots, and the trouble client devices have with hand-offs between multiple APs. 802.11k allows client devices to track where the signal from each AP weakens, and 802.11r brings Fast Basic Service Set Transition (FT) to facilitate authentication with APs. When 802.11k and 802.11r are combined, they will enable a technology known as Seamless Roaming, which will facilitate client handoffs between routers and access points.

Beyond that will be 802.11ah, which is being developed for use on the 900MHz band. It is a low-bandwidth frequency, but is expected to double the range of 2.4GHz transmissions with the added benefit of low power. Its envisioned application is connecting Internet of Things (IoT) devices.

Out on the distant horizon is 802.11ax, which is tentatively expected to roll out in 2019 (although remember that 802.11n and 802.11ac were years late). While the standard is still being worked on, its goal is 10 Gb/s throughput. The 802.11ax standard will focus on increasing speeds to individual devices by slicing up the frequency into smaller segments. This will be done via MIMO-OFDMA, which stands for multiple-input, multiple-output orthogonal frequency-division multiple access, and which will incorporate new standards to pack additional data into the 5GHz data stream.

What To Look For In A Router

Choosing a router can get complicated. You have tons of choices across a range of price points. You’ll want to evaluate your needs and consider variables like the speed of your Internet connection, the devices you intend to connect and the features you anticipate using. My recommendation would be to look for a minimum wireless rating of AC1200, USB connectivity and management through a smartphone app.

Netis’ WF2780 Wireless AC1200 offers plenty of wireless performance at an extremely low price. While it lacks USB, you do get four external antennas (two for 2.4GHz and two for 5GHz), four gigabit Ethernet ports and the flexibility to use this device as a router, access point or repeater. Certain features are notably missing, but at under $60, this is an entry-level upgrade that most can afford.

Moving up to the mid-range, we find the TP-Link Archer C9. It features AC1900 wireless capable of 600 Mb/s on the 2.4GHz band and 1300 Mb/s on the 5GHz band. It has three antennas and a pair of USB ports, one of which is USB 3.0. There’s a 1GHz dual-core processor at the router’s heart and a TP-Link Tether smartphone app to ease setup and management. You’ll find the device for $130.

At the top end of the market is AC3200 wireless. There are several routers in this tier, including D-Link’s AC3200 Ultra Wi-Fi Router (DIR-890L/R). It features Tri-Band technology, which supports a 2.4GHz network at 600 Mb/s and two 5GHz networks at 1300 Mb/s each. To accomplish this, it has a dual-core processor and no fewer than six antennas. There’s also an available app for network management, dual USB ports and GbE wired connectivity. The Smart Connect feature can dynamically balance the wireless clients among the available bands to optimize performance and prevent older devices from slowing down the rest of the network. Plus, this router has the aesthetics of a stealth destroyer and the red metallic paint job of a sports car! Such specs do not come cheap; expect to pay $300.

Conclusion

Wireless routers are assuming an ever more important role as the centerpiece of the home network. With the increasing need for multiple, simultaneous continuous data streams, robust throughput is no longer a nice feature, but rather a necessity. This becomes even more imperative as streaming 4K video moves from a high-end niche into the mainstream. By taking into consideration such factors as the data load as well as the number of simultaneous users, enthusiasts shopping for wireless routers can choose the router that best fits their needs and budget.


Source: http://www.tomshardware.com/reviews/wireless-routers-101,4456.html

Tags: 802.11ac, 802.11n, Beamforming, MU-MIMO, SU-MIMO, USB, WEP, WPA, WPS