We never seem to get tired of demanding more from technology. We want to download more content and watch more videos. Many of you may also want to send more files or assignments to your colleagues in a short time. Guess what? Now you can do all this in a matter of seconds.

All hail 5G! Verizon rolled out 5G in four US cities in October 2018. According to some estimates, 5G could be up to 200 times faster than 4G LTE. That means information would reach your devices almost without delay. In other words, you may not have to rely on cloud computing for data transfer anymore.

From mobile phones to computers and laptops, many of the products we use every day rely on cloud computing. From uploading files to Dropbox to working remotely from home, the Cloud has made our lives way easier since the early 2000s.

Now, 5G is the next BIG thing on the Internet. It is, in fact, considered the next major technology driver for 2020 and beyond. Let's see how 5G could disrupt cloud computing over the next few years.

Buffering is going to be a thing of the past

Almost 5 billion people use mobile phones every day. Whether you watch a live video or listen to music online, your smartphone relies on cloud computing to deliver it. Because cloud services stream everything over the network, their performance depends on your Internet connection, so a slow connection means constant buffering. By the time the video starts to play, your favourite cricket match will most likely be over.

5G, on the other hand, offers peak speeds of roughly 5-12 gigabits per second. At those rates, a 5GB HD movie downloads in under ten seconds, and even 200GB takes only a few minutes. You can bid adieu to buffering: no more waiting for web pages to load or videos to start. This will put enormous pressure on cloud computing to make more content available on the network in a short time span.
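The arithmetic behind download times is simple: convert the file size from bytes to bits and divide by the link rate. The sketch below assumes an idealised link that sustains the quoted peak rate with no protocol overhead, which real networks never quite achieve; the function name is illustrative.

```python
def download_time_seconds(size_gigabytes: float, rate_gbps: float) -> float:
    """Idealised transfer time in seconds.

    size_gigabytes: file size in gigabytes (10^9 bytes)
    rate_gbps: sustained link rate in gigabits per second (assumed constant, no overhead)
    """
    size_gigabits = size_gigabytes * 8  # 1 byte = 8 bits
    return size_gigabits / rate_gbps

# Compare a 5 GB movie and a 200 GB archive at both ends of the quoted peak range.
for rate in (5, 12):
    print(f"5 GB at {rate} Gbps: {download_time_seconds(5, rate):.1f} s")
    print(f"200 GB at {rate} Gbps: {download_time_seconds(200, rate):.0f} s")
```

Real-world throughput is usually well below peak, so treat these figures as best-case lower bounds.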

Lower Latency will rule

Latency is the delay between a request and its response, for example between clicking a link and the web page's content starting to arrive. More precisely, it is the time data takes to travel between two devices and back. Cloud computing is associated with high latency, along with unpredictable Internet performance.
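One reason distant cloud servers add latency is physics: a signal cannot travel between two devices faster than light moves through the cable. The sketch below estimates the minimum round-trip time from distance alone. It assumes light in optical fibre covers roughly 200 km per millisecond (about two-thirds of its speed in a vacuum) and ignores routing, queuing and processing delays, which add more in practice.

```python
# Assumption: light in optical fibre travels at roughly 200,000 km/s, i.e. ~200 km per millisecond.
SPEED_IN_FIBRE_KM_PER_MS = 200.0

def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone.

    Ignores routing, queuing and server processing time, which only add to it.
    """
    return 2 * distance_km / SPEED_IN_FIBRE_KM_PER_MS

for d in (10, 100, 1000):
    print(f"{d:>5} km -> at least {min_round_trip_ms(d):.1f} ms round trip")
```

Under this assumption, a server 1,000 km away can never answer in less than about 10 ms, however fast the radio link is.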

This is why many businesses consider the public cloud unsuitable for latency-sensitive production applications. High latency in cloud computing has long been a problem for many kinds of businesses.

5G latency is expected to be as low as one millisecond. That could all but eliminate high latency and deliver near-instant results. Speed-critical applications such as remote surgery will also gain momentum with 5G, and other business models, such as autonomous cars, smart lamps and package-delivery drones, will become practical. All in all, you may do pretty well without using cloud computing as a computing platform.
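To see why a millisecond matters for autonomous cars, consider how far a vehicle moves while it waits for a response. The sketch below assumes a constant speed of 100 km/h; the 50 ms figure is a hypothetical slower round trip chosen for comparison, not a measured cloud latency.

```python
def distance_during_delay_m(speed_kmh: float, delay_ms: float) -> float:
    """Metres a vehicle travels at constant speed while waiting delay_ms milliseconds."""
    metres_per_second = speed_kmh * 1000 / 3600  # km/h -> m/s
    return metres_per_second * delay_ms / 1000   # ms -> s

SPEED_KMH = 100  # assumed motorway speed
for delay in (1, 50):  # 1 ms: 5G latency target; 50 ms: hypothetical slower round trip
    print(f"{delay:>2} ms at {SPEED_KMH} km/h -> {distance_during_delay_m(SPEED_KMH, delay):.2f} m")
```

At 100 km/h the car moves about 3 cm during a 1 ms delay, but roughly 1.4 m during 50 ms.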

Energy efficiency will increase to a huge extent

Energy consumption is one of the major challenges facing cloud computing, which serves data from centralised pools of resources. A cloud data centre houses many servers, cooling systems, cables and networking equipment. All of this consumes a lot of power, and generating that power releases a considerable amount of carbon dioxide into the environment. A concept known as green cloud computing has been proposed to curb this issue.

5G offers a data transmission rate almost 100 times higher than 4G. This higher rate lets data centres run more data-intensive operations without a matching increase in energy consumption. Used well, 5G could therefore reduce the environmental problems caused by cloud computing, since it promises to use less energy per unit of data while delivering higher speeds. Isn't that what we want?
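Network energy efficiency is often expressed as energy per bit: the power drawn divided by the data rate. The values below are hypothetical, chosen only to show that if throughput grows faster than power consumption, each bit costs less energy; they are not measurements of real 4G or 5G equipment.

```python
def energy_per_bit_nj(power_watts: float, throughput_gbps: float) -> float:
    """Energy per transmitted bit, in nanojoules.

    1 W = 1 J/s and 1 Gbps = 1e9 bit/s, so W / Gbps = 1e-9 J/bit = 1 nJ/bit.
    """
    return power_watts / throughput_gbps

# Hypothetical figures for illustration only.
older_link = energy_per_bit_nj(power_watts=500, throughput_gbps=1)
newer_link = energy_per_bit_nj(power_watts=1000, throughput_gbps=20)
print(f"older: {older_link:.0f} nJ/bit, newer: {newer_link:.0f} nJ/bit")
```

In this made-up example the newer link draws twice the power, yet spends a tenth of the energy on each bit.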

No shortage of storage requirements

Data stored in the Cloud is usually held offsite by a company that is not under your control, so you cannot fully customise your storage set-up. This has always been an issue for large businesses with complex storage needs. You also cannot access your stored data remotely without an Internet connection, and migrating data from one cloud service provider to another is difficult. Medium and large businesses are often unable to store massive amounts of data with a single cloud provider.

As mentioned earlier, 5G promises to meet the demand for far more online content. As the amount of content grows, so will the need for storage space. Devices such as smartphones will need more storage to hold the larger files they can download at 5G speeds. This will again put pressure on cloud computing technologies to provide more storage capacity across different devices.

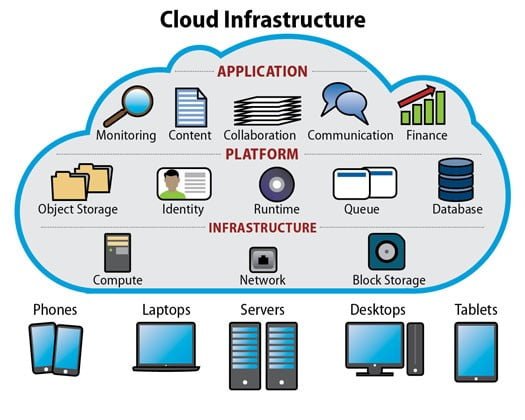

There will be a tweak in the infrastructure

Advanced technologies such as artificial intelligence (AI) and AR/VR have brought richer, more engaging user experiences to the cloud computing environment. This pushed cloud data centres to upgrade their infrastructure and processes to handle such demanding content and technologies. 5G is expected to have a similar effect on cloud computing: cloud providers may have to invest heavily in new infrastructure, as they did for AI.

5G is reportedly expected to drive data centres and other networking companies to invest around $326 billion by 2025, which is said to be almost 56% of their total expenses. This infrastructure rollout is described as more expensive than building a new electric grid or the national highway system, and it could transform the entire American economy.

Wrapping Up

Verizon is already offering 5G in four US cities. With steady progress, 5G is set to bring groundbreaking changes to the way we live, transfer data and use the Cloud. With speeds of several gigabits per second, 5G is likely to be a boon for all business owners, whatever the size of their business. If current trends hold, 5G even has the potential to eliminate cloud computing as we know it.

Source: https://www.techiexpert.com/5-ways-5g-will-disrupt-cloud-computing/

General structure of the base matrix used in the quasi-cyclic LDPC codes selected for the data channel in NR.
